Detecting hidden and complex emotions in parents, or in anyone, remains difficult for software. Although artificial intelligence has made great progress in emotion recognition, reliably identifying hidden and complex emotions is still a work in progress.

Researchers have tested software capable of identifying fine details of emotions that are invisible to the human eye. The program uses an ‘artificial net’ to map key features of the face, which lets it analyze the intensities of several different facial expressions at the same time.

The team from the University of Bristol and Manchester Metropolitan University collaborated with Bristol’s Children of the 90s research participants to examine how effectively computer tools can capture authentic human emotions in the context of normal family life. This included using video recorded at home by headcams worn by babies during interactions with their parents.

According to the findings, published in Frontiers, scientists can use machine learning techniques to accurately predict human judgments of parents’ facial expressions from the software’s decisions.

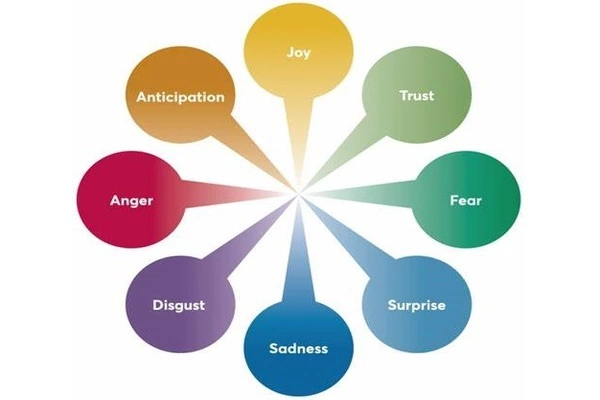

Lead author Romana Burgess, PhD student on the EPSRC Digital Health and Care CDT in the School of Electrical, Electronic and Mechanical Engineering at the University of Bristol, explained: “Humans experience complicated emotions – the algorithms tell us that someone can be 5% sad or 10% happy, for example.

“Using computational methods to detect facial expressions from video data can be very accurate when the videos are of high quality and represent optimal conditions – for instance, when videos are recorded in rooms with good lighting, when participants are sat face-on with the camera, and when glasses or long hair are kept from blocking the face.

“How these methods perform in the chaotic, real-world settings of family homes piqued our interest. The software detected a face in approximately 25% of the videos collected in real-world conditions, reflecting the difficulty of evaluating faces in such dynamic interactions.”

The team used data from the Children of the 90s health study – also known as the Avon Longitudinal Study of Parents and Children (ALSPAC). Parents were invited to attend a clinic at the University of Bristol when their babies were 6 months old.

As part of the ERC MHINT Headcam Study, parents were given two wearable headcams to take home and use during interactions with their babies. The headcams were worn by both parents and infants during feeding and play sessions.

The team then used ‘automatic facial coding’ software to computationally analyze parents’ facial expressions in the videos, and had human coders analyze the same facial expressions in the same videos. The scientists measured how frequently the software detected faces in the videos and how often the human coders and the software agreed on facial expressions. Finally, they used machine learning to predict the human judgments from the software’s decisions.
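The first two measurements described above can be illustrated with a short Python sketch. It is not the study's code: the data are invented, the function names `detection_rate` and `cohens_kappa` are hypothetical, and Cohen's kappa is only one common way to score agreement between two coders (the article does not say which agreement statistic the team used).

```python
from collections import Counter


def detection_rate(face_found):
    """Fraction of videos in which the software reported a face."""
    return sum(face_found) / len(face_found)


def cohens_kappa(human, machine):
    """Chance-corrected agreement between two equal-length label sequences."""
    if len(human) != len(machine):
        raise ValueError("label sequences must have the same length")
    n = len(human)
    # Observed agreement: share of items given the same label by both coders.
    observed = sum(h == m for h, m in zip(human, machine)) / n
    # Expected agreement if both coders labelled independently at their own base rates.
    h_counts, m_counts = Counter(human), Counter(machine)
    labels = set(human) | set(machine)
    expected = sum(h_counts[label] * m_counts[label] for label in labels) / n**2
    if expected == 1:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Invented example data (assumption): one flag per video, one label per coded clip.
face_found = [True, False, False, False]
human_labels = ["happy", "happy", "sad", "neutral", "happy", "sad"]
machine_labels = ["happy", "neutral", "sad", "neutral", "happy", "happy"]

print(f"detection rate: {detection_rate(face_found):.2f}")
print(f"Cohen's kappa:  {cohens_kappa(human_labels, machine_labels):.3f}")
```

A kappa near 1 would indicate that the software and the human coders almost always assign the same expression, while a value near 0 would indicate agreement no better than chance.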

Romana said: “Deploying automated facial analysis in the parents’ home environment could change how we detect early signs of mood or mental health disorders, such as postnatal depression. For instance, we might expect parents with depression to show more sad expressions and less happy facial expressions.”

“These conditions could be better understood through subtle nuances in parents’ facial expressions, providing early intervention opportunities that were previously unimaginable,” said Professor Rebecca Pearson of Manchester Metropolitan University, co-author and PI of the ERC research. “Most parents, for example, will strive to ‘mask’ their own distress in order to appear ‘fine’ to people around them. The software can detect more nuanced combinations, such as expressions that are a mix of grief and delight or that change quickly.”

The team now intends to investigate the use of automated facial coding in the home as a tool for understanding mood and mental health disorders and parent-infant interactions. This could help to usher in a new era of health monitoring by bringing cutting-edge science into the home.

“Our research used wearable headcams to capture genuine, unscripted emotions in everyday parent-infant interactions,” Romana concluded. “Together with the application of cutting-edge computer approaches, this means we can find subtleties that were previously undetectable by the human eye, transforming how we understand parents’ true feelings during interactions with their babies.”