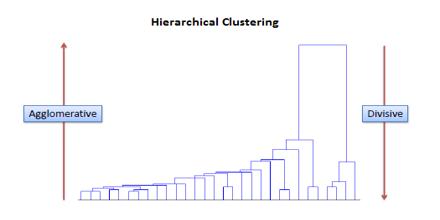

Hierarchical clustering requires two choices: a distance metric between observations, and a linkage criterion that defines the dissimilarity between two sets as a function of the pairwise distances between their observations. A distinct advantage of the method is that any valid measure of distance can be used. In fact, the observations themselves are not required; a matrix of pairwise distances is sufficient. Hierarchical clustering groups data over a variety of scales by building a cluster tree, or dendrogram. The tree is not a single set of clusters but a multilevel hierarchy, in which clusters at one level are joined to form clusters at the next level.
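To make the roles of the distance matrix and the linkage criterion concrete, here is a minimal pure-Python sketch of bottom-up (agglomerative) clustering. The function names `agglomerate` and `cut`, and the four-point distance matrix, are illustrative assumptions and not taken from any library. The loop is deliberately naive and only suitable for small inputs.

```python
from itertools import combinations


def agglomerate(dist, linkage=min):
    """Naive agglomerative clustering on a precomputed distance matrix.

    dist:    symmetric square matrix (list of lists) of pairwise distances.
    linkage: reduces the pairwise distances between two clusters to one
             dissimilarity (min = single linkage, max = complete linkage).
    Returns the merges in order as (cluster_a, cluster_b, height) tuples.
    """
    def dissimilarity(a, b):
        return linkage(dist[i][j] for i in a for j in b)

    clusters = [(i,) for i in range(len(dist))]
    merges = []
    while len(clusters) > 1:
        # Merge the two clusters that are closest under the linkage criterion.
        a, b = min(combinations(clusters, 2), key=lambda p: dissimilarity(*p))
        merges.append((a, b, dissimilarity(a, b)))
        clusters = [c for c in clusters if c not in (a, b)] + [a + b]
    return merges


def cut(merges, n_points, threshold):
    """Flat clusters from applying merges up to the given height.

    Assumes merge heights never decrease, which holds for single and
    complete linkage.
    """
    clusters = {(i,) for i in range(n_points)}
    for a, b, height in merges:
        if height > threshold:
            break
        clusters -= {a, b}
        clusters.add(a + b)
    return sorted(sorted(c) for c in clusters)


# Hypothetical distances between four observations; no coordinates are needed.
dist = [
    [0, 1, 5, 7],
    [1, 0, 4, 6],
    [5, 4, 0, 2],
    [7, 6, 2, 0],
]

merges = agglomerate(dist, linkage=min)   # single linkage
print(cut(merges, 4, threshold=0.5))      # every point on its own
print(cut(merges, 4, threshold=3))        # two clusters
print(cut(merges, 4, threshold=4))        # one cluster
```

The list of merges is the hierarchy itself: each entry joins two clusters from a lower level into one cluster at the next, and cutting at different heights gives groupings at different scales. Passing `linkage=max` switches to complete linkage without changing anything else.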

Hierarchical Clustering