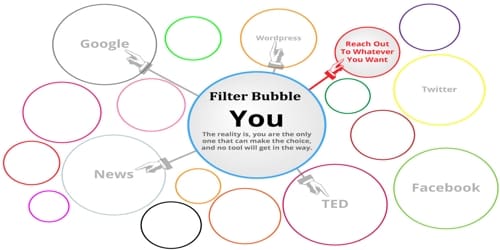

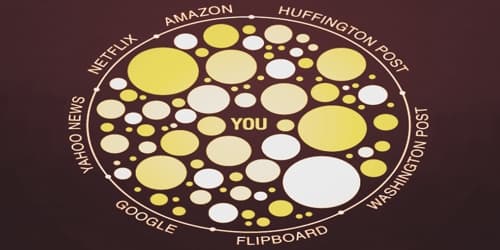

Filter Bubble

A filter bubble is a state of intellectual isolation that can occur when websites use algorithms to guess what information a user would want to see and then serve information to the user according to that guess. It is a form of algorithmic bias that skews or limits the information an individual user sees on the internet. The bias is caused by the weighted algorithms that search engines, social media sites, and marketers use to personalize the user experience (UX).

The bubble effect may have negative implications for civic discourse, according to Eli Pariser, but contrasting views regard the effect as minimal and addressable. The surprising result of the 2016 U.S. presidential election has been associated with the influence of social media platforms such as Twitter and Facebook. This has called into question the effects of the “filter bubble” phenomenon on user exposure to fake news and echo chambers and has spurred new interest in the term, with many concerned that the phenomenon may harm democracy.

The term filter bubble is often credited to Eli Pariser, whose 2011 book The Filter Bubble urged companies to become more transparent about their filtering practices. Pariser defined his concept of a filter bubble in more formal terms as “that personal ecosystem of information that’s been catered by these algorithms”. His idea of the filter bubble was popularized by the TED talk he gave in May 2011, in which he gave examples of how filter bubbles work and where they can be seen.

“Instead of a balanced information diet, we may end up with only information junk food.” The internet, which at first appears to be a window into the world’s information, may be showing you only part of the story. This is also bad for democracy, which depends on political discourse and discussion of issues to reach compromise. If everyone’s views become so polarized that compromise is impossible, the country is in trouble.

In The Filter Bubble, Pariser warns that a potential downside to filtered searching is that it “closes us off to new ideas, subjects, and important information”, and “creates the impression that our narrow self-interest is all that exists”. It is potentially harmful to both individuals and society, in his view. He criticized Google and Facebook for offering users “too much candy, and not enough carrots”. He warned that “invisible algorithmic editing of the web” may limit our exposure to new information and narrow our outlook.

Algorithmic websites, like many search engines and social media sites, show users content based on their past behavior. Depending on what you’ve clicked on in the past, the website shows you what it thinks you are most likely to engage with.
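The behavior-based selection described above can be illustrated with a minimal sketch. It assumes a toy model in which each item carries a single topic label and the site simply counts past clicks per topic; the names `rank_feed`, `click_history`, and `candidates` are hypothetical, and this is not the algorithm of any real platform.

```python
from collections import Counter


def rank_feed(click_history, candidates):
    """Order candidate items by how often the user clicked their topic before.

    Hypothetical toy model: real recommender systems use far richer signals.
    """
    topic_clicks = Counter(click_history)
    # Topics the user never clicked count as 0. sorted() is stable, so items
    # with equal counts keep their original relative order.
    return sorted(
        candidates,
        key=lambda item: topic_clicks[item["topic"]],
        reverse=True,
    )


# Assumed example data: topics of the items the user clicked in the past.
click_history = ["sports", "sports", "politics_left", "sports", "politics_left"]

candidates = [
    {"title": "Right-leaning op-ed", "topic": "politics_right"},
    {"title": "Local team wins final", "topic": "sports"},
    {"title": "Left-leaning op-ed", "topic": "politics_left"},
    {"title": "New telescope images", "topic": "science"},
]

# The feed has limited space, so only the top two items are shown.
feed = rank_feed(click_history, candidates)[:2]
print([item["title"] for item in feed])
```

In this sketch the two topics the user has never clicked rank last and fall outside the truncated feed, so the user gets no chance to click them, and their click counts stay at zero on the next visit. That feedback loop is the mechanism the term "bubble" refers to.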

Social media companies, like Facebook, want you to keep using their products. So rather than showing a feed of all available information, Facebook is selective about what it puts in your feed. People often assume that the information they see is unbiased when it is actually skewed toward their existing beliefs.

Filter bubbles on popular social media and personalized search sites can determine the particular content users see, often without their direct consent or awareness, because of the algorithms used to curate that content. Critics speculate that individuals may lose autonomy over their own social media experience and have their identities socially constructed as a result of the pervasiveness of filter bubbles.

Information Source: