Nvidia announced that it is adding support for its TensorRT-LLM SDK to Windows and to models such as Stable Diffusion, with the aim of making large language models (LLMs) and related tools run faster. TensorRT accelerates inference, the process in which a pretrained model draws on what it has learned and calculates probabilities to produce a result, such as a newly generated Stable Diffusion image. With this software, Nvidia hopes to play a larger role in the inference side of generative AI.
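To make "calculating probabilities to get a result" concrete, here is a minimal Python sketch of the final step of inference in a language model. It is an illustration only and not TensorRT-LLM code: the candidate words and their scores are made up, and a real model would produce scores for tens of thousands of tokens.

```python
import math

def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores a trained model might assign to three candidate next words.
candidates = ["cat", "dog", "car"]
scores = [2.0, 1.0, 0.1]

probabilities = softmax(scores)

# Pick the most likely candidate (real systems often sample instead).
best = candidates[probabilities.index(max(probabilities))]
print(best, [round(p, 3) for p in probabilities])
```

Tools like TensorRT aim to make the heavy computation that produces those scores run faster on the GPU; the probability step itself is comparatively cheap.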

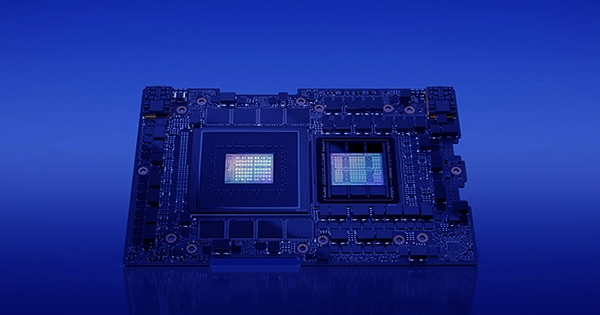

TensorRT-LLM breaks down LLMs such as Meta’s Llama 2 and other AI models such as Stability AI’s Stable Diffusion so that they can run faster on Nvidia’s H100 GPUs. According to the company, running LLMs through TensorRT-LLM provides an acceleration that “significantly improves the experience for more sophisticated LLM use,” such as writing and coding assistants.

In this way, Nvidia can provide not only the GPUs that train and run LLMs but also the software that makes models run faster, giving customers less reason to look for other ways to make generative AI more cost-effective. TensorRT-LLM will be “available publicly to anyone who wants to use or integrate it,” according to the company, and the SDK will be published on its website.

Nvidia already has a near-monopoly on the powerful GPUs that train LLMs like GPT-4, and training and running such a model requires a large number of them. Demand for its H100 GPUs has surged, with estimated prices reaching $40,000 per chip. The GH200, a newer version of the company’s GPU, will be available next year. It’s no surprise that Nvidia’s revenue grew to $13.5 billion in the second quarter.

However, the field of generative AI moves quickly, and new approaches for running LLMs without a large number of expensive GPUs have emerged. Microsoft and AMD have announced plans to develop their own chips in order to reduce their reliance on Nvidia.

Companies have also focused on the inference side of AI development. AMD intends to acquire software startup Nod.ai to help LLMs run specifically on AMD hardware, while companies like SambaNova already provide services that make it easier to run models.

For the time being, Nvidia is the hardware leader in generative AI, but it appears to be aiming for a future in which users don’t have to rely on purchasing large quantities of its GPUs.