New research indicates that neuromorphic chips are far more energy-efficient than non-neuromorphic hardware when running large deep learning networks. According to the study, neuromorphic technology is up to sixteen times more energy-efficient than other AI systems for such networks.

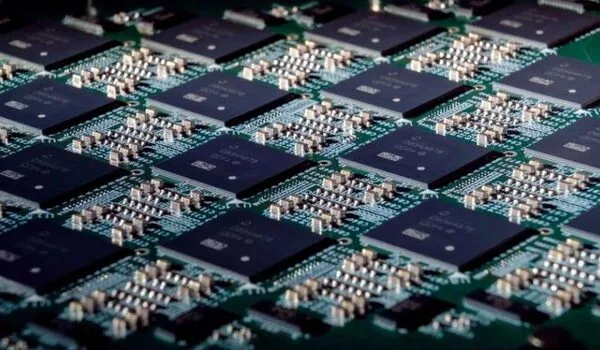

The Institute of Theoretical Computer Science at TU Graz and Intel Labs demonstrated experimentally for the first time that a large neural network can process sequences such as sentences while consuming four to sixteen times less energy on neuromorphic hardware than on non-neuromorphic hardware. The new study is based on Intel Labs’ Loihi neuromorphic research chip, which draws on insights from neuroscience to create chips that function similarly to the biological brain.

The research was funded by the Human Brain Project (HBP), one of the largest research projects in the world, with more than 500 scientists and engineers across Europe studying the human brain. The results appear in the paper “Memory for AI Applications in Spike-based Neuromorphic Hardware” (DOI 10.1038/s42256-022-00480-w), published in Nature Machine Intelligence.

Human brain as a role model

Artificial intelligence (AI) research worldwide aims at smart machines and intelligent computers that can autonomously recognize objects and infer the relationships between them. Energy consumption is a significant impediment to the widespread application of such AI methods. Neuromorphic technology, it is hoped, will provide a push in the right direction. It is modeled on the human brain, which is extremely energy-efficient: its hundred billion neurons draw only about 20 watts of power to process information, which is not much more than an average energy-saving light bulb.

In the research, the group focused on algorithms that work with temporal processes. For example, the system had to answer questions about a previously told story and grasp the relationships between objects or people from the context. The hardware tested consisted of 32 Loihi chips.

Loihi research chip: up to sixteen times more energy-efficient than non-neuromorphic hardware

“Our system is four to sixteen times more energy-efficient than other AI models on standard hardware,” says Philipp Plank, a doctoral student at the Institute of Theoretical Computer Science at TU Graz. Plank anticipates further efficiency gains as these models are migrated to the next generation of Loihi hardware, which significantly improves chip-to-chip communication performance.

“Intel’s Loihi research chips promise to bring AI gains, particularly by lowering their high energy cost,” Mike Davies, director of Intel’s Neuromorphic Computing Lab, said. “Our collaboration with TU Graz adds to the evidence that neuromorphic technology can improve the energy efficiency of today’s deep learning workloads by rethinking their implementation from a biological standpoint.”

Mimicking human short-term memory

In their neuromorphic network, the group reproduced a presumed memory mechanism of the brain, as Wolfgang Maass, Philipp Plank’s doctoral supervisor at the Institute of Theoretical Computer Science, explains: “Experiments have shown that the human brain can store information for a short period of time even when there is no neural activity, namely in what are known as ‘internal variables’ of neurons. According to simulations, a fatigue mechanism of a subset of neurons is required for this short-term memory.”

Direct proof is lacking because these internal variables cannot yet be measured. If the mechanism holds, however, the network only needs to test which neurons are currently fatigued in order to reconstruct what information it has previously processed. In other words, previous information is stored in the non-activity of neurons, and non-activity consumes the least energy.
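The fatigue idea can be illustrated with a toy model. The following is a minimal sketch, not the network from the paper or code for Loihi: it assumes a leaky integrate-and-fire neuron whose firing threshold rises after each spike and decays slowly. The function name, time constants, and step sizes are all hypothetical values chosen for illustration.

```python
import math

def simulate_adaptive_neuron(input_current, dt=1.0, tau_mem=20.0,
                             tau_adapt=500.0, base_threshold=1.0,
                             adapt_step=0.5):
    """Toy leaky integrate-and-fire neuron with a slowly decaying fatigue term.

    All parameter values are illustrative assumptions.
    Returns (spikes, fatigue_trace), one entry per time step.
    """
    decay_mem = math.exp(-dt / tau_mem)      # fast decay of membrane potential
    decay_adapt = math.exp(-dt / tau_adapt)  # slow decay of fatigue
    v = 0.0        # membrane potential
    fatigue = 0.0  # internal variable that outlives the spiking activity
    spikes, fatigue_trace = [], []
    for current in input_current:
        v = decay_mem * v + current
        fatigue *= decay_adapt
        if v >= base_threshold + fatigue:
            spikes.append(1)
            v = 0.0                # reset after a spike
            fatigue += adapt_step  # each spike makes the neuron harder to excite
        else:
            spikes.append(0)
        fatigue_trace.append(fatigue)
    return spikes, fatigue_trace

# 50 steps of input followed by 200 silent steps.
stimulus = [0.3] * 50 + [0.0] * 200
spikes, fatigue = simulate_adaptive_neuron(stimulus)

# A neuron that never received input, for comparison.
_, idle_fatigue = simulate_adaptive_neuron([0.0] * 250)

# Reading out the fatigue value tells the two histories apart,
# even though neither neuron is spiking at the end.
was_recently_active = fatigue[-1] > idle_fatigue[-1]
```

In this sketch the stimulated neuron is silent during the last 200 steps, yet its fatigue value is still above zero, so its earlier activity can be recovered without any further spikes.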

Symbiosis of recurrent and feed-forward network

For this purpose, the researchers link two types of deep learning networks. Feedback neural networks, also known as recurrent networks, are in charge of “short-term memory.” Many of these recurrent modules filter out and store potentially relevant information from the input signal. A feed-forward network then determines which of the discovered relationships are critical for completing the task at hand. Meaningless relationships are filtered out, and neurons only fire in modules where relevant information has been found. This sparse firing is what ultimately saves energy.
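The gating step can be pictured with a deliberately simplified sketch. It is not the architecture from the paper: it assumes each recurrent module exposes a short feature vector, that a one-layer feed-forward scorer decides relevance, and that spike count serves as a rough proxy for energy. All names, weights, and the spikes-per-module figure are hypothetical.

```python
def relevance_score(features, weights, bias):
    """One-layer feed-forward scorer: weighted sum followed by a step function."""
    total = sum(f * w for f, w in zip(features, weights)) + bias
    return 1 if total > 0 else 0

def gated_activity(module_outputs, weights, bias, spikes_per_active_module=10):
    """Return (active module indices, total spikes) when only relevant modules fire."""
    active = [i for i, feats in enumerate(module_outputs)
              if relevance_score(feats, weights, bias)]
    return active, len(active) * spikes_per_active_module

# Hypothetical feature vectors stored by four recurrent modules.
modules = [[0.9, 0.8], [0.1, 0.0], [0.2, 0.1], [0.7, 0.9]]

active, gated_spikes = gated_activity(modules, weights=[1.0, 1.0], bias=-1.0)
ungated_spikes = len(modules) * 10  # every module firing, for comparison
```

With these made-up numbers only two of the four modules pass the relevance check, so the gated network emits half as many spikes as one in which every module fires.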

“Recurrent neural structures are expected to provide the greatest gains for applications running on neuromorphic hardware in the future,” Davies said. “Neuromorphic hardware like Loihi is uniquely suited to facilitate the fast, sparse and unpredictable patterns of network activity that we observe in the brain and need for the most energy efficient AI applications.”