Engineers at the University of Colorado Boulder are drawing on advances in artificial intelligence (AI) to develop a new kind of walking stick for people who are blind or visually impaired.

Think of it as assistive technology meets Silicon Valley.

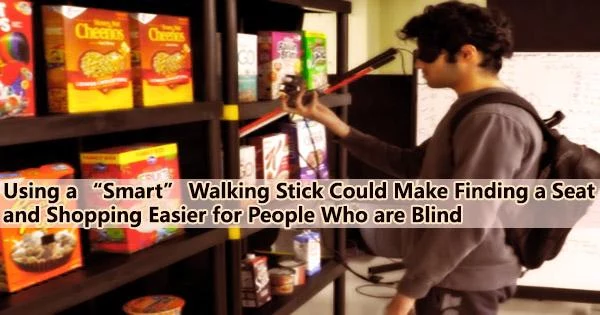

According to the researchers, their “smart” walking stick could one day help blind people handle everyday tasks in a world built for sighted people, such as shopping for cereal at the grocery store or choosing a secluded seat in a crowded diner.

“I really enjoy grocery shopping and spend a significant amount of time in the store,” said Shivendra Agrawal, a doctoral student in the Department of Computer Science. “A lot of people can’t do that, however, and it can be really restrictive. We think this is a solvable problem.”

In a study published in October, Agrawal and his colleagues in the Collaborative Artificial Intelligence and Robotics Lab got one step closer to solving it.

The team’s walking stick resembles the white-and-red canes you can buy at Walmart. But it also includes a few add-ons: Using a camera and computer vision technology, the walking stick maps and catalogs the world around it. It then guides the user with vibrations in the handle and spoken instructions such as “reach a little bit to your right.”

“The device isn’t supposed to be a substitute for designing places like grocery stores to be more accessible,” Agrawal said. But he hopes his team’s prototype will show that, in some cases, AI can help millions of Americans become more independent.

“AI and computer vision are improving, and people are using them to build self-driving cars and similar inventions,” Agrawal said. “But these technologies also have the potential to improve quality of life for many people.”

Take a seat

Agrawal and his colleagues first explored that potential by tackling a familiar problem: Where do I sit?

“Imagine you’re in a café,” he said. “You don’t want to sit just anywhere. You usually take a seat close to the walls to preserve your privacy, and you usually don’t like to sit face-to-face with a stranger.”

Prior research suggests that making these kinds of judgments is a priority for people who are blind or visually impaired. To test whether their smart walking stick could help, the researchers set up a café of sorts in their lab, complete with a few chairs, customers and obstacles.

Participants in the study put on a backpack containing a laptop and picked up the smart walking stick. They turned in place to scan the room with a camera positioned near the cane handle. Much like the software in a self-driving car, algorithms running on the laptop recognized the different elements of the room and then determined the best path to a suitable seat.
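The article does not say which route-finding method the team used. As a rough illustration of the general idea only, the sketch below runs a breadth-first search over a hypothetical grid map of a room, where `1` marks an obstacle and `0` marks free floor. The grid, the function name `shortest_path` and the coordinates are all assumptions made for this example, not details from the study.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid (1 = obstacle, 0 = free).

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached. Illustrative only; not the researchers' planner.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical room: a table (the 1s) sits between the door and the seat.
cafe = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
route = shortest_path(cafe, (0, 0), (2, 3))
```

A real system would also have to build the map from camera images and account for moving people, which this toy example leaves out.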

The team reported its findings this fall at the International Conference on Intelligent Robots and Systems in Kyoto, Japan. Researchers on the study included Bradley Hayes, assistant professor of computer science, and doctoral student Mary Etta West.

The study showed promising results: Subjects were able to find the right chair in 10 out of 12 trials with varying levels of difficulty. So far, the subjects have all been sighted people wearing blindfolds. But once the technology is more dependable, the researchers intend to test and refine their device by working with people who are blind or visually impaired.

“Shivendra’s work is the perfect combination of technical innovation and impactful application, going beyond navigation to bring advancements in underexplored areas, such as assisting people with visual impairment with social convention adherence or finding and grasping objects,” Hayes said.

Let’s go shopping

Next up for the group: grocery shopping.

In new research, which the team hasn’t yet published, Agrawal and his colleagues adapted their device for a task that can be daunting for anyone: finding and grasping products in aisles filled with dozens of similar-looking and similar-feeling choices.

Once more, the group created an improvised setting in their lab: this time, a grocery store shelf stocked with cereal. The researchers loaded a database of product photos, such as boxes of Honey Nut Cheerios or Apple Jacks, into their software. Study subjects then used the walking stick to scan the shelf, searching for the product they wanted.

“It assigns a score to the objects present, selecting what is the most likely product,” Agrawal said. “Then the system issues commands like ‘move a little bit to your left.’”
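The scoring-and-guidance loop Agrawal describes might look something like the sketch below. It is a simplified illustration under stated assumptions, not the team’s software: the `Detection` fields, the 0.5 score threshold, the 3-centimeter tolerance and the fallback phrases are all hypothetical, and only the “move a little bit to your left” wording comes from the article.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    name: str         # product the detector thinks it sees
    score: float      # hypothetical match score for the wanted product, 0 to 1
    offset_cm: float  # horizontal distance from the hand; negative = left


def most_likely(detections, min_score=0.5):
    """Pick the highest-scoring detection, or None if nothing is convincing."""
    best = max(detections, key=lambda d: d.score, default=None)
    if best is None or best.score < min_score:
        return None
    return best


def spoken_command(target, tolerance_cm=3.0):
    """Turn the target's position into a short spoken instruction."""
    if target is None:
        return "keep scanning the shelf"
    if target.offset_cm < -tolerance_cm:
        return "move a little bit to your left"
    if target.offset_cm > tolerance_cm:
        return "move a little bit to your right"
    return "reach forward"


# Hypothetical scan of a cereal shelf while looking for Honey Nut Cheerios.
shelf = [
    Detection("Apple Jacks", 0.12, 20.0),
    Detection("Honey Nut Cheerios", 0.91, -14.0),
]
command = spoken_command(most_likely(shelf))
```

The threshold keeps the system from steering the user toward a weak match when the wanted product is not on the shelf at all.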

He added that it will be a while before the team’s walking stick makes it into the hands of real shoppers. For instance, the group hopes to make the system more compact by designing it to run on a standard smartphone attached to a cane.

Still, the human-robot interaction researchers hope that their early findings will encourage other experts to reconsider what robotics and AI are capable of.

“Our aim is to make this technology mature but also attract other researchers into this field of assistive robotics,” Agrawal said. “We think assistive robotics has the potential to change the world.”