Software Quality

1.1 Definition of Quality

Industry-accepted definitions of quality are “conformance to requirements” (from Philip Crosby) and “fit for use” (from Dr. Joseph Juran and Dr. W. Edwards Deming). These two definitions are not contradictory.

Meeting requirements is a producer’s view of quality. This is the view of the organization responsible for the project and processes, and the products and services acquired, developed, or maintained by those processes. Meeting requirements means that the person building the product does so in accordance with the requirements. Requirements can be very complete or they can be simple, but they must be defined in a measurable format, so it can be determined whether they have been met. The producer’s view of quality has these four characteristics:

- Doing the right thing

- Doing it the right way

- Doing it right the first time

- Doing it on time without exceeding cost

Being “fit for use” is the customer’s definition. The customer is the end user of the products or services. Fit for use means that the product or service meets the customer’s needs regardless of the product requirements. Of the two definitions of quality, “fit for use” is the more important. The customer’s view of quality has these characteristics:

- Receiving the right product for their use

- Being satisfied that their needs have been met

- Meeting their expectations

- Being treated with integrity, courtesy, and respect

1.2 How to Minimize Two Quality Gaps

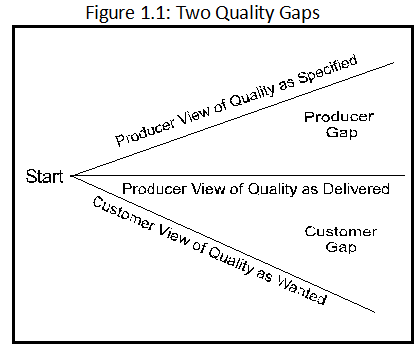

Most Information Technology (IT) groups have two quality gaps: the producer gap and the customer gap (see Figure 1.1). The producer gap is the difference between what is specified (the documented requirements and internal standards) and what is delivered (what is actually built). The customer gap is the difference between what the producer actually delivered and what the customer wanted.
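As a rough illustration of the two definitions, the sketch below treats each gap as a pair of set differences, assuming the requirements, the delivered system, and the customer's needs can each be reduced to a list of named features. The feature names and the `gap` helper are hypothetical.

```python
# Hypothetical feature names for one release; real gaps would be assessed
# against documented requirements, standards, and customer research.
specified = {"login", "search", "export_pdf", "audit_log"}  # documented requirements
delivered = {"login", "search", "export_csv"}               # what was actually built
wanted = {"login", "search", "export_pdf", "bulk_upload"}   # what the customer needed


def gap(expected: set[str], actual: set[str]) -> dict[str, set[str]]:
    """Split a gap into expected-but-missing and present-but-unexpected items."""
    return {"missing": expected - actual, "unexpected": actual - expected}


producer_gap = gap(specified, delivered)  # specified vs. delivered
customer_gap = gap(wanted, delivered)     # wanted vs. delivered

print("producer gap:", producer_gap)
print("customer gap:", customer_gap)
```

Note that closing the producer gap alone (delivering exactly what was specified) would still leave `bulk_upload` missing from the customer gap, which is why the two gaps are treated separately.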

Closing these two gaps is the responsibility of the quality function. The quality function must first improve the processes to the point where the producer can develop the products according to requirements received and its own internal standards. Closing the producer’s gap enables the IT function to provide its customers consistency in what it can produce. This has been referred to as the “McDonald’s effect” – at any McDonald’s in the world, a Big Mac should taste the same. It doesn’t mean that every customer likes the Big Mac or that it meets everyone’s needs, but, rather, that McDonald’s has now produced consistency in its delivered product.

Closing the customer gap requires the quality function to understand the true needs of the customer. This can be done through customer surveys, Joint Application Development (JAD) sessions, and greater user involvement throughout the process of building information products. The processes can then be changed to close the customer gap, maintaining consistency while producing the products and services the customer needs.

1.3 Commonly Accepted Quality Attributes

| Attributes | Definitions |
| --- | --- |
| Correctness | The extent to which a program satisfies its specifications and fulfills the user’s mission and goals |
| Reliability | The extent to which a program can be expected to perform its intended function with required precision |
| Efficiency | The amount of computing resources and code required by a program to perform a function |
| Integrity | The extent to which access to software or data by unauthorized persons can be controlled |
| Usability | The effort required for learning, operating, preparing input, and interpreting output of a program |
| Maintainability | The effort required for locating and fixing an error in an operational program |
| Testability | The effort required for testing a program to ensure it performs its intended function |
| Flexibility | The effort required for modifying an operational program |
| Portability | The effort required for transferring a program from one hardware configuration and/or software system environment to another |
| Reusability | The extent to which a program can be used in other applications, related to the packaging and scope of the functions that the programs perform |
| Interoperability | The effort required to couple one system with another |

Table 1: Commonly accepted software quality attributes

1.4 Quality Challenges

People say they want quality; however, their actions may not support this view for the following reasons:

- Many think that defect-free products and services are not practical or economical, and thus believe some level of defects is normal and acceptable. (This is called Acceptable Quality Level, or AQL.) Quality experts agree that AQL is not a suitable definition of quality. As long as management is willing to “accept” defective products, the entire quality program will be in jeopardy.

- Quality is frequently associated with cost, meaning that high quality is synonymous with high cost. (This is confusion between quality of design and quality of conformance.) Organizations may be reluctant to allocate resources for quality assurance, as they do not see an immediate payback.

- Quality by definition calls for requirements/specifications in enough detail so that the products produced can be quantitatively measured against those specifications. Few organizations are willing to expend the effort to produce requirements/specifications at the level of detail required for quantitative measurement.

- Many technical personnel believe that standards inhibit their creativity and thus do not strive for compliance with standards. However, for quality to happen there must be well-defined standards and procedures that are followed.

The contributors to poor quality in many organizations can be categorized as either lack of involvement by management, or lack of knowledge about quality. Following are some of the specific contributors for these two categories:

- Lack of involvement by management

– Management’s unwillingness to accept full responsibility for all defects

– Failure to determine the cost associated with defects (i.e., poor quality)

– Failure to initiate a program to “manage defects”

– Lack of emphasis on processes and measurement

– Failure to enforce standards

– Failure to reward people for following processes

- Lack of knowledge about quality

– Lack of a quality vocabulary, which makes it difficult to communicate quality problems and objectives

– Lack of knowledge of the principles of quality (i.e., what is necessary to make it happen)

– No categorization scheme for defects (i.e., naming of defects by type)

– No information on the occurrence of defects by type, by frequency, and by location

– Unknown defect expectation rates for new products

– Defect-prone processes unknown or unidentified

– Defect-prone products unknown or unidentified

– Economical means of identifying defects unknown

– Proven quality solutions are unknown and unused
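Several of the knowledge gaps listed above (no categorization scheme for defects, no data on defects by type, frequency, and location, unidentified defect-prone products) can begin to be closed with simple record keeping. A minimal sketch, assuming a hypothetical `Defect` record with only a type and a location, using the standard library's `collections.Counter` to tally them:

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Defect:
    # Hypothetical defect record: a category name and where it was found.
    defect_type: str  # e.g. "logic error", "missing requirement"
    location: str     # e.g. the module or product where it was found


# Illustrative log; real data would come from reviews and test runs.
defects = [
    Defect("logic error", "billing"),
    Defect("logic error", "billing"),
    Defect("missing requirement", "reports"),
    Defect("interface error", "billing"),
    Defect("logic error", "reports"),
]

by_type = Counter(d.defect_type for d in defects)
by_location = Counter(d.location for d in defects)
by_type_and_location = Counter((d.defect_type, d.location) for d in defects)

# most_common() orders categories from most to least frequent, which points
# at the defect-prone products and processes worth investigating first.
print(by_type.most_common())
print(by_location.most_common(1))  # [('billing', 3)]
```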

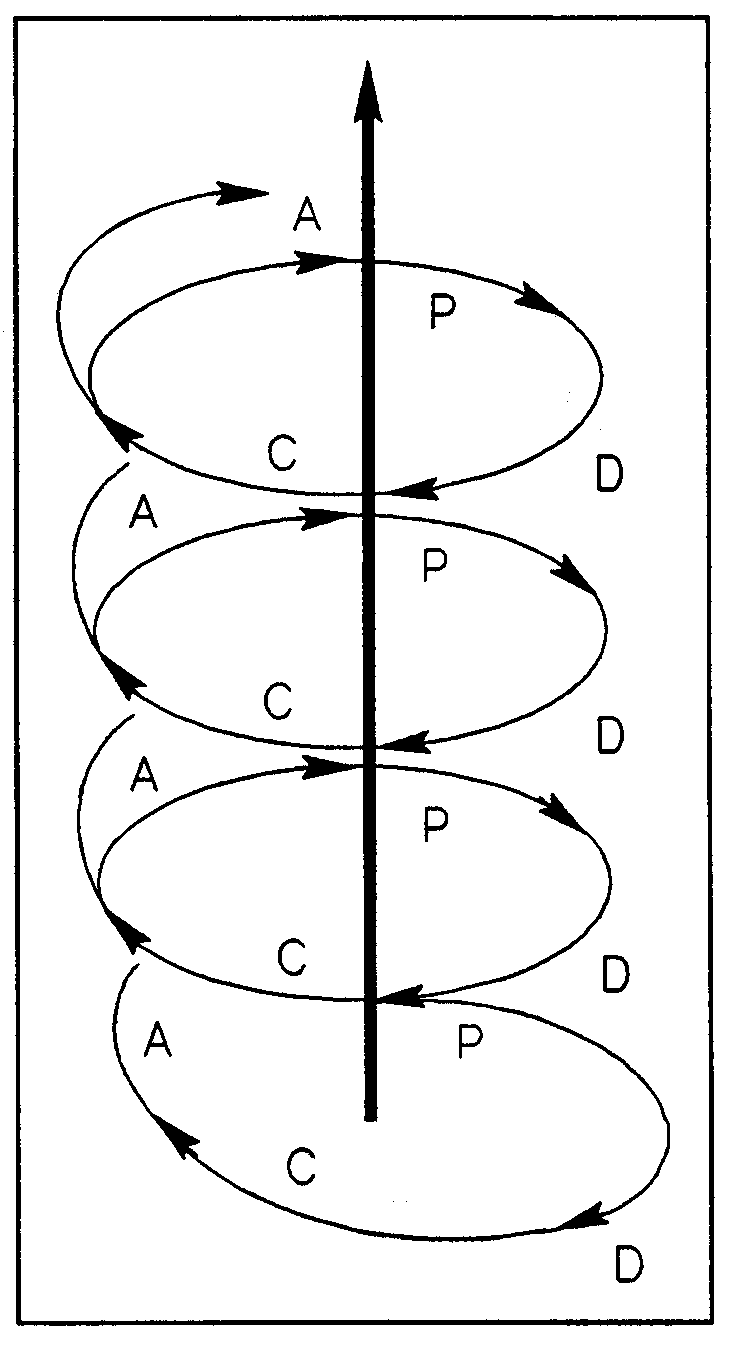

1.5 The PDCA Cycle

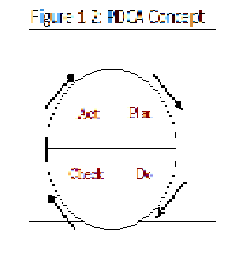

A major premise of a quality management environment is an emphasis on continuous improvement. The approach to continuous improvement is best illustrated using the PDCA cycle, which was developed in the 1930s by Dr. Shewhart of the Bell System. The cycle comprises the four steps of Plan, Do, Check, and Act. It is also called the Deming Wheel, and is one of the key concepts of quality.

- Plan (P): Devise a plan – Define the objective, expressing it numerically if possible. Clearly describe the goals and policies needed to attain the objective at this stage. Determine the procedures and conditions for the means and methods that will be used to achieve the objective.

- Do (D): Execute the plan – Create the conditions and perform the necessary teaching and training to ensure everyone understands the objectives and the plan. Teach workers the procedures and skills they need to fulfill the plan and thoroughly understand the job. Then perform the work according to these procedures.

- Check (C): Check the results – As often as possible, check to determine whether work is progressing according to the plan and whether the expected results are obtained. Check for performance of the procedures, changes in conditions, or abnormalities that may appear.

- Act (A): Take the necessary action – If the check reveals that the work is not being performed according to plan, or if results are not what were anticipated, devise measures for appropriate action. Look for the cause of the abnormality to prevent its recurrence. Sometimes workers may need to be retrained and procedures revised. The next plan should reflect these changes and define them in more detail.

The PDCA procedures ensure that the quality of the products and services meets expectations, and that the anticipated budget and delivery date are fulfilled. Sometimes preoccupation with current concerns limits the ability to achieve optimal results. Repeatedly going around the PDCA circle can improve the quality of the work and work methods, and obtain the desired results. This concept can be seen in the ascending spiral of Figure 1.3.
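The cycle can be sketched as a loop. In this toy model, the metric (defects per thousand lines of code), the baseline, and the assumption that each additional review hour removes 1.5 defects per KLOC are all invented for illustration; only the Plan, Do, Check, Act structure comes from the text.

```python
# Toy PDCA loop. The metric (defects per KLOC), the baseline of 10.0, and the
# assumption that each extra review hour removes 1.5 defects/KLOC are invented.


def plan(target: float, review_hours: int) -> dict:
    # Plan: state the objective numerically and choose the method to reach it.
    return {"target": target, "review_hours": review_hours}


def do(p: dict) -> float:
    # Do: carry out the work per the plan and return the measured defect rate.
    baseline = 10.0
    return max(baseline - 1.5 * p["review_hours"], 0.0)


def check(p: dict, measured: float) -> bool:
    # Check: compare the measured result with the planned objective.
    return measured <= p["target"]


def act(p: dict) -> dict:
    # Act: revise the procedure; the next plan reflects the change.
    return plan(p["target"], p["review_hours"] + 1)


def pdca(target: float, max_cycles: int = 10) -> list[float]:
    p = plan(target, review_hours=2)
    history: list[float] = []
    for _ in range(max_cycles):
        measured = do(p)
        history.append(measured)
        if check(p, measured):
            break  # objective met; a real program would now set a tighter one
        p = act(p)
    return history


print(pdca(3.0))  # [7.0, 5.5, 4.0, 2.5]
```

The loop stops once the objective is met to keep the example finite; under the continuous-improvement premise, the next turn of the spiral would begin with a new, more demanding plan.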

1.6 The Three Key Principles of Quality

Everyone is responsible for quality, but senior management must emphasize and initiate quality improvement, and then move it down through the organization to the individual employees. The following three quality principles must be in place for quality to happen:

- Management is responsible for quality – Quality cannot be delegated effectively. Management must accept the responsibility for the quality of the products produced in their organization; otherwise, quality will not happen. A quality function is only a catalyst in making quality happen. The quality function assists management in building quality information systems by monitoring quality and making recommendations to management about areas where quality can be improved. As the quality function is a staff function, not management, it cannot dictate quality for the organization. Only management can make quality happen.

- Producers must use effective quality control – All of the parties and activities involved in producing a product must be involved in controlling the quality of those products. This means that the workers will be actively involved in the establishment of their own standards and procedures.

- Quality is a journey, not a destination – The objective of the quality program must be continuous improvement. The end objective of the quality process must be satisfied customers.

1.7 Quality Control and Quality Assurance

Very few individuals can differentiate between quality control and quality assurance. Most quality assurance groups, in fact, practice quality control. This section differentiates between the two and describes how to distinguish a control practice from an assurance practice.

Quality means meeting requirements and meeting customer needs, which means a defect-free product from both the producer’s and the customer’s viewpoint. Both quality control and quality assurance are used to make quality happen. Of the two, quality assurance is the more important.

Quality is an attribute of a product. A product is something produced, such as a requirement document, test data, source code, load module, or terminal screen. Another type of product is a service that is performed, such as meetings with customers, help desk activities, and training sessions. Services are a form of product and therefore also have attributes. For example, an agenda might be a quality attribute of a meeting.

A process is the set of activities that is performed to produce a product. Quality is achieved through processes. Processes have the advantage of being able to replicate a product time and time again. Even in data processing, the process is able to replicate similar products with the same quality characteristics.

Quality assurance is associated with a process. Once processes are consistent, they can “assure” that the same level of quality will be incorporated into each product produced by that process.

Quality Control

Quality control (QC) is defined as the processes and methods used to compare product quality to requirements and applicable standards, and the action taken when a nonconformance is detected. QC uses reviews and testing to focus on the detection and correction of defects before shipment of products.
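QC only works if requirements are stated in measurable terms, as noted in the definition of quality earlier. A minimal sketch of the compare-and-act step, assuming a hypothetical `Requirement` type whose check is a predicate over measured product attributes; the attribute names and thresholds are invented:

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Requirement:
    # Hypothetical measurable requirement: an ID, a description, and a check.
    req_id: str
    description: str
    is_met: Callable[[dict], bool]


# Illustrative measurements taken from one build of a product.
product = {"response_time_s": 2.7, "max_users": 500, "has_audit_log": True}

requirements = [
    Requirement("R1", "Response time under 2 seconds", lambda p: p["response_time_s"] < 2.0),
    Requirement("R2", "At least 500 concurrent users", lambda p: p["max_users"] >= 500),
    Requirement("R3", "Audit log present", lambda p: p["has_audit_log"]),
]


def quality_control(product: dict, reqs: list[Requirement]) -> list[str]:
    """Compare the product to each requirement and return the nonconformances."""
    return [r.req_id for r in reqs if not r.is_met(product)]


nonconformances = quality_control(product, requirements)
if nonconformances:
    # Action on nonconformance: here, simply hold shipment and report.
    print("Do not ship; fix:", nonconformances)  # Do not ship; fix: ['R1']
```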

Quality control should be the responsibility of the organizational unit producing the product and should be integrated into the work activities. Ideally the same group that builds the product performs the control function; however, some organizations establish a separate group or department to check the product.

Impediments to QC include the following:

- Quality control is often viewed as a police action

- IT is often considered an art

- Unclear or ineffective standards and processes

- Lack of process training

Quality Assurance

Quality assurance (QA) is the set of activities (including facilitation, training, measurement, and analysis) needed to provide adequate confidence that processes are established and continuously improved in order to produce products or services that conform to requirements and are fit for use.

QA is a staff function that prevents problems by heading them off, and by advising restraint and redirection at the proper time. It is also a catalytic function that should promote quality concepts, and encourage quality attitudes and discipline on the part of management and workers. Successful QA managers know how to make people quality conscious and to make them recognize the personal and organizational benefits of quality.

The major impediments to QA come from management, which is typically results oriented, and sees little need for a function that emphasizes managing and controlling processes. Thus, many of the impediments to QA are associated with processes, and include the following:

- Management does not insist on compliance with processes

- Workers are not convinced of the value of processes

- Processes become obsolete

- Processes are difficult to use

- Workers lack training in processes

- Processes are not measurable

- Measurement can threaten employees

- Processes do not focus on critical aspects of products

1.8 Differentiate between QC and QA

| Quality Control | Quality Assurance |
| --- | --- |
| QC relates to a specific product or service. | QA helps establish processes. |
| QC verifies whether particular attributes exist, or do not exist, in a specific product or service. | QA sets up measurement programs to evaluate processes. |
| QC identifies defects for the primary purpose of correcting defects. | QA identifies weaknesses in processes and improves them. |
| QC is the responsibility of the worker. | QA is concerned with all of the products that will ever be produced by a process. |

Software Testing Methodologies

2.1 Overview

There are various methodologies available for developing and testing software. The choice of methodology depends on factors such as the nature of the project, the project schedule, and resource availability. Although most software development projects involve periodic testing, some methodologies focus on getting input from testing early in the cycle rather than waiting until a working model of the system is ready. Methodologies that require early test involvement have several advantages, but they also involve tradeoffs in terms of project management, schedule, customer interaction, budget, and communication among team members.

Various generic software development life cycle methodologies are available for executing software development projects. Although each methodology is designed for a specific purpose and has its own advantages and disadvantages, most methodologies divide the life cycle into phases and share tasks across these phases. This section briefly summarizes common methodologies used for software development and describes their relationship to testing.

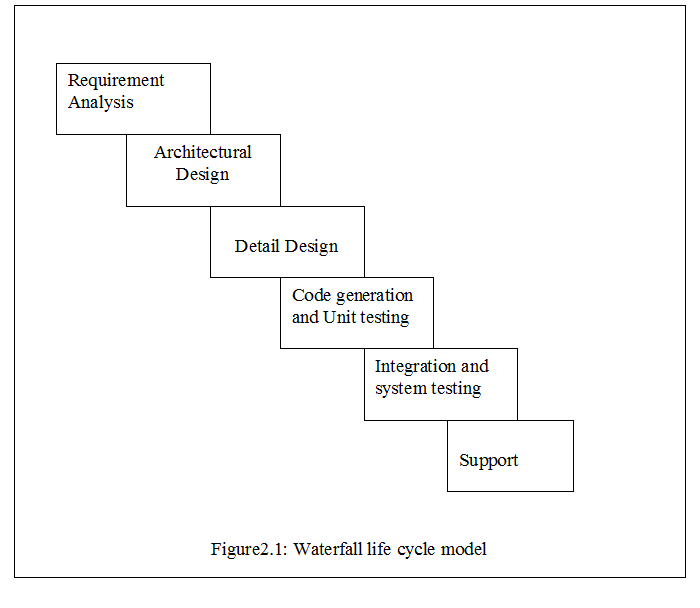

2.2 Waterfall Model

The waterfall model is also known as the linear sequential model because of its sequential approach. It is one of the earliest structured models for software development. It begins at the system level and progresses through analysis, design, coding, testing, and support. It consists of the following sequential phases through which the development life cycle progresses:

System/information engineering and modeling: In this phase, the team considers the various aspects of the targeted business process, determines which aspects are worth incorporating into a system, and evaluates various approaches to building the required software.

Requirement analysis: In this phase, software requirements are captured in such a way that they can be translated into actual use cases for the system. The requirements can derive from use cases, performance goals, target deployment, and so on.

System design: System design is actually a multistep process that focuses on four distinct attributes of a program: data structure, software architecture, interface representation, and procedural detail. The design process translates requirements into a representation of the software that can be assessed for quality before coding begins. An architecture and design review is conducted at the end of this phase to ensure that the design conforms to the previously defined requirements.

Coding and unit testing: The design must be translated into machine-readable form, which is the task of the code generation phase. If the design is performed in a detailed manner, code generation can be accomplished mechanically.

Integration and system testing: In this phase, all of the modules are integrated into the system and tested as a single system against all of the use cases, to make sure that the modules together meet the requirements.

Support: Software will undoubtedly undergo change after it is delivered to the customer. Changes occur because errors have been encountered or because the customer requires functional or performance enhancements. Software support (maintenance) reapplies each of the preceding phases to an existing program rather than a new one.

The waterfall model has the following advantages:

- It allows compartmentalizing the life cycle into various phases, which allows planning the resources and effort required through the development process.

- It enforces testing in every stage in the form of reviews and unit testing. You conduct design reviews, code reviews, unit testing, and integration testing during the stages of the life cycle.

- It allows expectations for deliverables to be set after each phase.

The waterfall model has the following disadvantages:

- A working version of the software is not available until late in the life cycle. For this reason, problems may not be detected until the system testing phase, where they may be more costly to fix than they would have been earlier in the life cycle.

- It is often difficult for the customer to state all requirements explicitly. The waterfall model requires this and has difficulty accommodating the natural uncertainty that exists at the beginning of many projects.

- When an application is in the system testing phase, it is difficult to change something that was not carefully considered in the system design phase. The emphasis on early planning tends to delay or restrict the amount of change that the testing effort can instigate, which is not the case when a working model is tested for immediate feedback.

- For a phase to begin, the preceding phase must be complete; for example, the system design phase cannot begin until the requirement analysis phase is complete and the requirements are frozen. As a result, the waterfall model is not able to accommodate uncertainties that may persist after a phase is completed. These uncertainties may lead to delays and extended project schedules.
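The rule that a phase cannot begin until the preceding one is complete amounts to a strict ordering constraint. A minimal sketch, using a hypothetical `WaterfallProject` class and the phase names listed above:

```python
# Phase names follow the waterfall phases described above.
PHASES = [
    "system engineering",
    "requirement analysis",
    "system design",
    "coding and unit testing",
    "integration and system testing",
    "support",
]


class PhaseOrderError(Exception):
    """Raised when a phase is attempted out of sequence."""


class WaterfallProject:
    def __init__(self) -> None:
        self.completed: list[str] = []

    def complete(self, phase: str) -> None:
        # A phase may be completed only after every preceding phase.
        if len(self.completed) == len(PHASES):
            raise PhaseOrderError("all phases are already complete")
        expected = PHASES[len(self.completed)]
        if phase != expected:
            raise PhaseOrderError(f"{phase!r} cannot start until {expected!r} is complete")
        self.completed.append(phase)


project = WaterfallProject()
project.complete("system engineering")
project.complete("requirement analysis")  # requirements are now frozen
try:
    project.complete("integration and system testing")  # tries to skip ahead
except PhaseOrderError as err:
    print(err)  # 'integration and system testing' cannot start until 'system design' is complete
```

The rigidity is deliberate here: once a phase is recorded as complete there is no way to reopen it, which mirrors the difficulty of revisiting frozen requirements during system testing.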

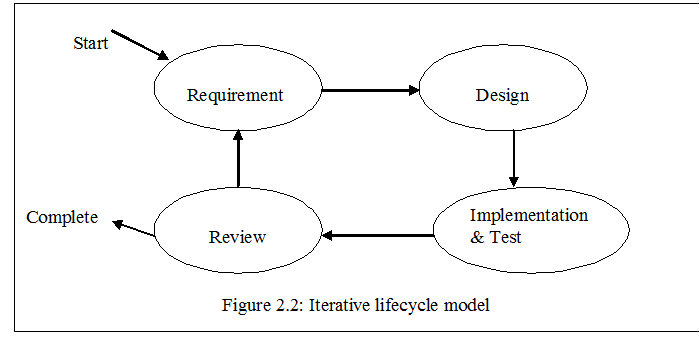

2.3 Incremental or Iterative Development Model

The incremental, or iterative, development model breaks the project into small parts. Each part is subjected to multiple iterations of the waterfall model. At the end of each iteration, a new module is completed or an existing one is improved on, the module is integrated into the structure, and the structure is then tested as a whole.

For example, using the iterative development model, a project can be divided into 12 iterations of one to four weeks each. The system is tested at the end of each iteration, and the test feedback is immediately incorporated at the end of each test cycle. The time required for successive iterations can be reduced based on the experience gained from past iterations. The system grows by adding new functions during the development portion of each iteration. Each cycle tackles a relatively small set of requirements; therefore, testing evolves as the system evolves. In contrast, in a classic waterfall life cycle, each phase (requirement analysis, system design, and so on) occurs once in the development cycle for the entire set of system requirements.
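The iteration structure can be sketched as follows, under heavy simplifying assumptions: requirements are named features, `defective` stands in for the bugs that end-of-iteration testing will reveal, and every reported defect is assumed to be fixed before the feature is reattempted. The `iterate` function and its parameters are hypothetical; the default of 12 iterations mirrors the example in the text.

```python
def iterate(
    backlog: list[str],
    per_iteration: int,
    defective: set[str],
    max_iterations: int = 12,
) -> tuple[list[str], list[tuple[int, list[str], list[str]]]]:
    """Grow a system in small increments, testing the whole system each time."""
    system: list[str] = []
    pending = list(backlog)
    open_defects = set(defective)  # toy stand-in for bugs that testing will reveal
    log: list[tuple[int, list[str], list[str]]] = []
    for n in range(1, max_iterations + 1):
        if not pending:
            break
        # Development: take a small set of requirements and add them to the system.
        increment, pending = pending[:per_iteration], pending[per_iteration:]
        system.extend(increment)
        # Testing: the integrated system is tested as a whole at the end of the iteration.
        failures = [f for f in system if f in open_defects]
        # Corrective action: failing features are fixed and rescheduled first.
        for f in failures:
            system.remove(f)
            open_defects.discard(f)  # assume the feedback leads to a fix
            pending.insert(0, f)
        log.append((n, increment, failures))
    return system, log


system, log = iterate(["login", "search", "export", "reports"], 2, {"search"})
for n, built, failed in log:
    print(f"iteration {n}: built {built}, failed {failed}")
```

With the call shown, `search` fails the end-of-iteration test in iteration 1 and is rebuilt first in iteration 2, so all four features are in place after three iterations.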

The main advantage of the iterative development model is that corrective actions can be taken at the end of each iteration. The corrective actions can be changes to the specification because of incorrect interpretation of the requirements, changes to the requirements themselves, and other design or code-related changes based on the system testing conducted at the end of each cycle.

The main disadvantages of the iterative development model are as follows:

- The communication overhead for the project team is significant, because each iteration involves giving feedback about deliverables, effort, timelines, and so on.

- It is difficult to freeze requirements, and they may continue to change in later iterations because of increasing customer demands. As a result, more iterations may be added to the project, leading to project delays and cost overruns.

- The project requires a very efficient change control mechanism to manage changes made to the system during each iteration.

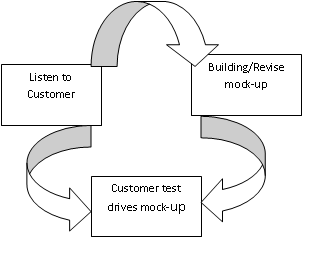

2.4 Prototyping Model

The prototyping model assumes that you do not have clear requirements at the beginning of the project. Often, customers have a vague idea of the requirements in the form of objectives that they want the system to address. With the prototyping model, you build a simplified version of the system and seek feedback from the parties who have a stake in the project. The next iteration incorporates the feedback and improves on the requirements specification. The prototypes that are built during the iterations can be any of the following:

- A simple user interface without any actual data processing logic

- A few subsystems with functionality that is partially or completely implemented

- Existing components that demonstrate the functionality that will be incorporated into the system

The prototyping model consists of the following steps.

- Capture requirements. This step involves collecting the requirements over a period of time as they become available.

- Design the system. After capturing the requirements, a new design is made or an existing one is modified to address the new requirements.

- Create or modify the prototype. A prototype is created or an existing prototype is modified based on the design from the previous step.

- Assess based on feedback. The prototype is sent to the stakeholders for review. Based on their feedback, an impact analysis is conducted for the requirements, the design, and the prototype. The role of testing at this step is to ensure that customer feedback is incorporated in the next version of the prototype.

- Refine the prototype. The prototype is refined based on the impact analysis conducted in the previous step.

- Implement the system. After the requirements are understood, the system is rewritten either from scratch or by reusing the prototypes. The testing effort consists of the following:

  - Ensuring that the system meets the refined requirements

  - Code review

  - Unit testing

  - System testing
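The capture, design, prototype, assess, and refine steps form a loop that ends once stakeholder feedback stops changing the requirements. A minimal sketch, assuming requirements can be represented as a set of feature names and that feedback only adds requirements (it does not model changed or dropped ones); `prototype_until_stable` and the `stakeholder` reviewer are hypothetical:

```python
from typing import Callable


def prototype_until_stable(
    initial: set[str],
    review: Callable[[set[str]], set[str]],
    max_rounds: int = 10,
) -> tuple[set[str], int]:
    """Refine requirements through prototype reviews until feedback adds nothing new."""
    requirements = set(initial)            # capture requirements known so far
    for round_no in range(1, max_rounds + 1):
        prototype = set(requirements)      # toy model: prototype covers current requirements
        feedback = review(prototype)       # stakeholders review the prototype
        new = feedback - requirements      # impact analysis: what changed?
        if not new:
            return requirements, round_no  # understood; implement the real system
        requirements |= new                # refine for the next prototype
    return requirements, max_rounds


def stakeholder(prototype: set[str]) -> set[str]:
    # Hypothetical reviewer who only articulates a need after seeing a prototype.
    follow_ups = {"login": {"password reset"}, "password reset": {"email alerts"}}
    wanted = set(prototype)
    for feature in prototype:
        wanted |= follow_ups.get(feature, set())
    return wanted


final_requirements, rounds = prototype_until_stable({"login"}, stakeholder)
print(rounds, sorted(final_requirements))  # 3 ['email alerts', 'login', 'password reset']
```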

The main advantage of the prototyping model is that it allows you to start with requirements that are not clearly defined.

The main disadvantage of the prototyping model is that it can lead to poorly designed systems. The prototypes are usually built without regard to how they might be used later, so attempts to reuse them may result in inefficient systems. This model emphasizes refining the requirements based on customer feedback, rather than ensuring a better product through quick change based on test feedback.

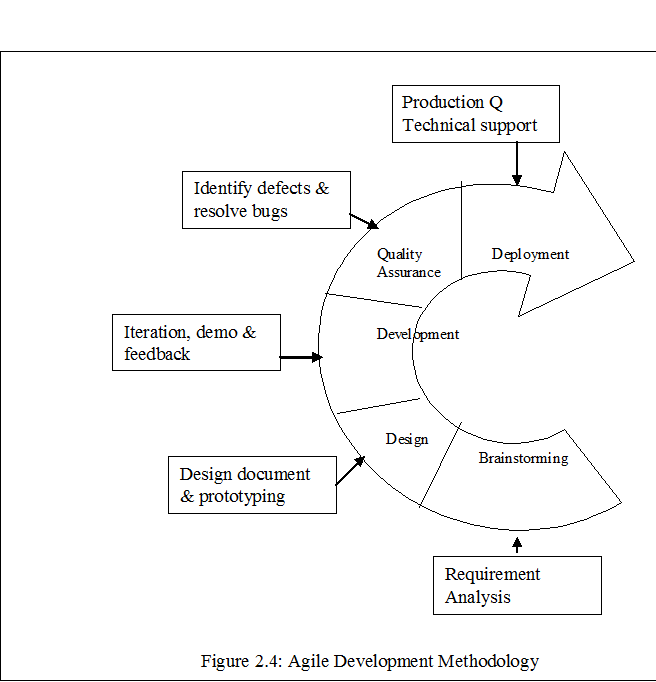

2.5 Agile Methodology

Most software development life cycle methodologies are either iterative or follow a sequential model (as the waterfall model does). As software development becomes more complex, these models cannot efficiently adapt to the continuous and numerous changes that occur. Agile methodology was developed to respond to changes quickly and smoothly. Although the iterative methodologies tend to remove the disadvantages of sequential models, they are still based on the traditional waterfall approach. Agile methodology is a collection of values, principles, and practices that incorporates iterative development, test, and feedback into a new style of development. Its principles include the following:

a) Customer satisfaction by rapid delivery of useful software

b) Welcome changing requirements, even late in development

c) Working software is delivered frequently (weeks rather than months)

d) Working software is the principal measure of progress

e) Sustainable development, able to maintain a constant pace

f) Close, daily co-operation between business people and developers

g) Face-to-face conversation is the best form of communication (co-location)

h) Projects are built around motivated individuals, who should be trusted

i) Continuous attention to technical excellence and good design

j) Simplicity

k) Self-organizing teams

l) Regular adaptation to changing circumstances

The key differences between agile and traditional methodologies are as follows:

- Development is incremental rather than sequential. Software is developed in incremental, rapid cycles. This results in small, incremental releases, with each release building on previous functionality. Each release is thoroughly tested, which ensures that all issues are addressed in the next iteration.

- People and interactions are emphasized, rather than processes and tools. Customers, developers, and testers constantly interact with each other. This interaction ensures that the tester is aware of the requirements for the features being developed during a particular iteration and can easily identify any discrepancy between the system and the requirements.

- Working software is the priority rather than detailed documentation. Agile methodologies rely on face-to-face communication and collaboration, with people working in pairs. Because of the extensive communication with customers and among team members, the project does not need a comprehensive requirements document.

- Customer collaboration is used, rather than contract negotiation. All agile projects include customers as a part of the team. When developers have questions about a requirement, they immediately get clarification from customers.

- Responding to change is emphasized, rather than extensive planning. Extreme Programming does not preclude planning your project. However, it suggests changing the plan to accommodate any changes in assumptions for the plan, rather than stubbornly trying to follow the original plan.

Agile methodology has various derivative approaches, such as Extreme Programming, Dynamic Systems Development Method (DSDM), and Scrum. Extreme Programming is one of the most widely used approaches.

2.6 Extreme Programming

In Extreme Programming, rather than designing the whole system at the start of the project, the team reduces preliminary design work to solving the simple tasks that have already been identified.

The developers communicate directly with customers and other developers to understand the initial requirements. They start with a very simple task and then get feedback by testing their software as soon as it is developed. The system is delivered to the customers as soon as possible, and the requirements are refined or added based on customer feedback. In this way, requirements evolve over a period of time, and developers are able to respond quickly to changes.

The real design effort occurs when the developers write the code to fulfill the specific engineering task. The engineering task is a part of a greater user story (which is similar to a use case). The user story concerns itself with how the overall system solves a particular problem. It represents a part of the functionality of the overall system. A group of user stories is capable of describing the system as a whole. The developers refactor the previous code iteration to establish the design needed to implement the functionality.

During the Extreme Programming development life cycle, developers usually work in pairs. One developer writes the code for a particular feature, and the second developer reviews the code to ensure that it uses simple solutions and adheres to best design principles and coding practices.

Discussion of the core practices of Extreme Programming is beyond the scope of this chapter. For more information, see the links referred to in “More Information” later in this section.

Test-driven development, which is one of the core practices in Extreme Programming, is discussed in greater detail later in this chapter.

When to Use Extreme Programming

Extreme Programming is useful in the following situations:

- When the customer does not have a clear understanding of the details of the new system. The developers interact continuously with the customer, delivering small pieces of the application to the customer for feedback, and taking corrective action as necessary.

- When the technology used to develop the system is new compared to other technologies. Frequent test cycles in Extreme Programming mitigate the risk of incompatibility with other existing systems.

- When you can afford to create automated unit and functional tests. In some situations, you may need to change the system design so that each module can be tested in isolation using automated unit tests.

- When the team size is not very large (usually 2 to 12 people). Extreme Programming is successful in part because it requires close team interaction and working in pairs. A large team would have difficulty in communicating efficiently at a fast pace. However, large teams have used Extreme Programming successfully.

2.7 Test-Driven Development

Test-driven development is one of the core practices of Extreme Programming. The practice extends the feedback approach and requires that you develop test cases before you develop code. Developers develop functionality to pass the existing test cases. The test team then adds new test cases to test the existing functionality and runs the entire test suite to ensure that the code fails (either because the existing functionality needs to be modified or because required functionality is not yet included). The developers then modify the functionality or create new functionality so that the code passes the previously failing test cases. This cycle continues until the code passes all of the test cases that the team can create. The developers then refactor the functional code to remove any duplicate or dead code and make it more maintainable and extensible.

Test-driven development reverses the traditional development process. Instead of writing functional code first and then testing it, the team writes the test code before the functional code. The team does this in very small steps—one test and a small amount of corresponding functional code at a time. The developers do not write code for new functionality until a test fails because some functionality is not present. Only when a test is in place do developers do the work required to ensure that the test cases in the test suite pass. In subsequent iterations, when the team has the updated code and another set of test cases, the code may break several existing tests as well as the new tests. The developers continue to develop or modify the functionality to pass all of the test cases.
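A minimal red, green, refactor illustration using Python's standard `unittest` module. The `shipping_cost` function, its free-shipping rule, and the threshold values are hypothetical. In practice each test is written and run (and seen to fail) before the corresponding code exists; a single listing can only show that order through comments.

```python
import unittest


# Step 1 (red): write the tests first. Run at this point, they would not pass,
# because shipping_cost does not exist yet.
class ShippingCostTest(unittest.TestCase):
    def test_standard_rate_below_threshold(self):
        self.assertEqual(shipping_cost(40.0), 5.0)

    def test_free_shipping_at_threshold(self):
        self.assertEqual(shipping_cost(100.0), 0.0)


# Step 2 (green): write just enough code to make the failing tests pass.
# Step 3 (refactor): tidy up (here, naming the magic numbers) and rerun the tests.
FREE_SHIPPING_THRESHOLD = 100.0
STANDARD_RATE = 5.0


def shipping_cost(order_total: float) -> float:
    """Hypothetical business rule: free shipping at or above the threshold."""
    return 0.0 if order_total >= FREE_SHIPPING_THRESHOLD else STANDARD_RATE


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the test report is printed.
    unittest.main(argv=["tdd-sketch"], exit=False)
```

The next cycle would start the same way: a new test for behavior the rule does not yet cover, a failing run, then the smallest change that makes the whole suite pass again.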

Test-driven development allows you to start with an unclear set of requirements and relies on the feedback loop between the developers and the customers for input on the requirements. The customer or a customer representative is part of the core team and provides immediate feedback about the functionality. This practice allows the requirements to evolve over the course of the project cycle. Writing tests before functional code helps ensure that the functional code addresses all of the requirements without including unnecessary functionality.

With test-driven development, you do not need a well-defined architectural design before beginning the development phase, as you do with traditional development life cycle methodologies. Test-driven development allows you to tackle smaller problems first and then evolve the system as the requirements become clearer later in the project cycle.

Other advantages of test-driven development are as follows:

- Test-driven development promotes loosely coupled and highly cohesive code, because the functionality is evolved in small steps. Each piece of functionality needs to be self-sufficient in terms of the helper classes and the data that it acts on, so that it can be successfully tested in isolation.

- The test suite acts as documentation for the functional specification of the final system.

- The system uses automated tests, which significantly reduce the time taken to retest the existing functionality for each new build of the system.

- When a test fails, you have a clear idea of the tasks that you must perform to resolve the problem. You also have a clear measure of success when the test no longer fails. This increases your confidence that the system actually meets the customer requirements.

Test-driven development helps ensure that your source code is thoroughly unit tested. However, you still need to consider traditional testing techniques, such as functional testing, user acceptance testing, and system integration testing. Much of this testing can also be done early in your project. In fact, in Extreme Programming, the acceptance tests for a user story are specified by the project stakeholder(s) either before or in parallel to the code being written, giving stakeholders the confidence that the system meets their requirements.

2.8 Summary

This chapter compared various software development life cycle approaches and described how testing fits into each approach. The chapter also presented a process for test-driven development, which is one of the core practices of Extreme Programming, an agile development methodology.

By using test-driven development, you can ensure that each feature of an application block is rigorously tested early in the project life cycle. Early testing significantly reduces the risk of costly fixes and delays later in the life cycle and ensures a robust application block that meets all of the feature requirements.

Software Testing

3.1 Introduction:

Software testing, in its simplest sense, is the process of executing a program (or part of a program) with the intention of finding errors. More broadly, it can be defined as an investigation conducted to provide stakeholders with information about the quality of the product or service under test.

Software testing can also be described as the process of verification and validation. Verification answers the question “are we building the product right?”, that is, whether the product correctly implements its specification. Validation answers the question “are we building the right product?”, that is, whether the product is correct in relation to the users’ needs and requirements. Validation must determine whether the customer’s needs have been correctly captured, expressed, and understood, and whether the delivered product reflects those needs and requirements.

A primary purpose of testing is to detect software failures so that the underlying defects can be discovered and corrected. This is a non-trivial pursuit. Testing cannot establish that a product functions properly under all conditions; it can only establish that it does not function properly under specific conditions.

Software testing can be carried out at any point in the development process, and different software development models focus the testing effort at different points. In traditional models, most of the test effort occurs after the requirements have been defined and the coding process has been completed. Newer development models, such as Agile, often use test-driven development and place an increased portion of the testing in the hands of the developer, before the code reaches a formal team of testers.

It is not possible to find all of the defects in a piece of software. Every software product also has a target audience; for example, the audience for banking software is completely different from the audience for video game software. Therefore, when an organization develops or invests in a software product, it must assess whether the product will be acceptable to its target audience. Software testing is the process of attempting to make this assessment.

3.2 Testing Principles:

Before applying methods to design effective test cases, a software engineer must understand the basic principles that guide software testing. A common set of testing principles is described below:

All tests should be traceable to customer requirements. As we have seen, the objective of software testing is to uncover errors. It follows that the most severe defects are those that cause the program to fail to meet its requirements.

Tests should be planned long before testing begins. Test planning can begin as soon as the requirements model is complete, and detailed definition of test cases can begin as soon as the design model has been solidified. Therefore, all tests can be planned and designed before any code has been generated.

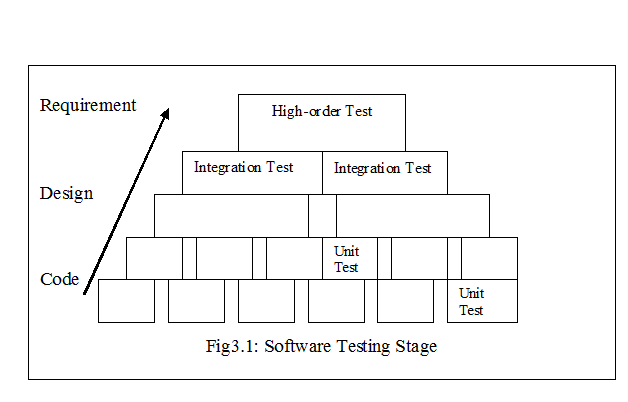

Testing should begin “in the small” and progress toward testing “in the large”. The first tests planned and executed generally focus on individual components. As testing progresses, the focus shifts to finding errors in integrated clusters of components and ultimately in the entire system.

Exhaustive testing is not possible. The number of path permutations for even a moderately sized program is exceptionally large. For this reason, it is impossible to execute every combination of paths during testing. It is possible, however, to adequately cover program logic and to ensure that all conditions in the component-level design have been exercised.
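As a rough illustration of why exhaustive testing is impractical, the sketch below counts the paths through a program made of k independent two-way decisions in sequence. This is a simplifying assumption (real control flow, especially loops, usually yields even more paths), and the rate of one test per millisecond used for the estimate is likewise an assumption. The count is 2^k, so enumeration is feasible only for tiny programs.

```python
from itertools import product


def count_paths(num_decisions: int) -> int:
    """Enumerate every True/False combination of independent decisions.

    Each combination is one distinct path through a program that contains
    `num_decisions` sequential if-statements.
    """
    return sum(1 for _ in product((True, False), repeat=num_decisions))


# Enumeration works only for small k; the count doubles with each decision.
for k in (1, 5, 10, 16):
    assert count_paths(k) == 2 ** k

# For larger programs, compute instead of enumerating: 2**k paths.
# At an assumed rate of one test per millisecond, 40 decisions would need:
paths = 2 ** 40
years = paths / 1000 / 60 / 60 / 24 / 365
print(f"{paths:,} paths, about {years:.0f} years of testing")
```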

To be most effective, testing should be conducted by an independent third party. By “most effective” we mean testing that has the highest probability of finding errors. For reasons introduced earlier, the software engineer who created the system is not the best person to conduct all of the tests for the software.

3.3 Software Testing Methods:

3.3.1 Levels of Testing

Unit Testing:

A unit is the smallest testable part of an application. In procedural programming, a unit may be an individual function or procedure; in an object-oriented environment, it is usually a class. Unit tests verify the functionality of a specific section of code, usually at the function level.

The primary goal of unit testing is to take the smallest piece of testable software in the application, isolate it from the remainder of the code, and determine whether it behaves exactly as you expect. Each unit is tested separately before integrating them into modules to test the interfaces between modules. Unit testing has proven its value in that a large percentage of defects are identified during its use.
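Isolating a unit from the rest of the code can be sketched with Python's standard `unittest.mock`. The unit `order_total` and its collaborator `tax_service` with a `rate_for` method are hypothetical; the mock replaces the real collaborator, so the test exercises only the unit and can also check how the unit used its dependency.

```python
from unittest.mock import Mock


def order_total(subtotal: float, region: str, tax_service) -> float:
    """Unit under test (hypothetical): adds tax looked up from a collaborator."""
    rate = tax_service.rate_for(region)
    return round(subtotal * (1 + rate), 2)


def test_order_total_adds_regional_tax():
    # A mock stands in for the real tax service, so no network, database or
    # other module takes part in the test.
    tax_service = Mock()
    tax_service.rate_for.return_value = 0.25

    assert order_total(100.0, "XX", tax_service) == 125.0
    # The mock also records how the unit used its collaborator.
    tax_service.rate_for.assert_called_once_with("XX")


if __name__ == "__main__":
    test_order_total_adds_regional_tax()
    print("unit test passed")
```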

Integration Testing:

Integration testing is a logical extension of unit testing. In its simplest form, two units that have already been tested are combined into a component and the interface between them is tested. A component, in this sense, refers to an integrated aggregate of more than one unit. In a realistic scenario, many units are combined into components, which are in turn aggregated into even larger parts of the program. The idea is to test combinations of pieces and eventually expand the process to test your modules with those of other groups. Eventually all the modules making up a process are tested together. Beyond that, if the program is composed of more than one process, they should be tested in pairs rather than all at once.

Integration testing identifies problems that occur when units are combined. By using a test plan that requires you to test each unit and ensure the viability of each before combining units, you know that any errors discovered when combining units are likely related to the interface between units. This method reduces the number of possibilities to a far simpler level of analysis.

Integration testing can be done in a variety of ways, but the following are three common strategies:

Top-down approach:

The top-down approach to integration testing requires the highest-level modules to be tested and integrated first. This allows high-level logic and data flow to be tested early in the process, and it tends to minimize the need for drivers. However, the need for stubs complicates test management, and low-level utilities are tested relatively late in the development cycle. Another disadvantage of top-down integration testing is its poor support for early release of limited functionality.
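In the top-down approach, a stub takes the place of a lower-level module that has not been written or integrated yet. A minimal sketch, with hypothetical names: the high-level `generate_report` receives its data-access dependency as a parameter, so a stub returning fixed data (`fetch_sales_stub`) can stand in for the real module during integration.

```python
from typing import Callable, List


def generate_report(fetch_sales: Callable[[str], List[float]], month: str) -> str:
    """High-level module under test: formats a summary of monthly sales."""
    sales = fetch_sales(month)
    return f"{month}: {len(sales)} sales, total {sum(sales):.2f}"


def fetch_sales_stub(month: str) -> List[float]:
    """Stub for the unfinished low-level data-access module.

    It returns fixed, predictable data so that the high-level logic and data
    flow can be integrated and tested before the real module exists.
    """
    return [10.0, 20.5, 4.5]


def test_generate_report_with_stub():
    assert generate_report(fetch_sales_stub, "2024-01") == "2024-01: 3 sales, total 35.00"


if __name__ == "__main__":
    test_generate_report_with_stub()
    print("top-down integration test passed")
```

When the real data-access module is ready, it replaces the stub and the same test is rerun.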

Bottom-up approach:

The bottom-up approach requires the lowest-level units be tested and integrated first. These units are frequently referred to as utility modules. By using this approach, utility modules are tested early in the development process and the need for stubs is minimized. The downside, however, is that the need for drivers complicates test management and high-level logic and data flow are tested late. Like the top-down approach, the bottom-up approach also provides poor support for early release of limited functionality.
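In the bottom-up approach the roles are reversed: a driver takes the place of the higher-level modules that will eventually call a utility module. A minimal sketch with a hypothetical utility `parse_amount` and a hand-written driver that feeds it inputs and checks the results:

```python
def parse_amount(text: str) -> int:
    """Low-level utility module (hypothetical): converts a non-negative
    decimal string with up to two fractional digits, e.g. '12.34', to cents."""
    whole, _, frac = text.strip().partition(".")
    return int(whole) * 100 + int((frac + "00")[:2])


def driver() -> int:
    """Driver standing in for the not-yet-written callers of parse_amount.

    It supplies test inputs, compares each result with the expected value,
    and returns the number of cases run.
    """
    cases = {"12.34": 1234, "7": 700, " 0.5 ": 50, "3.05": 305}
    for text, expected in cases.items():
        actual = parse_amount(text)
        assert actual == expected, f"{text!r}: expected {expected}, got {actual}"
    return len(cases)


if __name__ == "__main__":
    print(f"driver ran {driver()} cases")
```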

Umbrella approach:

The third approach, sometimes referred to as the umbrella approach, requires testing along functional data and control-flow paths. First, the inputs for functions are integrated in the bottom-up pattern discussed above. The outputs for each function are then integrated in the top-down manner. The primary advantage of this approach is the degree of support for early release of limited functionality. It also helps minimize the need for stubs and drivers. The potential weaknesses of this approach are significant, however, in that it can be less systematic than the other two approaches, leading to the need for more regression testing.

System Testing:

System testing is the level at which the software is integrated into the overall product and tested to show that all requirements are met. System testing falls within black-box testing, as it does not require knowledge of the internal design of the code or logic.

System integration testing verifies that a system is correctly integrated with any external or third-party systems defined in the system requirements.

As a rule, system testing takes, as its input, all of the “integrated” software components that have successfully passed integration testing and also the software system itself integrated with any applicable hardware system(s). The purpose of integration testing is to detect any inconsistencies between the software units that are integrated together. System testing is a more limited type of testing; it seeks to detect defects both within the “inter-assemblages” and also within the system as a whole.

System testing is performed on the entire system in the context of a functional requirement specification and/or system requirement specification. System testing tests not only the design, but also the behavior and even the believed expectations of the customer. It is also intended to test up to and beyond the bounds defined in the software/hardware requirements specification(s).

The following types of tests should be conducted during system testing:

a) Performance testing

b) Load testing

c) Usability testing

d) Compatibility testing

e) Graphical user interface testing

f) Security testing

g) Stress testing

h) Volume testing

i) Regression testing

j) Reliability testing

3.4 Software Testing Strategies:

3.4.1 Black-Box Testing

Black-box testing, also known as functional testing, ignores the internal mechanism of a system and focuses on the output generated in response to selected inputs and execution conditions. Black-box testing methods are widely used in system-level testing.

The primary goal of black-box testing is to assess whether the software does what it is supposed to do. In other words, does the behaviour of the software conform to the requirement specification? Black-box testing can be performed at different levels, such as unit testing, integration testing, system testing, and acceptance testing, but each level has different requirements and objectives. For instance, black-box testing at the integration level mainly focuses on the functional behaviour of interacting modules, while black-box testing at the system level focuses on both functional and non-functional requirements.

Black-box testing has been widely used for a long time and different approaches can be used for different situations. Basically the techniques could be classified into three groups:

I. Usage-based black-box testing techniques, such as random testing or statistical testing

II. Error-based black-box testing techniques, such as equivalence partitioning, boundary value analysis, and decision-table-based testing

III. Fault-based black-box testing techniques, which focus on detecting faults in software.

3.4.2 Black-box testing techniques:

Random testing:

Random testing is a strategy in which test cases are selected randomly from the entire input domain. The values of each test case are generated randomly, but very often the overall distribution of the test cases has to conform to the distribution of the input domain or to an operational profile. For example, for an ATM system we know that 80% of transactions are withdrawals, 15% are deposits, and the other 5% are transfers. Random test case selection then has to conform to that distribution.
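Generating random test cases that follow the ATM operational profile above can be sketched with `random.choices`, which draws values according to given weights. The amount range of 1 to 500 and the function name `generate_test_cases` are hypothetical; a fixed seed is used so that a failing run can be reproduced.

```python
import random
from collections import Counter

# Operational profile from the ATM example: transaction type -> probability.
PROFILE = {"withdrawal": 0.80, "deposit": 0.15, "transfer": 0.05}


def generate_test_cases(n: int, seed: int = 42):
    """Randomly generate n (transaction type, amount) test cases whose types
    follow PROFILE. The amount range 1..500 is an assumption."""
    rng = random.Random(seed)  # fixed seed makes failing runs reproducible
    kinds = rng.choices(list(PROFILE), weights=list(PROFILE.values()), k=n)
    return [(kind, rng.randint(1, 500)) for kind in kinds]


if __name__ == "__main__":
    cases = generate_test_cases(10_000)
    counts = Counter(kind for kind, _ in cases)
    for kind, share in PROFILE.items():
        print(f"{kind:10s} expected {share:.0%}, generated {counts[kind] / len(cases):.1%}")
```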

Equivalence testing:

Equivalence partitioning divides all possible inputs into a finite number of classes (partitions), called input equivalence classes. Because a program is assumed to behave analogously for all inputs in the same class, testing one representative value from each class is sufficient to detect defects: if the representative detects a defect, the other members of the class are assumed to detect the same defect.

If input is of type integer or real, then its domain is divided into

- One valid equivalence class, with values within the allowed range

- Two invalid equivalence classes, one with values greater than the maximum value and one with values smaller than the minimum value.

If the input value is of type Boolean, we have two classes, one valid and one invalid.

For an input n whose valid range is 1 to 10, the classes are: n < 1, 1 <= n <= 10, and n > 10.

For an enumeration {A, B, C}, the classes are: A, B, C, and any value not in {A, B, C}.
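For the 1 to 10 range example above, equivalence partitioning needs only three test cases, one per class. A minimal sketch, where `accepts` is a hypothetical unit under test and the representative values -5, 5 and 50 are arbitrary picks from each class:

```python
def equivalence_class(n: int, low: int = 1, high: int = 10) -> str:
    """Name the equivalence class of n for the valid range low..high."""
    if n < low:
        return "invalid (below range)"
    if n > high:
        return "invalid (above range)"
    return "valid"


def accepts(n: int) -> bool:
    """Hypothetical unit under test: should accept exactly 1..10."""
    return 1 <= n <= 10


# One representative value per class, with the expected outcome.
REPRESENTATIVES = {-5: False, 5: True, 50: False}


def test_one_value_per_class():
    # The representatives must cover three distinct classes.
    assert len({equivalence_class(n) for n in REPRESENTATIVES}) == 3
    for n, expected in REPRESENTATIVES.items():
        assert accepts(n) == expected, f"n={n} in class {equivalence_class(n)}"


if __name__ == "__main__":
    test_one_value_per_class()
    print("3 test cases cover all 3 equivalence classes")
```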

Effectiveness

- Reduces the number of test cases that must be run, thus reduces the cost

- Replaces fuzzy, inefficient criteria for selecting test data with a systematic one

- Helps identify the different classes for which the program is not working properly

- Partition analysis can detect missing-path errors, incorrect relational operators in condition statements, domain errors, and computational errors.

- Can be applied during the unit, integration, and system testing phases

Boundary Value analysis

Boundary value analysis is an extension of equivalence partitioning. It is assumed that most of the errors for a system occur at the boundary values of the classes.

The system is checked for its correctness at these boundary values by applying the input values as follows:

- Choose one arbitrary value “n” in each equivalence class

- Choose values exactly on the lower and upper boundaries of each equivalence class

- Choose values immediately below and above each boundary (if applicable)

Boundary values for a numeric input n could be:

Class n < 0, arbitrary value: n = -10

Class n > 0, arbitrary value: n = 100

Classes n < 0, n > 0, on boundary: n = 0

Classes n < 0, n > 0, below and above: n = -1, n = 1
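The selection rules above can be sketched for a valid integer range low..high. The function name `boundary_values` is hypothetical, and the midpoint is used as the "arbitrary" interior value only for convenience.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Boundary value analysis inputs for a valid integer range low..high.

    For each boundary, take the value on it and the values immediately
    below and above it, then add one arbitrary interior value (the midpoint).
    """
    values = {low - 1, low, low + 1, high - 1, high, high + 1, (low + high) // 2}
    return sorted(values)


if __name__ == "__main__":
    print(boundary_values(1, 10))  # [0, 1, 2, 5, 9, 10, 11]
```

The set removes duplicates, which matters for very narrow ranges where, for example, `low + 1` and `high - 1` coincide.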

Effectiveness

This technique is applicable during unit and integration test phases.

Extreme input testing

This determines whether the system can work under extreme conditions. The system's operation is checked by simulating extreme conditions, such as applying:

- Only minimum values, or the absence of certain input values

- Only maximum values

- Combinations of these minimum and maximum values

State transition testing

The state transition testing technique uses a state model to derive test cases. Each test case covers a transition, with details of the beginning state and the next (end) state, the events that cause the transition, and the actions that may result from it.

Design

Test cases are derived from the transitions between states and can exercise any number of such transitions, as defined by the state model. Typically, the following details are captured in each test case:

Starting state of the component, inputs to the component, expected results of the transition, expected final state

There are two categories of test cases: a) those that exercise valid transitions between states, and b) those that attempt unspecified (invalid) transitions. Unspecified transitions can be identified from a state table, which lists the events and starting states and tabulates the next state for each combination.
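Both categories of test case can be derived mechanically from a state table. The sketch below uses a hypothetical turnstile with three states and four events: every entry in the table yields a valid-transition test (starting state, input, expected final state), and every (state, event) pair missing from the table yields a test that the transition is rejected and the state is left unchanged.

```python
# Hypothetical state table for a turnstile: (starting state, event) -> next state.
# Any (state, event) pair that is not listed is an unspecified transition.
STATE_TABLE = {
    ("locked", "coin"): "unlocked",
    ("unlocked", "push"): "locked",
    ("locked", "fault"): "broken",
    ("unlocked", "fault"): "broken",
    ("broken", "repair"): "locked",
}
STATES = {"locked", "unlocked", "broken"}
EVENTS = {"coin", "push", "fault", "repair"}


class InvalidTransition(Exception):
    """Raised when an event is not allowed in the current state."""


class Turnstile:
    """Component under test: follows STATE_TABLE and rejects anything else."""

    def __init__(self, state: str = "locked") -> None:
        self.state = state

    def handle(self, event: str) -> str:
        key = (self.state, event)
        if key not in STATE_TABLE:
            raise InvalidTransition(f"{event!r} is not allowed in state {self.state!r}")
        self.state = STATE_TABLE[key]
        return self.state


def test_valid_transitions() -> None:
    # Each case: starting state, input event, expected final state.
    for (start, event), expected in STATE_TABLE.items():
        assert Turnstile(start).handle(event) == expected


def test_unspecified_transitions() -> None:
    # Every pair missing from the table must be rejected without a state change.
    for start in STATES:
        for event in EVENTS - {e for (s, e) in STATE_TABLE if s == start}:
            t = Turnstile(start)
            try:
                t.handle(event)
            except InvalidTransition:
                assert t.state == start
            else:
                raise AssertionError(f"{start} --{event}--> should be rejected")


if __name__ == "__main__":
    test_valid_transitions()
    test_unspecified_transitions()
    print("5 valid and 7 unspecified transitions checked")
```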

3.4.2 White-box testing:

According to IEEE standards, white-box testing, also known as structural testing or glass-box testing, is a test case design method that uses the control structure of the procedural design to derive test cases. Using white-box testing methods, software engineers can derive test cases that 1) guarantee that all independent paths within a module have been exercised at least once, 2) exercise all logical decisions on their true and false sides, 3) execute all loops at their boundaries and within their operational bounds, and 4) exercise internal data structures to ensure their validity.

Statement Coverage

In this type of testing the code is executed in such a manner that every statement of the application is executed at least once. It helps in assuring that all the statements execute without any side effect.

Branch Coverage

Most applications are not written as straight-line code; at some point the code must branch in order to perform a particular function. Branch coverage testing validates all of the branches in the code, taking the true and false outcomes of each decision at least once, and makes sure that no branch leads to abnormal behavior of the application.
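The difference between statement and branch coverage shows up with an `if` that has no `else`. In this sketch, `apply_discount` is a hypothetical unit: suite A executes every statement, yet never takes the false outcome of the decision; suite B adds that case and so reaches full branch coverage.

```python
def apply_discount(price: float, is_member: bool) -> float:
    """Hypothetical unit: members get 10% off."""
    if is_member:
        price = round(price * 0.9, 2)
    return price


def suite_a() -> None:
    # Executes every statement (100% statement coverage), but the decision
    # `if is_member` is only ever True, so its False branch is never taken.
    assert apply_discount(100.0, True) == 90.0


def suite_b() -> None:
    # Adds the False outcome: both branches are now exercised.
    suite_a()
    assert apply_discount(100.0, False) == 100.0


if __name__ == "__main__":
    suite_a()
    suite_b()
    print("both suites pass")
```

A defect that only appears when the condition is false would go unnoticed by suite A even though it reports full statement coverage. Tools such as coverage.py can report branch coverage when run with the `--branch` option.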

Security Testing

Security testing is carried out to find out how well the system can protect itself from unauthorized access, hacking, cracking, damage to the application's code, and similar threats. This type of testing requires sophisticated testing techniques.

Mutation Testing

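Mutation testing deliberately introduces small changes (mutants) into the code, such as replacing one relational operator with another, and then runs the existing test suite against each mutant. A mutant that causes at least one test to fail is “killed”; a mutant that passes every test reveals a weakness in the test suite. The proportion of killed mutants is therefore a measure of how effective the tests are at detecting faults.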
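Mutation tools generate mutants automatically; the hand-rolled sketch below only illustrates the idea. The function `is_adult` and the three mutants are hypothetical. A suite that tests only typical values lets two mutants survive, while adding boundary tests kills all of them.

```python
from typing import Callable, Dict

Predicate = Callable[[int], bool]


def is_adult(age: int) -> bool:
    """Original code under test (hypothetical): adults are 18 or older."""
    return age >= 18


# Hand-made mutants: each differs from the original by one small change.
MUTANTS: Dict[str, Predicate] = {
    ">= replaced by >": lambda age: age > 18,
    "18 replaced by 17": lambda age: age >= 17,
    ">= replaced by <=": lambda age: age <= 18,
}


def weak_suite(f: Predicate) -> bool:
    """True if f passes every test; only typical values are tested."""
    return f(30) is True and f(5) is False


def strong_suite(f: Predicate) -> bool:
    """Adds boundary tests at 17 and 18."""
    return weak_suite(f) and f(18) is True and f(17) is False


def mutation_score(suite: Callable[[Predicate], bool]) -> float:
    """Fraction of mutants killed (made to fail) by the suite."""
    assert suite(is_adult), "the suite must pass on the original code"
    killed = [name for name, mutant in MUTANTS.items() if not suite(mutant)]
    return len(killed) / len(MUTANTS)


if __name__ == "__main__":
    print(f"weak suite:   {mutation_score(weak_suite):.2f}")    # 0.33
    print(f"strong suite: {mutation_score(strong_suite):.2f}")  # 1.00
```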

3.4.3 Alpha Testing:

The alpha test is conducted at the developer’s site by a customer. The software is used in a natural setting with the developer looking over the shoulder of the user and recording errors and usage problems. Alpha tests are conducted in a controlled environment.

3.4.4 Beta Testing:

The beta test is conducted at one or more customer sites by the end users of the software. The developer is generally not present. Therefore, the beta test is a live application of the software in an environment that cannot be controlled by the developer. The customer records all problems (real or imagined) that are encountered during beta testing and reports these to the developer at regular intervals. As a result of problems reported during beta tests, software engineers make modifications and then prepare for release of the software product to the entire customer base.

3.4.5. Regression testing:

Regression testing is any type of software testing that seeks to uncover software errors after changes to the program (e.g. bugfixes or new functionality) have been made, by retesting the program. The intent of regression testing is to assure that a change, such as a bug fix, did not introduce new bugs. Regression testing can be used to test the system efficiently by systematically selecting the appropriate minimum suite of tests needed to adequately cover the affected change. Common methods of regression testing include rerunning previously run tests and checking whether program behavior has changed and whether previously fixed faults have re-emerged. “One of the main reasons for regression testing is that it’s often extremely difficult for a programmer to figure out how a change in one part of the software will echo in other parts of the software.” This is done by comparing results of previous tests to results of the current tests being run.
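The comparison of previous and current results can be sketched as follows. The shipping-fee functions are hypothetical: version 2 is meant only to make an order total of exactly 100 free, but the fee was also changed by mistake. Rerunning the same inputs against both versions and diffing the results exposes every behavioral change, so the tester can confirm the intended ones and flag the rest.

```python
from typing import Callable, Dict, Iterable, List


def record_results(func: Callable[[int], int], inputs: Iterable[int]) -> Dict[int, int]:
    """Run the regression inputs through one version of the code."""
    return {x: func(x) for x in inputs}


def find_changes(baseline: Dict[int, int], current: Dict[int, int]) -> List[str]:
    """List every input whose result differs from the baseline run."""
    return [f"input {x}: was {baseline[x]}, now {current.get(x)}"
            for x in baseline if current.get(x) != baseline[x]]


# Hypothetical shipping-fee rule. Version 1 charges 5 unless total > 100.
def fee_v1(total: int) -> int:
    return 0 if total > 100 else 5


# Version 2 should only make a total of exactly 100 free, but the fee was
# also changed to 4 by mistake.
def fee_v2(total: int) -> int:
    return 0 if total >= 100 else 4


if __name__ == "__main__":
    inputs = [10, 50, 100, 150]
    baseline = record_results(fee_v1, inputs)  # results of the previous test run
    current = record_results(fee_v2, inputs)   # results after the change
    for line in find_changes(baseline, current):
        print(line)
```

Only the change at input 100 was intended; the changes reported for inputs 10 and 50 are regressions.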

3.4.6. Automated testing:

Software test automation refers to the activities and efforts that intend to automate engineering tasks and operations in a software test process using well defined strategies and systematic solutions. The major objectives of test automation are:

- To free engineers from tedious and redundant manual testing operations,

- To speed up a software testing process, and to reduce software testing cost and time during a software life cycle;

- To increase the quality and effectiveness of a software test process by achieving predefined adequate test criteria in a limited schedule.

There are various ways to achieve software test automation, and each organization may have its own focus and concerns. Three types of software test automation can be distinguished: enterprise-oriented, product-oriented, and project-oriented test automation.

Software Testing Life Cycle

4.1 Introduction to Software Testing Life Cycle

The software testing life cycle (STLC) consists of the various stages of testing that a software product goes through, and describes the testing activities carried out on the product at each stage.

Every organization has to test each of its products, but the way testing is conducted differs from one organization to another. This sequence of testing activities is referred to as the life cycle of the testing process. It is advisable to start testing in the initial phases of the Software Development Life Cycle (SDLC) to avoid complications later.

4.2 Software Testing Life Cycle Phases:

Software testing has its own life cycle, which intersects with every stage of the SDLC. The software testing life cycle has the following phases:

a) Requirement Stage

b) Test Planning

c) Test Analysis

d) Test Design

e) Test Verification and construction

f) Test Execution

g) Result Analysis

h) Bug Tracking

i) Reporting and Rework

j) Final Testing and Implementation

k) Post Implementation

Requirement Stage

This is the initial stage of the life cycle, in which the developers take part in analyzing the requirements for designing a product. Testers can also be involved, as they can think from the users’ point of view in ways the developers may not. Thus, a panel of developers, testers, and users can be formed. Formal meetings of the panel can be held to document the requirements discussed, which can then be used as the software requirements specification (SRS).

Test Planning

Test planning means determining a plan well in advance in order to reduce later risks. Without a good plan, no work, whether software-related or routine, is likely to succeed. A test plan document plays an important role in achieving a process-oriented approach. Once the requirements of the project are confirmed, a test plan is documented. The structure of the test plan is as follows:

a) Introduction: This describes the objective of the test plan.

b) Test items: The documents referred to in preparing this plan, such as the SRS and the project plan, are listed here.

c) Features to be tested: This describes the coverage area of the test plan, i.e. the list of features that are to be tested that are based on the implicit and explicit requirements from the customer.

d) Features not to be tested: Features that can be skipped during the testing phase are listed here. These include features that are out of the scope of testing, such as incomplete modules, or features of low severity, e.g. GUI features that do not hamper further processing.

e) Approach: This is the test strategy, which should be appropriate to the level of the plan and consistent with the higher and lower levels of the plan.

f) Item pass/fail criteria: The criteria used to decide whether each test item has passed or failed, including what constitutes a show-stopper issue.

g) Suspension criteria and resumption requirements: The suspension criterion specifies the criterion that is to be used to suspend all or a portion of the testing activities, whereas resumption criterion specifies when testing can resume with the suspended portion.

h) Test deliverable: This includes a list of documents, reports, charts that are required to be presented to the stakeholders on a regular basis during testing and when testing is completed.

i) Testing tasks: The tasks required for testing are listed here. This avoids confusion about whether defects should be reported against functionality planned for the future, and it helps users and testers avoid testing incomplete functions and wasting resources.

j) Environmental needs: Any special requirements of the test plan that depend on the environment in which the application is to be tested are listed here.

k) Responsibilities: This section assigns responsibilities, identifying the person who can be held responsible in case of a risk.

l) Staffing and training needs: Training on the application/system and training on the testing tools to be used needs to be given to the staff members who are responsible for the application.

m) Risks and contingencies: This identifies the probable risks and events that can occur, and what can be done in each situation.

n) Approval: This specifies who can approve the process as complete and allow the project to proceed to the next level, which depends on the level of the plan.

Test Analysis

Once the test plan documentation is done, the next stage is to analyze what types of software testing should be carried out at the various stages of SDLC.

Test Design

Test design is based on the requirements of the project documented in the SRS. This phase decides whether manual or automated testing is to be done. For automated testing, the different paths to be tested are identified first, and scripts are written if required. An end-to-end checklist that covers all of the features of the project is also needed.

Test Verification and Construction

In this phase, the test plans, the test design, and the automated test scripts are completed. Stress and performance testing plans are also completed at this stage. When the development team finishes a unit of code, the testing team helps them test that unit and reports any bugs found. Integration testing and bug reporting are done in this phase of the software testing life cycle.

Test Execution

Planning and execution of the various test cases are done in this phase. Once unit testing is completed, functional testing is carried out. First, top-level testing is done to find top-level failures, and bugs are reported immediately to the development team so that the required workaround can be provided. Test reports have to be documented properly, and bugs have to be reported to the development team.

Result Analysis

Once the development team has fixed a bug, the testing team retests it: the test case is executed again, the actual values are compared with the expected values, and the result is declared as pass or fail.

Bug Tracking

This is one of the important stages, because the Defect Profile Document (DPD) has to be updated to let the developers know about each defect. The Defect Profile Document contains the following:

a) Defect Id: Unique identification of the Defect.

b) Test Case Id: Test case identification for that defect.

c) Description: Detailed description of the bug.

d) Summary: This field contains some keyword information about the bug, which can help in minimizing the number of records to be searched.

e) Defect Submitted By: Name of the tester who detected/reported the bug.

f) Date of Submission: Date at which the bug was detected and reported.

g) Build No.: The number of the build in which the bug was detected.

h) Version No.: The version information of the software application in which the bug was detected and fixed.

i) Assigned To: Name of the developer who is supposed to fix the bug.

j) Severity: Degree of severity of the defect.

k) Priority: Priority of fixing the bug.

l) Status: This field displays current status of the bug.

A well-written bug report explains all of the items listed above.
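A defect profile entry maps naturally onto a record type. The sketch below is one possible representation, not a prescribed format: the class names, the severity scale, the status values, and the convention that priority 1 means "fix first" are all assumptions, and real projects usually keep these records in a bug-tracking tool.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Iterable, List


class Severity(Enum):      # hypothetical scale; each organization defines its own
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3


class Status(Enum):        # hypothetical workflow states
    NEW = "new"
    ASSIGNED = "assigned"
    FIXED = "fixed"
    CLOSED = "closed"


@dataclass
class Defect:
    """One entry of a defect profile, with the fields listed above."""
    defect_id: str
    test_case_id: str
    description: str
    summary: str
    submitted_by: str
    date_submitted: date
    build_no: str
    version_no: str
    assigned_to: str
    severity: Severity
    priority: int                 # assumption: 1 = fix first
    status: Status = Status.NEW


def open_defects_by_priority(defects: Iterable[Defect]) -> List[Defect]:
    """Defects that are not yet closed, highest priority first."""
    return sorted((d for d in defects if d.status is not Status.CLOSED),
                  key=lambda d: d.priority)


if __name__ == "__main__":
    bug = Defect("D-101", "TC-17", "Login accepts an empty password",
                 "login, empty password", "tester1", date(2024, 1, 15),
                 "B42", "1.3.0", "dev1", Severity.CRITICAL, priority=1)
    print(open_defects_by_priority([bug])[0].defect_id)
```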

Reporting and Rework

Testing is an iterative process. Once a bug has been reported and the development team has fixed it, the fix has to go through the testing process again to ensure that the bug is resolved, and regression testing has to be done. Once the Quality Analyst confirms that the product is ready, the software is released for production. Before release, the software has to undergo one more round of top-level testing. Thus, testing is an ongoing process.

Final Testing and Implementation

This phase focuses on the remaining levels of testing, such as acceptance, load, stress, performance, and recovery testing. The application needs to be verified under specified conditions with respect to the SRS. Various documents are updated, and the different testing metrics are completed at this stage of the software testing life cycle.

Post Implementation

Once the tests are evaluated, the recording of errors that occurred during various levels of the software testing life cycle is done. Creating plans for improvement and enhancement is an ongoing process. This helps to prevent similar problems from occurring in the future projects. In short, planning for improvement of the testing process for future applications is done in this phase.

Performance Testing

5.1 Introduction

In software engineering, performance testing is testing performed to determine how fast some aspect of a system performs under a particular workload. It can also serve to validate and verify other quality attributes of the system, such as scalability, reliability, and resource usage.

An empirical technical investigation conducted to provide stakeholders with information about the quality of the product or service under test with regard to speed, scalability and/or stability characteristics. – Scott Barber.

Performance testing is the process of determining the speed or effectiveness of a computer, network, software program or device. This process can involve quantitative tests done in a lab, such as measuring the response time or the number of MIPS (millions of instructions per second) at which a system functions. Qualitative attributes such as reliability, scalability and interoperability may also be evaluated. Performance testing is often done in conjunction with stress testing.

Performance testing can verify that a system meets the specifications claimed by its manufacturer or vendor. The process can compare two or more devices or programs in terms of parameters such as speed, data transfer rate, bandwidth, throughput, efficiency and reliability.

Performance testing can also be used as a diagnostic tool to find communication bottlenecks. A system's performance can be limited by a single problem or a single component. For example, even a fast computer will perform poorly on today’s web if its connection runs at only 30 to 40 Kbps.

Slow data transfer may be hardware-related, but it can also stem from software-related problems, such as a corrupted file in a web browser, a security exploit, or too many applications running at the same time. An effective performance test can quickly identify the nature of a software-related performance problem.

During a performance testing and evaluation process, test engineers must identify the major focus areas and prioritize them within a limited budget and schedule. The major focus areas of software performance testing are as follows:

Processing speed, latency and response time: Checking the speed of a software system’s functional tasks is always a focus of performance testing and evaluation. Typical examples are checking the processing speed of an e-mail server and measuring the processing speed of different types of transactions in a banking system. Latency measures the delay in transferring messages and in processing events and transactions.
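As a minimal sketch (not a prescribed tool), response time can be measured by timing each call to a task with Python’s `time.perf_counter`. The names `measure_response_times` and `process_transaction` are hypothetical, and the “transaction” is simulated with a 5 ms sleep rather than a real banking system:

```python
import time
from typing import Callable, List


def measure_response_times(task: Callable[[], object], runs: int) -> List[float]:
    """Call `task` `runs` times; return each call's wall-clock duration in milliseconds."""
    durations_ms: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()  # high-resolution, monotonic clock
        task()
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    return durations_ms


def process_transaction() -> None:
    """Hypothetical stand-in for one bank transaction (simulated work)."""
    time.sleep(0.005)  # about 5 ms of "processing"


if __name__ == "__main__":
    samples = measure_response_times(process_transaction, runs=20)
    print(f"fastest {min(samples):.1f} ms, slowest {max(samples):.1f} ms")
```

Timing from the caller’s side captures the full response time a client sees; the latency of individual stages (queueing, network transfer) would need timestamps inside the system.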

Throughput: The amount of work a computer can perform within a given time. It reflects internal processing speed, peripheral (I/O) speed, and the efficiency of the operating system, other system software and the application working together.
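Throughput can be estimated by counting how many units of work complete within a fixed time window. The sketch below assumes a single caller issuing requests back to back; `measure_throughput` and `handle_request` are hypothetical names:

```python
import time
from typing import Callable


def measure_throughput(task: Callable[[], object], duration_s: float) -> float:
    """Run `task` back to back for about `duration_s` seconds; return completed operations per second."""
    completed = 0
    start = time.perf_counter()
    deadline = start + duration_s
    while time.perf_counter() < deadline:
        task()
        completed += 1
    elapsed = time.perf_counter() - start  # may overshoot the deadline by at most one task
    return completed / elapsed


def handle_request() -> None:
    """Hypothetical unit of work; replace with a real request or transaction."""
    sum(range(1_000))


if __name__ == "__main__":
    print(f"{measure_throughput(handle_request, duration_s=0.5):.0f} operations/s")
```

Because only one caller runs, this measures serial throughput; the throughput of a server under concurrent load is usually measured with many simulated users instead.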

Reliability: Since reliability is one of the most important product quality factors, it must be validated and measured before a product is released. Reliability evaluation focuses on measuring how reliably a system or its components deliver functional services over a given time period. Most defense and safety-critical systems have strict reliability requirements.

Availability: System availability analysis helps engineers understand the availability of a system’s hardware and software parts, such as the network, computer hardware and software, application functions and servers.
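Availability is commonly quantified with the steady-state formula MTBF / (MTBF + MTTR) (mean time between failures, mean time to repair), or directly as the share of an observation period during which the service was up. Neither formula comes from this text; the functions and figures below are a hypothetical sketch:

```python
from typing import Sequence


def availability_from_mtbf(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: MTBF / (MTBF + MTTR)."""
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


def observed_availability(period_hours: float, outage_hours: Sequence[float]) -> float:
    """Share of an observation period during which the service was up."""
    downtime = sum(outage_hours)
    if period_hours <= 0 or not 0 <= downtime <= period_hours:
        raise ValueError("downtime must lie between 0 and a positive observation period")
    return (period_hours - downtime) / period_hours


if __name__ == "__main__":
    # Hypothetical component: fails on average every 999 h and takes 1 h to repair.
    print(availability_from_mtbf(999, 1))  # 0.999, i.e. "three nines"
    # Hypothetical 30-day month (720 h) with two outages totalling 0.72 h.
    print(observed_availability(720, [0.5, 0.22]))
```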

Scalability: Checking the scalability of a system helps test engineers discover how well it can scale up in terms of network traffic load, input/output throughput, data transaction volume, and the number of concurrent clients and users.

Utilization: System resource utilization is frequently validated during system performance testing and evaluation. The goal is to measure how much of each system resource the components and the system use under given loads. Common system resources include network bandwidth, CPU time, memory, disk, cache and so on.
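One simple way to observe CPU utilization for a single process is to compare the CPU time it consumed with the wall-clock time that elapsed. This sketch assumes Python’s `time.process_time` (user plus system CPU time of the current process); `cpu_share` and `busy_work` are hypothetical names, and the measurement covers only the calling process, not the whole machine:

```python
import time
from typing import Callable, Tuple


def cpu_share(work: Callable[[], object]) -> Tuple[float, float]:
    """Run `work` once; return (wall_seconds, share), where share is this process's
    CPU time divided by wall-clock time (1.0 means one core fully busy)."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # user + system CPU time of the current process
    work()
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start
    return wall, (cpu / wall if wall > 0 else 0.0)


def busy_work(seconds: float = 0.2) -> None:
    """Hypothetical CPU-bound task: spin until `seconds` have passed."""
    end = time.perf_counter() + seconds
    while time.perf_counter() < end:
        pass


if __name__ == "__main__":
    print("busy:", cpu_share(busy_work))
    print("idle:", cpu_share(lambda: time.sleep(0.2)))
```

A share near 1.0 means one core was busy the whole time; a multithreaded process can exceed 1.0. Machine-wide figures for memory, disk and network usually come from operating system counters or monitoring tools.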

5.3 Sub-Genres of Performance testing:

Load testing: Load testing is the simplest, yet realistic, form of performance testing. A load test is usually conducted to understand the behavior of an application under expected load. Scenarios are modeled on the demand the system is expected to face under real conditions. Load testing starts by placing a low demand on the system and gradually increasing the load. This load can be the expected number of concurrent users performing a specific number of transactions within a set duration. The process measures system performance under different loads and determines the maximum load at which the system still responds acceptably.
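The ramp-up described above can be sketched with threads acting as virtual users. Everything here is hypothetical: `simulated_request` stands in for the system under test and can serve only four requests at once, the load levels and the 15 ms acceptance limit are made-up values, and the mean is used only to keep the sketch short:

```python
import statistics
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Sequence

# Hypothetical system under test: it serves at most 4 requests at a time,
# so response time rises once more than 4 users are active.
_server_slots = threading.Semaphore(4)


def simulated_request() -> None:
    with _server_slots:
        time.sleep(0.01)  # about 10 ms of service time


def virtual_user(request: Callable[[], None], n_requests: int) -> List[float]:
    """One simulated user sending requests back to back; returns response times in seconds."""
    times: List[float] = []
    for _ in range(n_requests):
        start = time.perf_counter()
        request()
        times.append(time.perf_counter() - start)
    return times


def ramp_load(request: Callable[[], None], user_levels: Sequence[int],
              n_requests: int = 5) -> Dict[int, float]:
    """Step the load through `user_levels` (low to high); return mean response time per level."""
    results: Dict[int, float] = {}
    for users in user_levels:
        with ThreadPoolExecutor(max_workers=users) as pool:
            futures = [pool.submit(virtual_user, request, n_requests) for _ in range(users)]
            samples = [t for f in futures for t in f.result()]
        results[users] = statistics.mean(samples)
    return results


def max_acceptable_load(results: Dict[int, float], limit_s: float) -> int:
    """Highest load reached, in ascending order, before the mean first exceeds `limit_s` (0 if none)."""
    best = 0
    for users in sorted(results):
        if results[users] > limit_s:
            break
        best = users
    return best


if __name__ == "__main__":
    levels = ramp_load(simulated_request, [1, 2, 4, 8, 16])
    for users, mean_s in levels.items():
        print(f"{users:>3} users: mean response {mean_s * 1000:.1f} ms")
    print("max users within 15 ms:", max_acceptable_load(levels, 0.015))
```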

Stress testing: Stress testing involves testing a system under extreme conditions that rarely occur in normal operation. Such testing involves scenarios such as many users running concurrently for short periods, short transactions repeated quickly, and extraordinarily long transactions. Stress testing determines the application’s robustness under extreme load and helps application administrators decide whether the application will perform sufficiently if the load goes well above the expected maximum. The main purpose is to make sure that, when the system does fail, it fails and recovers gracefully.
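A stress test pushes load far above the expected peak and checks how the system fails. The sketch below assumes a hypothetical `BoundedService` that sheds excess requests by raising `Overloaded` (a graceful failure) and then verifies that it serves normal load again afterwards; the capacity of 5 and the burst of 50 are illustrative values:

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict


class Overloaded(Exception):
    """Raised by the hypothetical service when it sheds load instead of crashing."""


class BoundedService:
    """Hypothetical service that accepts at most `capacity` concurrent requests."""

    def __init__(self, capacity: int) -> None:
        self._slots = threading.BoundedSemaphore(capacity)

    def handle(self) -> str:
        if not self._slots.acquire(blocking=False):  # no free slot: reject quickly
            raise Overloaded()
        try:
            time.sleep(0.02)  # simulated work
            return "ok"
        finally:
            self._slots.release()


def fire(service: BoundedService, n_concurrent: int) -> Dict[str, int]:
    """Send `n_concurrent` simultaneous requests and tally the outcomes."""
    def one() -> str:
        try:
            service.handle()
            return "ok"
        except Overloaded:
            return "rejected"  # graceful: an explicit, fast refusal
        except Exception:
            return "crashed"   # anything else counts as an ungraceful failure

    tally = {"ok": 0, "rejected": 0, "crashed": 0}
    with ThreadPoolExecutor(max_workers=n_concurrent) as pool:
        futures = [pool.submit(one) for _ in range(n_concurrent)]
        for future in futures:
            tally[future.result()] += 1
    return tally


if __name__ == "__main__":
    service = BoundedService(capacity=5)
    print("stress, 10x expected peak:", fire(service, 50))
    print("recovery, normal load:", fire(service, 3))
```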

Spike testing: Spike testing suddenly increases the number of users and observes the behavior of the application to determine whether performance will suffer, the application will fail, or it will be able to handle dramatic changes in load.
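A spike test is driven by a load profile with an abrupt jump rather than a gradual ramp. This is a hypothetical sketch: `spike_profile` produces the number of concurrent users per time step, and `recovered` applies an assumed 20% tolerance to judge whether response time returned to its pre-spike level:

```python
from typing import List


def spike_profile(baseline: int, spike: int, total_steps: int,
                  spike_start: int, spike_len: int) -> List[int]:
    """Concurrent users per time step: a flat baseline, an abrupt jump to `spike`,
    then an abrupt drop back to the baseline."""
    if not 0 <= spike_start <= spike_start + spike_len <= total_steps:
        raise ValueError("the spike window must fit inside the test")
    return [spike if spike_start <= t < spike_start + spike_len else baseline
            for t in range(total_steps)]


def recovered(before_ms: float, after_ms: float, tolerance: float = 0.2) -> bool:
    """True if response time after the spike is back within `tolerance` of the pre-spike value."""
    return after_ms <= before_ms * (1 + tolerance)


if __name__ == "__main__":
    print(spike_profile(baseline=10, spike=200, total_steps=8, spike_start=3, spike_len=2))
    # [10, 10, 10, 200, 200, 10, 10, 10]
```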

Soak testing: Soak testing is usually done to determine whether the application can sustain the expected load continuously. During soak tests, memory utilization is monitored to detect potential leaks. Soak testing also ensures that throughput and/or response time after a long period of sustained activity are as good as or better than at the beginning of the test.
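Memory growth during a soak test can be tracked by sampling memory at regular intervals. This sketch uses Python’s standard `tracemalloc` module, which only sees memory allocated by Python code in the current process; `soak`, `looks_like_leak`, `leaky_task` and `clean_task` are hypothetical, and the leak heuristic (memory never falls between samples and grows by more than a threshold) is an assumption, not a standard rule:

```python
import tracemalloc
from typing import Callable, List


def soak(task: Callable[[], object], iterations: int, sample_every: int) -> List[int]:
    """Run `task` repeatedly; every `sample_every` runs, record the bytes currently traced."""
    samples: List[int] = []
    tracemalloc.start()
    try:
        for i in range(1, iterations + 1):
            task()
            if i % sample_every == 0:
                current, _peak = tracemalloc.get_traced_memory()
                samples.append(current)
    finally:
        tracemalloc.stop()
    return samples


def looks_like_leak(samples: List[int], min_growth_bytes: int) -> bool:
    """Heuristic: memory never drops between samples and grows by at least `min_growth_bytes`."""
    rising = all(later >= earlier for earlier, later in zip(samples, samples[1:]))
    return len(samples) >= 2 and rising and samples[-1] - samples[0] >= min_growth_bytes


_cache: List[bytearray] = []  # hypothetical leak: nothing ever removes entries


def leaky_task() -> None:
    _cache.append(bytearray(1024))


def clean_task() -> None:
    bytearray(1024)  # allocated and freed immediately


if __name__ == "__main__":
    print("leaky:", looks_like_leak(soak(leaky_task, 1_000, 100), 100_000))
    print("clean:", looks_like_leak(soak(clean_task, 1_000, 100), 100_000))
```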

Isolation testing: In isolation testing, the application is isolated and a test execution is run repeatedly to check whether any fault occurs.

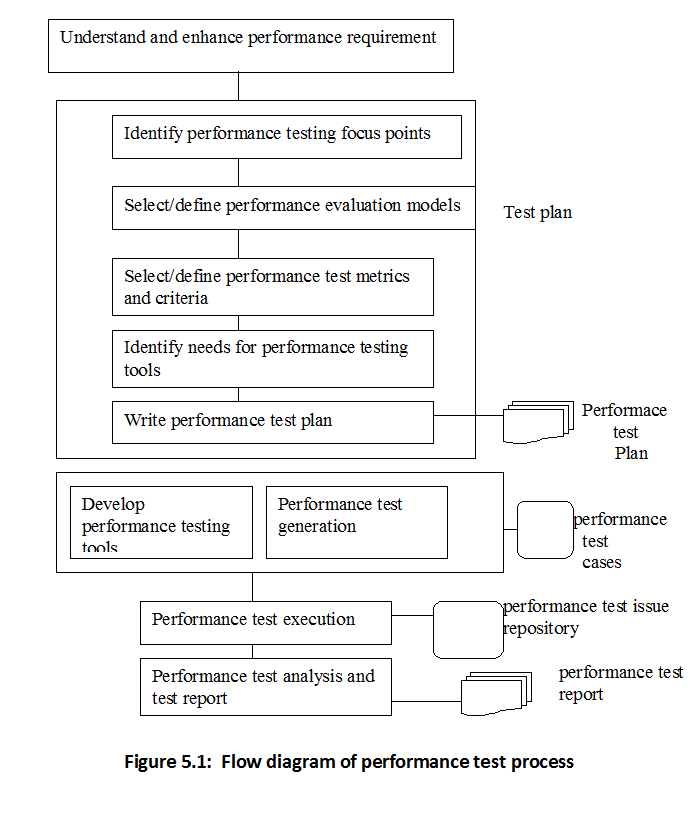

5.4 Performance Test process:

A performance testing process is needed to support the control and management of system performance testing and evaluation. Usually, a performance testing process includes the following steps:

Step 1: Understand and enhance performance requirements:

Before carrying out performance testing and evaluation activities, engineers must understand the system performance requirements. In many cases, performance engineers and testers need to review system specification documents to specify, check, define and enhance system performance requirements. There are two tasks in this step. The first is to make sure that the necessary performance requirements are specified. The second is to check whether the given performance requirements are measurable and technologically achievable.

Step 2: Identify the focus areas in performance testing and evaluation:

The major goal of this step is to communicate with system analysts and development managers to identify the major objectives and focus areas for performance validation, and to list them in order of priority.

Step 3 (a): Select or define performance evaluation models.

The major task here is to select and/or define performance evaluation models as a basis for defining performance evaluation metrics and supporting tools.

Step 3 (b): Select and/or define performance test metrics and criteria.

For each performance metric, engineers must make sure that it is feasible to collect and monitor the necessary performance data and parameters during performance evaluation.
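To make criteria checkable, each one can be stated as a named metric with a numeric limit, and the evaluation can report metrics that were never collected instead of silently passing them. The `Criterion` class, metric names and limits below are hypothetical:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Criterion:
    """Hypothetical measurable pass/fail rule, e.g. 'p95 response time <= 2000 ms'."""
    metric: str  # name of a metric the test harness collects
    limit: float
    higher_is_better: bool = False

    def passes(self, value: float) -> bool:
        return value >= self.limit if self.higher_is_better else value <= self.limit


def evaluate(criteria: List[Criterion], measured: Dict[str, float]) -> Dict[str, str]:
    """Judge each criterion; a metric that was never collected is reported, not silently passed."""
    verdicts: Dict[str, str] = {}
    for c in criteria:
        if c.metric not in measured:
            verdicts[c.metric] = "NOT COLLECTED"
        else:
            verdicts[c.metric] = "PASS" if c.passes(measured[c.metric]) else "FAIL"
    return verdicts


if __name__ == "__main__":
    criteria = [
        Criterion("p95_response_ms", 2000),
        Criterion("throughput_rps", 50, higher_is_better=True),
        Criterion("error_rate", 0.01),
    ]
    print(evaluate(criteria, {"p95_response_ms": 1800, "throughput_rps": 42}))
    # {'p95_response_ms': 'PASS', 'throughput_rps': 'FAIL', 'error_rate': 'NOT COLLECTED'}
```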

Step 4: Identify needs of performance testing and evaluation tools.

The major task here is to discover and define the needs of performance testing and evaluation solutions, including necessary performance data collection and monitoring techniques, performance analysis facilities and supporting environment and tools.

Step 5: Write a performance test plan.

The result of this step is a performance test plan that includes performance validation objectives, focus areas, performance models, evaluation metrics, and the required solutions and tools. In addition, the plan must include a performance test schedule that specifies the tasks.

Step 6 (a): Develop and deploy performance testing tools.

Step 6 (b): Design and generate performance test cases, test data and test suites.

Step 7: Carry out performance test and evaluation task.

Step 8: Analyze and report system performance:

The major task here is to produce a high-quality performance validation and evaluation report based on the collected performance test results. A good report presents well-formatted results and gives a clear picture of system performance issues and limits.

5.5 Performance indicators:

To ensure the stability of the website and provide a good measure for performance testing, it is necessary to define a set of indicators. These indicators describe the performance behavior of the website and can be categorized as client-side or server-side. Although they provide basic information about performance, they do not give a true picture of the real environment. The selection of performance parameters depends on the type of website and its requirements; however, some performance parameters are common to all websites. A few performance indicators are defined below:

Processor: The processor is an important resource that affects application performance. It is necessary to measure the amount of time one or more CPUs spend processing threads. If the figure is consistently above 90% on one or more of the processors, the test is too intense for the hardware. Checking the figure per processor is relevant only when the system has multiple processors.
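“Consistently above 90%” needs a concrete definition before it can be checked automatically. This sketch assumes it means at least three consecutive samples above the threshold; the function names, the window size and the sample values are all hypothetical:

```python
from typing import Dict, List, Sequence


def sustained_above(samples: Sequence[float], threshold: float, min_consecutive: int) -> bool:
    """True if at least `min_consecutive` consecutive samples exceed `threshold`."""
    run = 0
    for value in samples:
        run = run + 1 if value > threshold else 0
        if run >= min_consecutive:
            return True
    return False


def overloaded_cpus(per_cpu: Dict[str, Sequence[float]], threshold: float = 90.0,
                    min_consecutive: int = 3) -> List[str]:
    """Names of processors whose utilization (%) stayed above `threshold` for a sustained stretch."""
    return [cpu for cpu, samples in per_cpu.items()
            if sustained_above(samples, threshold, min_consecutive)]


if __name__ == "__main__":
    # Hypothetical per-processor utilization samples (%) taken once per second.
    samples = {
        "cpu0": [45, 92, 95, 97, 96, 60],  # four consecutive samples above 90%
        "cpu1": [40, 91, 50, 93, 40, 30],  # brief peaks only
    }
    print(overloaded_cpus(samples))  # ['cpu0']
```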

Physical disk: Since the disk is a slow device with large storage capacity, parameters such as latency and elapsed time provide useful information for improving performance. System performance also depends on the disk queue length, which shows the number of outstanding requests on the disk. A persistently long queue indicates a disk or memory problem.
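Little’s law (mean number in a system = arrival rate × mean time in the system) gives a quick cross-check between the disk queue length and the disk’s throughput and latency. The law holds for long-run averages of a stable system; the function name and figures below are hypothetical:

```python
def mean_queue_length(iops: float, mean_latency_s: float) -> float:
    """Little's law: mean outstanding requests = arrival rate x mean time in system.

    `mean_latency_s` must be the full time a request spends at the disk (waiting plus
    service), averaged over the same interval as `iops`.
    """
    if iops < 0 or mean_latency_s < 0:
        raise ValueError("rates and times must be non-negative")
    return iops * mean_latency_s


if __name__ == "__main__":
    # Hypothetical interval: 200 I/O operations per second, 25 ms average disk time each.
    print(mean_queue_length(200, 0.025))  # about 5 outstanding requests
```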

Memory: Memory is an integral part of any computer system’s hardware. More memory speeds up I/O during execution but increases cost. All data and instructions to be executed must be available in memory, which is organized in pages. The more pages that are available in memory, the faster the execution. Observing the number of page faults gives information that helps decide on memory requirements. It is also worth checking how many pages are being moved to and from the disk to satisfy virtual memory requirements.
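On Unix-like systems, the page faults a process incurs can be read from Python’s standard `resource` module: `ru_minflt` counts faults served without I/O and `ru_majflt` counts faults that required I/O, which is the expensive case when physical memory is short. `page_faults_during` is a hypothetical helper, and the module is not available on Windows:

```python
import resource  # standard library, Unix-like systems only
from typing import Callable, Tuple


def page_faults_during(task: Callable[[], object]) -> Tuple[int, int]:
    """Run `task`; return (minor, major) page faults the current process incurred meanwhile.

    Minor faults are resolved without I/O; major faults required I/O, for example
    reading a page back from swap or from a file.
    """
    before = resource.getrusage(resource.RUSAGE_SELF)
    task()
    after = resource.getrusage(resource.RUSAGE_SELF)
    return after.ru_minflt - before.ru_minflt, after.ru_majflt - before.ru_majflt


if __name__ == "__main__":
    # Hypothetical task: allocate and zero about 50 MB.
    minor, major = page_faults_during(lambda: bytearray(50 * 1024 * 1024))
    print(f"minor faults: {minor}, major faults: {major}")
```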

Network Traffic: It is not possible to directly analyze network traffic on the Internet, because it depends on bandwidth, the type of network connection and other overheads. However, it is possible to measure the time taken for a given number of bytes to reach the client from the server. Bandwidth problems also affect performance, as the type of connection varies from client to client.

Performance indicators provide the basis for testing, and different types of counters can be set for each category. The values these counters report are a great help in analyzing performance.

5.6 Performance testing challenges:

Even beyond the technical limitations, creating, running and evaluating meaningful load tests is a complex and time-consuming task. Given the hectic pace of e-business expansion in today’s marketplace, many logistical problems can compromise a company’s load testing process, mostly because of urgent time-to-market deadlines or a lack of human or physical resources. The following logistical problems are often encountered in load testing:

Understanding the problem and defining goals: Experienced testers know that a large share of the analysis effort must go into defining the problem. There is no single, general-purpose test plan; each plan must be developed with a particular goal in mind. This matters because most performance problems are difficult to diagnose when they first appear.

Level of detail: The level of detail has a significant impact on the formulation of the problem. Formulations that are too narrow or too broad should be avoided.

Determining a representative workload: The workload used to load test a web application should be representative of the actual usage of the application in the field. An inappropriate workload will lead to inaccurate conclusions.
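One way to keep a test workload representative is to sample request types according to proportions observed in production logs. The mix below is a made-up example for an online shop, and `build_workload` is a hypothetical helper built on Python’s `random.choices`:

```python
import random
from collections import Counter
from typing import Dict, List


def build_workload(mix: Dict[str, float], n_requests: int, seed: int = 42) -> List[str]:
    """Draw a request sequence whose proportions follow `mix` (e.g. shares taken from logs)."""
    rng = random.Random(seed)  # fixed seed so every test run replays the same workload
    actions = list(mix)
    weights = [mix[a] for a in actions]
    return rng.choices(actions, weights=weights, k=n_requests)


if __name__ == "__main__":
    # Hypothetical mix for an online shop: mostly browsing, few purchases.
    mix = {"browse": 0.70, "search": 0.20, "add_to_cart": 0.07, "checkout": 0.03}
    print(Counter(build_workload(mix, 10_000)))
```

A fixed seed makes runs repeatable, so two test runs differ only in the system’s behavior, not in the workload.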

Simulation: Care needs to be taken in simulation and measurement to avoid the kinds of errors that occur if, for example, only average values are reported or the load tests are simply too short.
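The danger of reporting only averages shows up with a skewed set of response times. In this hypothetical run, 90 requests take 100 ms and 10 take 3 s; `percentile` is a small nearest-rank helper written for this sketch:

```python
import math
import statistics
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value such that at least `pct`% of samples are <= it."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need at least one value and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical run: 90 fast responses and 10 very slow ones (milliseconds).
    response_ms = [100.0] * 90 + [3000.0] * 10
    print("mean  :", statistics.mean(response_ms))  # 390.0
    print("median:", percentile(response_ms, 50))   # 100.0
    print("p95   :", percentile(response_ms, 95))   # 3000.0
```

The mean of 390 ms matches no request anyone experienced, while the 95th percentile exposes the slow tail. A test that is too short gathers so few slow samples that even percentiles become unreliable.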

Analysis: Load tests sometimes produce staggering amounts of data. The analysis and interpretation of all this raw information is where results are finally generated. Flawed analysis will produce flawed results; it takes experience and skill to extract meaningful conclusions from load testing data.

5.7 Conclusion:

Performance testing, which enhances customer confidence in the website, draws on many approaches and strategies. Testing as a whole is cumbersome and tedious because of the many complex behaviors involved. The behavior of each component is captured as a scenario using the record-and-playback feature found in most performance testing tools. The scenario is then executed in a test session at different stress levels, and the results are analyzed to reach a decision about the system’s performance.