Tampere University’s continuing research effort aims to develop adaptive safety systems for highly automated off-road mobile machinery to fulfill industry requirements. Research has uncovered serious loopholes in compliance with public safety legislation when deploying artificial intelligence-controlled mobile working devices.

As the use of highly automated off-road machinery grows, so does the demand for effective safety measures. Conventional safety protocols frequently overlook the health and safety issues posed by systems controlled by artificial intelligence (AI).

Marea de Koning, a doctoral researcher specializing in automation at Tampere University, is creating a safety framework specifically designed for autonomous mobile machines that collaborate with humans. Her goal is to ensure public safety without jeopardizing technological advancement. The framework aims to help original equipment manufacturers (OEMs), safety and system engineers, and industry stakeholders develop safety systems that comply with changing legislation.

Balance between humans and autonomous machines

Anticipating all the possible ways a hazard can emerge and ensuring that the AI can safely manage hazardous scenarios is practically impossible. We need to adjust our approach to safety to focus more on finding ways to manage unforeseen events successfully.

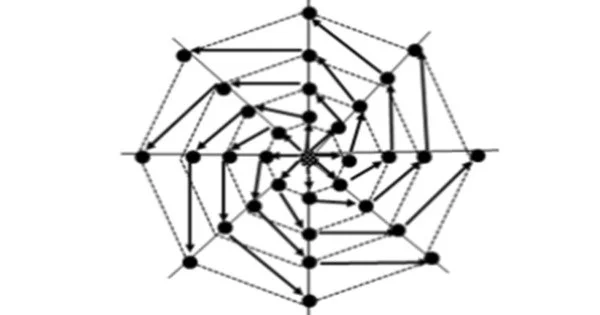

We need strong risk management systems, which frequently include a human-in-the-loop safety option in which a human supervisor is expected to intervene as needed. However, relying on human intervention is impractical for autonomous machines. According to de Koning, automation can significantly degrade human performance through factors such as boredom, confusion, limits on cognitive capacity, loss of situational awareness, and automation bias. These factors have a substantial impact on safety, so a machine must be able to manage its own behaviour safely.

“My approach considers hazards with AI-driven decision-making, risk assessment, and adaptability to unforeseen scenarios. I think it is important to actively engage with industry partners to ensure real-world applicability. By collaborating with manufacturers, it is possible to bridge the gap between theoretical frameworks and practical implementation,” she says.

The framework is intended to support OEMs in designing and developing compliant safety systems and in ensuring that their products adhere to evolving regulations.

Integrating the framework into existing machinery

Marea de Koning started her research in November 2020 and will finish it by November 2024. The project is funded partly by the Doctoral School of Industry Innovations and partly by a Finnish system supplier.

De Koning’s next research project, starting in April, will focus on integrating a subset of her safety framework into existing machinery and rigorously testing its effectiveness. Regulation (EU) 2023/1230 replaces Directive 2006/42/EC as of January 2027, which poses a significant challenge for OEMs.

“I’m doing everything I can to ensure that safety remains at the forefront of technological advancements,” she concludes.

Human collaboration helps ensure that AI-powered machinery meets regulatory standards and safety requirements. Humans can navigate complex regulatory frameworks and verify that AI systems meet legal requirements.

Collaboration with humans increases user and stakeholder trust in, and adoption of, AI-powered machines. When humans participate in the development and deployment process, they are more likely to trust AI-powered solutions and feel at ease using them.

In summary, greater collaboration with humans is critical for improving the safety of AI-powered machines because it combines human ingenuity, ethical oversight, adaptability, regulatory compliance, and user feedback to develop safer and more trustworthy AI systems.