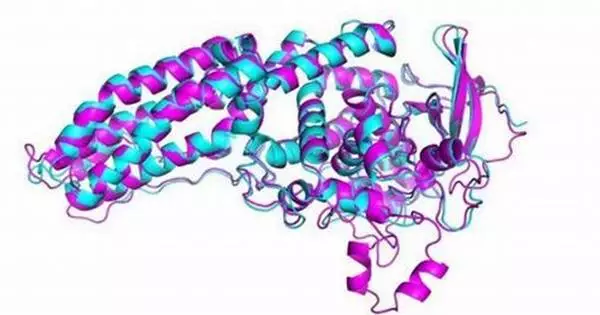

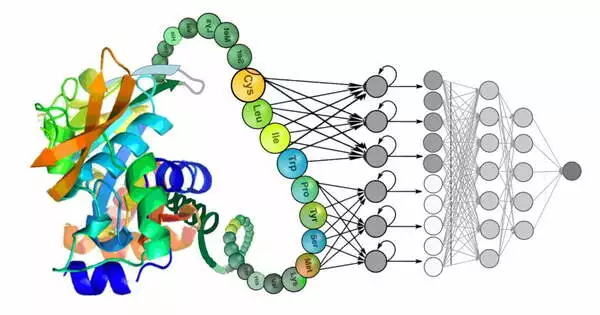

Deep learning has shown promising results in the field of protein design. The ability to accurately predict protein structures and functions is critical for a variety of applications, including drug discovery, enzyme engineering, and bioengineering.

Deep learning methods were used to augment existing energy-based physical models in ‘de novo,’ or from-scratch, computational protein design, resulting in a 10-fold increase in lab-verified success rates for binding a designed protein to its target protein. The findings could help scientists develop better drugs to combat diseases such as cancer and COVID-19.

The key to understanding the proteins that govern cancer, COVID-19, and other diseases is quite simple: determine their chemical structure and which other proteins can bind to them. But there’s a catch.

“The search space for proteins is enormous,” said Brian Coventry, a research scientist with the Institute for Protein Design, University of Washington and The Howard Hughes Medical Institute.

A protein studied by his lab is typically made of 65 amino acids, and with 20 different amino acid choices at each position, there are 20 to the 65th power possible sequences, a number bigger than the estimated number of atoms in the universe.
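The scale of that search space can be checked with a few lines of arithmetic. The sketch below assumes the commonly cited rough estimate of 10^80 atoms in the observable universe, a figure the article itself does not state; the function and constant names are illustrative only.

```python
# Back-of-the-envelope count of possible sequences for a 65-residue protein.
AMINO_ACID_CHOICES = 20        # choices per position, as stated in the article
PROTEIN_LENGTH = 65            # typical length studied by the lab
ATOMS_IN_UNIVERSE = 10 ** 80   # assumption: rough, commonly cited estimate


def sequence_space(length, choices=AMINO_ACID_CHOICES):
    """Number of distinct sequences: one independent choice per position."""
    return choices ** length


n_sequences = sequence_space(PROTEIN_LENGTH)
print(f"possible sequences: about 10^{len(str(n_sequences)) - 1}")
print("larger than atom estimate:", n_sequences > ATOMS_IN_UNIVERSE)
```

Because each position is chosen independently, the count is the number of choices raised to the sequence length, not the other way around.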

Coventry co-authored a study that was published in the journal Nature Communications. In it, his team used deep learning methods to augment existing energy-based physical models in ‘de novo,’ or from-scratch, computational protein design, resulting in a 10-fold increase in success rates for binding a designed protein to its target protein, which was verified in the lab.

“We demonstrated that incorporating deep learning methods to evaluate the quality of the interfaces where hydrogen bonds form or from hydrophobic interactions can result in a significantly improved pipeline,” said study co-author Nathaniel Bennett, a post-doctoral scholar at the University of Washington’s Institute for Protein Design.

“This is as opposed to trying to exactly enumerate all of these energies by themselves,” he added. Readers might be familiar with popular examples of deep learning applications such as the language model ChatGPT or the image generator DALL-E.

Deep learning employs computer algorithms to analyze and infer patterns in data, layering the algorithms to extract higher-level features from the raw input. Deep learning methods were used in the study to learn iterative transformations of representations of the protein sequence and possible structure that rapidly converge on models that turn out to be very accurate.

The authors’ deep learning-augmented de novo protein binder design protocol included the machine learning software tools AlphaFold 2 and RoseTTAFold, the latter developed by the Institute for Protein Design.

Study co-author David Baker, the director of the Institute for Protein Design and an investigator with the Howard Hughes Medical Institute, was awarded a Pathways allocation on the Texas Advanced Computing Center’s (TACC) Frontera supercomputer, which is funded by the National Science Foundation.

The study problem was well-suited for parallelization on Frontera because the protein design trajectories are all independent of one another, meaning that information didn’t need to pass between design trajectories as the compute jobs were running.
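This kind of workload is often called embarrassingly parallel. A minimal sketch of the idea follows, assuming a hypothetical `run_trajectory` function as a stand-in for one design trajectory. It is not the authors' pipeline, and a thread pool is used only to keep the example self-contained; on a cluster each trajectory would more likely be a separate process or batch job.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def run_trajectory(design_id):
    """Hypothetical stand-in for one design trajectory.

    It depends only on its own input, so no information has to pass
    between trajectories while they run.
    """
    rng = random.Random(design_id)  # per-design seed, no shared state
    return design_id, rng.random()  # (design id, placeholder score)


def run_all(design_ids, workers=4):
    """Run every trajectory independently and collect results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_trajectory, design_ids))


results = run_all(range(8))
for design_id, score in results:
    print(design_id, round(score, 3))
```

Since no trajectory waits on another, adding workers shortens the total run time without any change to the per-design code.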

“We just split up this problem, which has 2 to 6 million designs in it, and run all of those in parallel on the massive computing resources of Frontera. It has a large amount of CPU nodes on it. And we assigned each of these CPUs to do one of these design trajectories, which let us complete an extremely large number of design trajectories in a feasible time,” said Bennett.

The researchers used the RifDock docking program to generate six million protein ‘docks,’ or interactions between potentially bound protein structures, then divided them into chunks of 100,000 docks and assigned each chunk to one of Frontera’s 8,000+ compute nodes using Linux utilities.

Each chunk of 100,000 docks was then divided into 100 jobs, each containing a thousand proteins. Each batch of a thousand proteins is fed into the computational design software Rosetta, where the designs are screened first at the tenth-of-a-second scale and then at the few-minutes scale.
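The batching arithmetic and the fast-then-slow screening can be sketched as follows. This assumes chunks of 100,000 docks each, which is what 100 jobs of a thousand proteins imply; the scoring functions, cutoff, and names here are hypothetical placeholders and not Rosetta's API.

```python
from itertools import islice

TOTAL_DOCKS = 6_000_000   # docks generated, per the article
CHUNK_SIZE = 100_000      # assumption: docks per chunk
JOB_SIZE = 1_000          # proteins per job, per the article

n_chunks = TOTAL_DOCKS // CHUNK_SIZE      # chunks spread across nodes
jobs_per_chunk = CHUNK_SIZE // JOB_SIZE   # jobs inside each chunk


def batched(items, size):
    """Yield consecutive lists of at most `size` items."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def two_stage_screen(designs, fast_score, slow_score, fast_cutoff, keep):
    """Cheap filter first, then the expensive score only on survivors."""
    survivors = [d for d in designs if fast_score(d) >= fast_cutoff]
    return sorted(survivors, key=slow_score, reverse=True)[:keep]


print(n_chunks, "chunks of", jobs_per_chunk, "jobs each")
```

Running the cheap filter first means the expensive step sees only the designs that survive it, which is why a tenth-of-a-second screen ahead of a minutes-long one pays off at this scale.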

What’s more, the authors used the software tool ProteinMPNN, developed by the Institute for Protein Design, to further increase computational efficiency: it uses neural networks to generate protein sequences more than 200 times faster than the previous best software.

The yeast surface display binding data used in their modeling was collected by the Institute for Protein Design and is all publicly available. Tens of thousands of different strands of DNA were ordered, each encoding a different protein designed by the scientists.

After that, the DNA was combined with yeast, resulting in each yeast cell expressing one of the designed proteins on its surface. The yeast cells were then separated into those that bind and those that do not. The researchers then used tools from the human genome sequencing project to determine which DNA worked and which did not.

Although the study showed a 10-fold increase in the success rate for designed structures binding to their target protein, there is still a long way to go, according to Coventry.