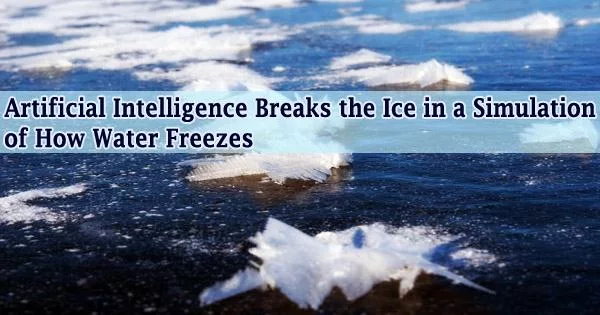

A team from Princeton University has simulated the early stages of ice formation by applying artificial intelligence (AI) to the equations that govern the quantum behavior of individual atoms and molecules.

The simulation shows with quantum accuracy how water molecules transition into solid ice. By incorporating deep neural networks, a form of artificial intelligence, into their methods, the researchers achieved a level of accuracy once thought unreachable because of the computing power it would require. The study was published in the journal Proceedings of the National Academy of Sciences.

“In a sense, this is like a dream come true,” said Roberto Car, Princeton’s Ralph W. *31 Dornte Professor in Chemistry, who co-pioneered the approach of simulating molecular behaviors based on the underlying quantum laws more than 35 years ago.

“Our hope then was that eventually we would be able to study systems like this one, but it was not possible without further conceptual development, and that development came via a completely different field, that of artificial intelligence and data science.”

Modeling the early stages of freezing water, or ice nucleation, could increase the precision of weather and climate predictions as well as other processes like flash-freezing food.

The new method lets the researchers track the activity of hundreds of thousands of atoms over periods thousands of times longer than in previous studies, although those periods are still only fractions of a second.

Car co-invented the method of applying the underlying quantum mechanical laws to predict the physical motions of atoms and molecules. Quantum mechanical laws govern how atoms bind to one another to form molecules and how molecules join with one another to form everyday objects.

Car and Michele Parrinello, a physicist now at the Istituto Italiano di Tecnologia in Italy, published their approach, known as “ab initio” (Latin for “from the beginning”) molecular dynamics, in a groundbreaking paper in 1985.

However, quantum mechanical calculations are complex and demand a great deal of computing power. In the 1980s, computers could simulate only one hundred atoms over timescales of a few trillionths of a second.

Modern supercomputers and subsequent breakthroughs in computing increased the simulation’s atom count and runtime, but these numbers were still far below the number of atoms required to witness intricate processes like ice nucleation.

An appealing possible solution was offered by AI. Researchers train a neural network, so called because of its resemblance to how the human brain functions, to recognize a relatively small subset of chosen quantum calculations.

Once trained, the neural network can calculate, with quantum mechanical accuracy, the forces between atoms in configurations it has never seen before. Voice recognition and self-driving cars are just two examples of everyday applications that rely on this “machine learning” approach.
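The train-then-predict idea can be illustrated with a toy sketch. It is not the deep potential method used in the study. A simple Morse potential for two atoms stands in for the expensive quantum calculations, and the network is a deliberately small one whose hidden layer is fixed at random while only its output weights are fitted. The class name `TinyPotentialNet`, the parameter values, and the choice of potential are all assumptions made for illustration.

```python
import numpy as np

# Stand-in for an expensive quantum calculation (an assumption for this sketch):
# a Morse potential giving the energy of two atoms a distance r apart.
def reference_energy(r, depth=1.0, width=1.0, r0=1.0):
    return depth * (1.0 - np.exp(-width * (r - r0))) ** 2

def reference_force(r, depth=1.0, width=1.0, r0=1.0):
    # The force is the negative derivative of the energy with respect to r.
    decay = np.exp(-width * (r - r0))
    return -2.0 * depth * width * (1.0 - decay) * decay

class TinyPotentialNet:
    """Hypothetical toy model: one hidden tanh layer with fixed random
    weights; only the output weights are fitted, by least squares."""

    def __init__(self, hidden=30, seed=0):
        rng = np.random.default_rng(seed)
        self.w = rng.normal(0.0, 2.0, size=hidden)    # fixed input weights
        self.b = rng.uniform(-4.0, 4.0, size=hidden)  # fixed biases
        self.out = np.zeros(hidden + 1)               # output weights + bias

    def _hidden(self, r):
        return np.tanh(np.outer(r, self.w) + self.b)

    def fit(self, r, energy):
        features = np.column_stack([self._hidden(r), np.ones_like(r)])
        self.out, *_ = np.linalg.lstsq(features, energy, rcond=None)
        return self

    def energy(self, r):
        r = np.atleast_1d(r).astype(float)
        return self._hidden(r) @ self.out[:-1] + self.out[-1]

    def force(self, r):
        r = np.atleast_1d(r).astype(float)
        h = self._hidden(r)
        # d/dr tanh(w*r + b) = w * (1 - tanh^2), and force = -dE/dr.
        return -((1.0 - h ** 2) * self.w) @ self.out[:-1]

# "Train" on a modest set of reference energies.
train_r = np.linspace(0.7, 3.0, 200)
model = TinyPotentialNet().fit(train_r, reference_energy(train_r))

# Predict forces at distances that were not used for fitting.
test_r = np.array([0.93, 1.37, 2.21])
predicted = model.force(test_r)
exact = reference_force(test_r)
print("largest force error:", np.max(np.abs(predicted - exact)))
```

The model is fitted only to energies, and the forces follow from the slope of the learned energy curve. Real machine-learned potentials handle many atoms in three dimensions and are trained on actual quantum calculations, which this sketch does not attempt.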

In the case of AI applied to molecular modeling, a major contribution came in 2018 when Princeton graduate student Linfeng Zhang, working with Car and Princeton professor of mathematics Weinan E, found a way to apply deep neural networks to modeling quantum-mechanical interatomic forces. Zhang, who earned his Ph.D. in 2020 and is now a research scientist at the Beijing Institute of Big Data Research, called the approach “deep potential molecular dynamics.”

In the current study, Car and postdoctoral researcher Pablo Piaggi, along with colleagues, applied these methods to the difficult task of simulating ice nucleation.

Using deep potential molecular dynamics, they were able to run simulations of up to 300,000 atoms with substantially less computing power and over far longer timespans than were previously achievable. They ran the simulations on Summit, one of the world’s fastest supercomputers, which is housed at Oak Ridge National Laboratory.

According to Pablo Debenedetti, a co-author of the new study and the Class of 1950 Professor of Engineering and Applied Science at Princeton University, this work offers one of the best examinations of ice nucleation.

“Ice nucleation is one of the major unknown quantities in weather prediction models,” Debenedetti said. “This is a quite significant step forward because we see very good agreement with experiments. We’ve been able to simulate very large systems, which was previously unthinkable for quantum calculations.”

At present, climate models estimate how quickly ice nucleates mainly from observations made in laboratory experiments, but the resulting correlations are descriptive rather than predictive and hold only for a small set of experimental settings.

In contrast, molecular simulations of the kind used in this study can be predictive and can estimate how much ice will form in high-temperature, high-pressure environments, such as those found on other planets.

“The deep potential methodology used in our study will help realize the promise of ab initio molecular dynamics to produce valuable predictions of complex phenomena, such as chemical reactions and the design of new materials,” said Athanassios Panagiotopoulos, the Susan Dod Brown Professor of Chemical and Biological Engineering and a co-author of the study.

“The fact that we are studying very complex phenomena from the fundamental laws of nature, to me that is very exciting,” said Piaggi, the study’s first author and a postdoctoral research associate in chemistry at Princeton.

While pursuing his doctorate, Piaggi worked with Parrinello on developing new techniques for studying rare events, such as nucleation, using computer simulation. Even with the aid of AI, rare events occur over timescales too long to simulate directly, so specialized techniques are needed to accelerate them.

Jack Weis, a Ph.D. student in chemical and biological engineering, helped increase the likelihood of observing nucleation by “seeding” microscopic ice crystals into the simulation.

“The goal of seeding is to increase the likelihood that water will form ice crystals during the simulation, allowing us to measure the nucleation rate,” said Weis, who is advised by Debenedetti and Panagiotopoulos.

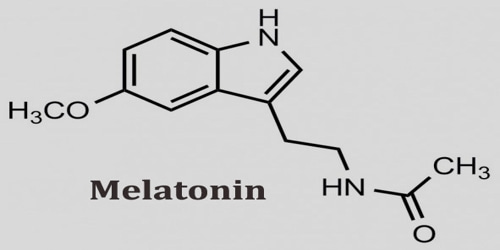

Two hydrogen atoms and one oxygen atom make up a water molecule. The number of electrons surrounding each atom controls how easily atoms can join together to form molecules.

“We start with the equation that describes how electrons behave,” Piaggi said. “Electrons determine how atoms interact, how they form chemical bonds, and virtually the whole of chemistry.”

The atoms can exist in literally millions of distinct configurations, according to Car, who directs the Chemistry in Solution and at Interfaces center, which receives funding from the U.S. Department of Energy Office of Science and collaborates with local colleges.

“The magic is that because of some physical principles, the machine is able to extrapolate what happens in a relatively small number of configurations of a small collection of atoms to the countless arrangements of a much bigger system,” Car said.

Although AI approaches have been available for some years, researchers have been cautious about applying them to calculations of physical systems, Piaggi said. “When machine learning algorithms started to become popular, a big part of the scientific community was skeptical, because these algorithms are a black box. Machine learning algorithms don’t know anything about the physics, so why would we use them?”

This mindset has significantly changed in recent years, according to Piaggi, not just because the algorithms are effective but also because scientists are now applying their understanding of physics to guide the development of machine learning models.

For Car, it is satisfying to see the work started three decades ago come to fruition.

“The development came via something that was developed in a different field, that of data science and applied mathematics,” Car said. “Having this kind of cross interaction between different fields is very important.”

This work was supported by the U.S. Department of Energy (grant DE-SC0019394) and used resources of the Oak Ridge Leadership Computing Facility (grant DE-AC05-00OR22725) at the Oak Ridge National Laboratory.

The simulations were run largely on the Princeton Research Computing resources at Princeton University. Pablo Piaggi was supported by an Early Postdoc.Mobility fellowship from the Swiss National Science Foundation.