A novel machine-learning technique can train and control a reconfigurable soft robot that can change shape to complete a task. The team also developed a simulator to test control algorithms for shape-shifting soft robots.

Imagine a slime-like robot that can fluidly change shape to squeeze through tight spaces and could be deployed inside the human body to remove an unwanted object. While such a robot does not yet exist outside a laboratory, researchers are working to develop reconfigurable soft robots for use in health care, wearable devices, and industrial systems.

But how can you control a squishy robot that has no movable joints, limbs, or fingers and can change its entire shape at will? MIT researchers are attempting to address that question.

They created a control algorithm that can autonomously learn how to move, stretch, and shape a reconfigurable robot to complete a specific task, even if that task requires the robot to change its morphology several times. The researchers also built a simulator to test control algorithms for deformable soft robots on a series of challenging shape-changing tasks.

Their technique completed each of the eight tasks they evaluated, outperforming other algorithms. It worked especially well on multifaceted tasks. For example, in one test, the robot had to reduce its height while growing two tiny legs to squeeze through a narrow pipe, then retract those legs and extend its torso to open the pipe’s lid.

While reconfigurable soft robots are still in their early stages, this technology could one day allow general-purpose robots to modify their shapes to perform a variety of jobs.

“When people think about soft robots, they tend to think about robots that are elastic, but return to their original shape. Our robot is like slime and can actually change its morphology. It is very striking that our method worked so well because we are dealing with something very new,” says Boyuan Chen, an electrical engineering and computer science (EECS) graduate student and co-author of a paper on this approach.

Chen’s co-authors include lead author Suning Huang, an undergraduate student at Tsinghua University in China who completed this work while a visiting student at MIT; Huazhe Xu, an assistant professor at Tsinghua University; and senior author Vincent Sitzmann, an assistant professor of EECS at MIT who leads the Scene Representation Group in the Computer Science and Artificial Intelligence Laboratory. The research will be presented at the International Conference on Learning Representations.

Controlling dynamic motion

Scientists frequently train robots to do tasks using a machine-learning approach known as reinforcement learning, which is a trial-and-error process in which the robot is rewarded for actions that get it closer to a goal.

This works well when the robot’s moving parts are consistent and well-defined, such as a gripper with three fingers. A reinforcement learning algorithm controlling a robotic gripper might move one finger slightly, learning by trial and error whether that motion earns a reward. Then it would move on to the next finger, and so on. However, magnetically controlled shape-shifting robots can dynamically squish, bend, or extend their entire bodies.

“Such a robot could have thousands of small pieces of muscle to control, so it is very hard to learn in a traditional way,” says Chen.

To overcome this difficulty, he and his collaborators had to approach the problem differently. Rather than controlling each tiny muscle independently, their reinforcement learning algorithm starts by learning to control groups of adjacent muscles that work together. After exploring the space of possible actions by focusing on muscle groups, the algorithm drills down into finer detail to optimize the policy, or action plan, that it has learned. In this way, the control algorithm follows a coarse-to-fine approach.
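One way to picture the coarse-to-fine idea is as a low-resolution grid of commands that is stretched over a finer grid of muscles, followed by small per-muscle corrections. The sketch below is only an illustration under that assumption, not the researchers' implementation; the function names `upsample_nearest` and `refine`, the grid sizes, and the action values are all hypothetical.

```python
def upsample_nearest(coarse, factor):
    """Copy each coarse command onto a factor x factor block of muscles."""
    fine = []
    for row in coarse:
        expanded = [value for value in row for _ in range(factor)]
        fine.extend(list(expanded) for _ in range(factor))
    return fine


def refine(base, residual):
    """Add small per-muscle corrections on top of the coarse commands."""
    return [
        [b + r for b, r in zip(base_row, res_row)]
        for base_row, res_row in zip(base, residual)
    ]


# Coarse stage (hypothetical values): 4 commands drive 16 muscles,
# so each command moves a 2 x 2 group of adjacent muscles together.
coarse_action = [[1.0, -1.0],
                 [0.5, 0.0]]
fine_action = upsample_nearest(coarse_action, factor=2)

# Fine stage: adjust a single muscle without disturbing its neighbors.
residual = [[0.0] * 4 for _ in range(4)]
residual[0][0] = 0.25
refined_action = refine(fine_action, residual)

for row in refined_action:
    print(row)
```

In the coarse stage, changing one number changes a whole block of muscles, which is why a random exploratory action tends to produce a visible effect. The fine stage then has far fewer large decisions left to make.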

“Coarse-to-fine means that when you take a random action, that random action is likely to make a difference. The change in the outcome is likely very significant because you coarsely control several muscles at the same time,” Sitzmann says.

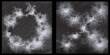

To enable this, the researchers treat a robot’s action space, or how it can move in a certain area, like an image. Their machine-learning model uses images of the robot’s environment to generate a 2D action space, which includes the robot and the area around it. They simulate robot motion using what is known as the material point method, where the action space is covered by points, like image pixels, and overlaid with a grid.
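The image analogy can be made concrete with a small sketch in which the action space is a 2D grid over the workspace and each point of the robot’s body reads the command stored in the grid cell it currently occupies. This is a simplified illustration, not the authors' code: it assumes a unit-square workspace and a nearest-cell lookup, whereas a real material point method simulation transfers quantities between points and grid with interpolation weights. The names `cell_index` and `sample_actions` are hypothetical.

```python
def cell_index(x, y, grid_size):
    """Map a point in the unit square to the (row, col) of its grid cell."""
    col = min(int(x * grid_size), grid_size - 1)
    row = min(int(y * grid_size), grid_size - 1)
    return row, col


def sample_actions(action_grid, particles):
    """Give each particle the action stored in the cell it occupies."""
    grid_size = len(action_grid)
    sampled = []
    for x, y in particles:
        row, col = cell_index(x, y, grid_size)
        sampled.append(action_grid[row][col])
    return sampled


# Hypothetical 2 x 2 action "image" covering the workspace.
action_grid = [[0.0, 1.0],
               [2.0, 3.0]]

# Hypothetical body points, given as (x, y) in the unit square.
particles = [(0.1, 0.1), (0.9, 0.1), (0.6, 0.8), (1.0, 1.0)]

print(sample_actions(action_grid, particles))
```

Because nearby points fall into the same or adjacent cells, they receive the same or similar commands, which mirrors the way neighboring pixels in an image tend to be related.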

They designed their system to comprehend that neighboring action points have stronger correlations, just as nearby pixels in an image are related (for example, the pixels that form a tree in a photo). When the robot changes shape, points surrounding its “shoulder” move similarly, while points on its “leg” move similarly, but in a different way than those on the “shoulder.”

Furthermore, the researchers use the same machine-learning model both to observe the environment and to predict the actions the robot should take, which makes the approach more efficient.

Building a simulator

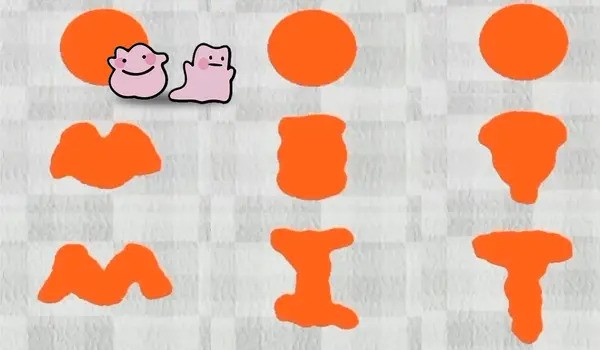

After developing this approach, the researchers needed a way to test it, so they built a simulation environment called DittoGym. DittoGym includes eight tasks that evaluate a reconfigurable robot’s ability to dynamically change shape. In one, the robot must elongate and curve its body to weave around obstacles and reach a target point. In another, it must change its shape to mimic letters of the alphabet.

“Our task selection in DittoGym follows both generic reinforcement learning benchmark design principles and the specific needs of reconfigurable robots. Each task is designed to represent certain properties that we deem important, such as the capability to navigate through long-horizon explorations, the ability to analyze the environment, and interact with external objects,” Huang says. “We believe they together can give users a comprehensive understanding of the flexibility of reconfigurable robots and the effectiveness of our reinforcement learning scheme.”

Their approach outperformed baseline methods and was the only technique able to complete multistage tasks that require several shape changes.

“We have a stronger correlation between action points that are closer to each other, and I think that is key to making this work so well,” Chen says.

While shape-shifting robots may not be deployed in the real world for many years, Chen and his colleagues hope that their work will inspire other scientists to research reconfigurable soft robotics as well as to consider using 2D action spaces for other complicated control challenges.