Motivation

The Fast Fourier Transform (FFT) is important to a wide range of applications, from signal processing to spectral methods for solving partial differential equations. The computationally challenging nature of the FFT has made it a staple of benchmarks for decades. The FFT benchmark is a simple program that stresses both the local memory bandwidth and the network bandwidth of a parallel computer. It is typical of a class of problems that can perform well on canonical parallel computers, provided there is suffcient network bandwidth. The communication pattern of this benchmark is complicated, but it is well matched to distributed arrays and can be implemented succinctly and effciently.

The FFT was used for measuring the bandwidth between processors on a network. FFT is also a good example of a communication-intensive parallel application with complex communication patterns and allows the demonstration of a number of useful parallel coding techniques, in particular, how to write a distributed array program that succinctly and efficiently implements these complicated patterns. A serial FFT algorithm is usually limited by the memory bandwidth. Likewise, a parallel FFT algorithm is usually limited by the aggregate bandwidth or bisection bandwidth of the communication network. The FFT provides an excellent illustration of the kinds of complex communication patterns that can perform well on canonical parallel computers, provided there is suffcient network bandwidth. This paper illustrates both coding and optimization approaches for efficiently implementing the FFT algorithm in pMatlab.

Objectives

This paper focuses on providing more breadth regarding the key design, code, debug, and test trade-o s associated with parallel programming. The goal is to reveal the breadth of issues associated with applying these principles. In addition, the following steps also provide a short introduction to the primary parallel programming models: distributed array semantics, message passing, and manager/worker. Some of the principles covered in this section include the following:

Design: When to go parallel, classes of parallel programs, parallel programming models, machine model.

Coding: Different parallel programming styles, impact of code on performance and scalability.

Debug: Details of the parallel debug process, what errors are found at each step?

Testing: Performance metrics and predicting performance (relative to machine parameters).

1.3 Literature Review

In commenting on Charles Van Loan.’s seminal book [1] Computational Frameworks for the Fast Fourier Transform, David Bailey wrote in the journal SIAM Review that –“the FFT has found application in almost every field of modern science, including fields as diverse as astronomy, acoustics, image processing, fluid dynamics, petroleum exploration, medicine, and quantum physics. It is not an exercise in hyperbole to say that the world as we know it would be different without the FFT.” [2]. This observation, made over a decade ago, is ever more valid today.

The application area of interest to us involves detection, classification and tracking of underwater targets [3]. As stealth technologies become more pervasive, there is a need to deploy ever more performing sensor arrays. To fully exploit the information contained in data measured by these novel devices requires use of unconventional algorithms that exhibit growing computational complexity (function of the number of acoustic channels and the number of complex samples in each observation window). For example, signal whitening has long been recognized as an essential stage in processing sonar array data [4]. The unconventional twice whitening paradigm includes the inversion of the spatiotemporal covariance matrix for ambient noise via unitary Fourier factorization [3]. The computational challenge one faces in such an endeavor stems from the following considerations. An array consisting of Ne acoustic channels, capturing Nt complex samples per time window per channel, (Ne ~ 103, Nt ~ 104) yields a spatio-temporal covariance

matrix of size 107 × 107 for diffuse ambient noise. Its inversion involves 3 × 103 16K -point complex FFTs [3]. This, in turn, translates into computational throughput that cannot readily be met with conventional hardware. In such a context, the emergence of streaming multicore processors with multi-SIMD architectures (e.g., the IBM Cell [5], the NVIDIA Tesla [6]), or with ultra-low power operation combined with real-time compute and I/O reconfigurability (Coherent Logix ““HyperX”” [7]), opens unprecedented opportunities for executing many sophisticated signal processing algorithms, including FFTs, faster and within a much lower energy budget.

Discrete Fourier transforms are primarily used in signal processing. They are also used in processing information stored in computers, solving partial differential equations, and performing convolutions. The discrete Fourier transform can be computed efficiently using the FFT algorithm. The FFT has various applications including digital audio recording and image processing. FFTs are also used in scientific and statistical applications, such as detecting periodic fluctuations in stock prices and analyzing seismographic information to take “sonograms” of the inside of the Earth [8]. Due to the vast usage of FFT different algorithms have been developed over time. We will discuss some of the FFT algorithms which are currently being used.

The discrete Fourier transform of length N requires time complexity to be O (N2) whereas the time complexity of FFT is O (Nlog2N), where N is the number of data points. The following table shows the significant difference between the operation counts for the DFT and FFT algorithms.

| N | DFT(counts) | FFT(counts) |

| 2 | 4 | 8 |

| 4 | 16 | 8 |

| 8 | 64 | 24 |

| 16 | 256 | 64 |

| 32 | 1024 | 160 |

| 64 | 4096 | 384 |

| 128 | 16384 | 896 |

| 256 | 65536 | 2048 |

| 512 | 262144 | 4608 |

| 1024 | 1048576 | 10240 |

Table 1.1 Operation counts for DFT and FFT

Fastest Fourier Transform in the West (FFTW)

The Fastest Fourier Transform in the West package developed at the Massachusetts Institute of Technology (MIT) by Matteo Frigo and Steve G. Johnson. FFTW is a subroutine library for computing the discrete Fourier transform (DFT) in one or more dimensions, of arbitrary input size, and of both real and complex data [9]. FFTW 3.1.2 is the latest official version of FFTW. Here is a list of some of FFTW’s more interesting features [9]:

1. FFTW supports both one one-dimensional and multi-dimensional transforms.

2. The input data can have arbitrary length. FFTW employs O(n log n) algorithms for all lengths, including prime numbers.

3. FFTW supports fast transforms of purely real input or output data.

4. FFTW with versions above 3.0 supports transforms of real even/odd data.

5. Efficient handling of multiple, strides transforms, which lets the user transform multiple arrays at once, transform one dimension of a multi-dimensional array, or transform one field of a multi-component array.

6. Portability to any platform with a C compiler

The FFTW has obtained more accurate and optimized results for FFT. They achieved more extensive benchmarks on a variety of platforms. Their code is machine independent. The same program without any modification performs well on all most all the architectures. FFTW’s performance is typically superior to any other publicly available FFT software. The authors of FFTW give three reasons for making their code more superior and faster than others [9]:

1. FFTW uses a variety of algorithms and implementation styles that adapt themselves to the machine.

2. FFTW uses an explicit divide-and-conquer methodology to take advantage of the memory hierarchy.

3. FFTW uses a code generator to produce highly optimized routines for computing small transforms.

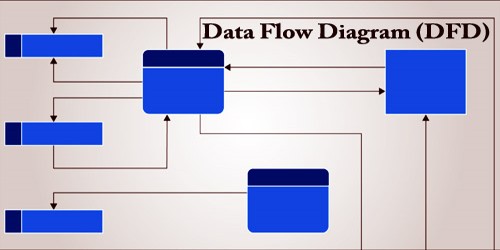

DFT IP Generator

The Spiral DFT IP generator [10] is a fast generator for customized DFT soft IP cores. The user provides variety of input parameters like size of DFT, scaling mode, data width, constant width, parallelism, twiddle storage method, and FIFO threshold that control the functionality of the generated core. All these parameters control resource tradeoffs such as area and throughput as well. After filling in the parameters in the input form, the resources are dynamically estimated. The output generated is synthesizable Verilog code for an n-point DFT with parallelism p.

Cooley-Tukey FFT algorithm

The Cooley-Tukey FFT algorithm is the most common algorithm for developing FFT. This algorithm uses a recursive way of solving DFT of any arbitrary size N [11]. The technique divides the larger DFT into smaller DFT. Which subsequently reduce the complexity of their algorithm. If the size of the DFT is N then this algorithm makes N=N1.N2 where N1 and N2 are smaller DFT’s. The complexity then becomes O (N log N). Radix-2 decimation-in-time (DIT) is the most common form of the Cooley-Tukey algorithm, for any arbitrary size N. Radix-2 DIT divides the size N DFT’s into two interleaved DFT’s of size N/2. The DFT is defined by the formula [12]:

Radix-2 divides the DFT into two equal parts. The first part calculates the Fourier transform of the even index numbers. The other part calculates the Fourier transform of the odd index numbers and then finally merges them to get the Fourier transform for the whole sequence. This will reduce the overall time to O (N log N).

The structure describes that given an input N, the algorithm divides it into equal pairs, and further divides it in a recursive way until single data points are left. Once all the data points are formed the algorithm then merges them to get the Fourier transform for the whole sequence.

In this chapter, we discussed the FFT models parallel computational scheme for the FFT which are currently being used. However the models provide sequential versions of the FFT. Little amount of research has been done on developing a parallel version of the FFT. We developed this approach of implementing the FFT in parallel.

Here, our objective is to report on the development, implementation, and demonstration of a novel, massively parallel computational scheme for the FFT that exploit the capabilities of one such multicore processor, namely the IBM Cell. Our paper is organized as follows. Capter 2 briefly reviews current approaches to parallelizing the FFT and the basic FFT algorithm, and motivates the new concept of transverse vectorization. Capter 3 highlights several multicore platforms that are under consideration for sensor array processing. The implementation of the transverse vectorization scheme on the IBM Cell is subsequently discussed in chapter 4.

Discrete Fourier Transform

This chapter is intended to provide a brief introduction to the discrete Fourier transform (DFT).. A major application of Fourier transforms is the analysis of a series of observations: xl , l = 0, . . . ,N−1.Typically, N will be quite large: 10000 would not be unusual. The sources of such observations are many: ocean tidal records over many years, communication signals over many microseconds, stockprices over a few months, sonar signals over a few minutes, and so on. The assumption is that there are repeating patterns in the data that form part of the xl. However, usually there will be other phenomena which may not repeat, or repeat in a way that is not discernably cyclic. This is called “noise.” The DFT helps to identify and quantify the cyclic phenomena. If a pattern repeats itself m times in the N observations, it is said to have Fourier frequency m. To make this more specific, suppose one measures a signal from time t = 0 to t = 680 in steps of 2.5 seconds, giving 273 observations. The measurements might appear as shown in Figure 1.1.

Complex Numbers

Effective computation of the DFT relies heavily on the use of complex numbers, so it is useful to review their basic properties. This material is elementary and probably wellknown , but it is included for completeness. Historically, complex numbers were introduced to deal with polynomial equations, such as x2 +1 =0, which have no real solutions. Informally, they can be defined as the set C of all “numbers” of the form a + jb where a and b are real numbers and j2 = −1. Addition, subtraction, and multiplication are performed among complex numbers by treating them as binomials in the unknown j and using j2 = −1 to simplify the result. Thus

(a + jb) + (c + jd) = (a + c) + j(b + d)

And,

(a + jb) × (c + jd) = (ac − bd) + j(ad + bc).

For the complex number z = a + jb, a is the real part of z and b is the imaginary part of

z. The zero element of C is 0 + 0i, and the additive inverse of z = a + jb is –a + i (−b).

The multiplicative inverse z−1 is z−1 = a – jb / a2 + b2 .

The complex conjugate of z = a + jb is denoted by z̅ and is equal to a − jb. The

modulus of z, denoted by |z |, is √zz̅ = √ a2 + b2.

Some additional facts that will be used later are, ez = e(a+jb) = ea ejb and ejb = cos b + j sin b.

Thus, Re (ez) = ea cos b and Im (z) = ea sin b.

Just as a real number can be pictured as a point lying on a line, a complex number can be pictured as a point lying in a plane. With each complex number a+jb one can associate a vector beginning at the origin and terminating at the point (a, b). These notions are depicted in Figure 2.2.

Instead of the pair (a, b), one can use the “length” (modulus) together with the angle the number makes with the real axis. Thus, a+jb can be represented as r cos θ+ jr sin θ = rejθ, where r =|z | = √a2 + b2 and θ = arctan (b/a). This representation of a complex number is depicted in Figure 2.3.

Multiplication of complex numbers in polar form is straightforward: if z1 = a + jb = r1ejθ1 and

z2 = c + jd = r2ejθ2 , then z1z2= r1 r2e j (θ1+θ2).

The moduli are multiplied together, and the angles are added. Note that if z = ejθ, then |z |= 1 for all values of θ.

Some Mathematical and Computational Preliminaries

The development in section 2.1 showed that the computation of the DFT involves the multiplication of a matrix M̅ by a vector x, where the matrix has very special structure. In particular, it is symmetric, and each of its elements is a power of a single number ω, where ω depends on the order N = 2n+1 of the matrix. These numbers are called the twiddle factors. Moreover, since ω−l= ω−l±N, only N of the N2 entries in the matrix are actually different. Finally, since ω depends on N, in many contexts it will be necessary to distinguish ω’s corresponding to different values of N; to do this, the notation will be used.

Given these features, it is not surprising that when N is a power of two, the structure of the matrix can be exploited to reduce the cost2 of computing X from Θ(N2) resulting from a straightforward matrix times vector computation to Θ(N log2 N). Indeed, exploring the numerous variants of the fast Fourier transform (FFT) algorithm which exploit this structure is a main topic of this book. However, the price of this reduction is the use of complex arithmetic. This chapter deals with various aspects of complex arithmetic, together with the efficient computation of the twiddle factors. Usually the twiddle factors are computed in advance of the rest of the computation, although in some contexts they may be computed “on the fly.” This issue is explored more fully in Chapter 4 for implementing sequential FFTs and in Chapter 24 for implementing parallel FFTs.

Computing the Twiddle Factors

Let N be a power of 2, and recall that

ejrθ = cos(rθ) + j sin(rθ), θ=; j =

Since the ’s are the complex roots of unity, they are symmetric and equally spaced on the unit circle. Thus, if a+jb is a twiddle factor, then so are ±a±jb and ±b±ja. By exploiting this property, one needs only to compute the first N/8−1 values, namely for r = 1, . . . , N/8 − 1. (Note that = 1 for r = 0.)

The most straightforward approach is to use the standard trigonometric function library procedures for each of the N/8 − 1 values of cos(rθ) and sin(rθ). The cost will be N/4−2 trigonometric function calls, and each call will require several floating-point arithmetic operations. To avoid the relatively expensive trigonometric function calls, one can use the following algorithm proposed by Singleton [83]. It makes use of the trigonometric identities

cos(a + b) = cos a cos b − sin a sin b

cos a = cos(2 ) = 1 – 2sin2()

sin(a + b) = sin a cos b + sin b cos a

Letting θ = 2π/N as above, cos((r + 1)θ) can be computed in terms of cos(rθ) and sin(rθ) according to the formula derived below.

cos((r + 1)θ) = cos(rθ + θ) = cos(rθ) cos(θ) − sin(rθ) sin(θ)

= cos (rθ)(1-2sin2 θ/2) sin(rθ) sin(θ)

= C × cos(rθ) − S × sin(rθ) ,

where the constants C and S are

C =(1-2sin2 θ/2) and S = sin(θ).

When r = 0, the initial values are cos(0) = 1 and sin(0) = 0. Using these same

constants C and S, sin((r +1)θ) can be computed in terms of cos(rθ) and sin(rθ) in a

similar way:

sin ((r + 1)θ) = sin(rθ + θ)

= sin(rθ) cos(θ) + cos(rθ) sin(θ)

= cos (rθ)(1-2sin2 θ/2) + sin(rθ) sin(θ)

= S × cos(rθ) + C × sin(rθ) .

A pseudo-code program for computing the N/2 twiddle factors is given as Algorithm 2.1 below. Note that the array wcos stores the real part of the twiddle factors and the array wsin stores the imaginary part of the twiddle factors.

Sequential FFT Algorithms

The Divide-and-Conquer Paradigm and Two Basic FFT Algorithms

The computation of the DFT involves the multiplication of a matrix M̅ by a vector x, where the matrix has very special structure. FFT algorithms exploit that structure by employing a a divide-and-conquer paradigm. Developments over the past 30 years have led to a host of variations of the basic algorithm. In addition, the development of multiprocessor computers has spurred the development of FFT algorithms specifically tailored to run well on such machines. The purpose of this section is to introduce the main ideas of FFT algorithms. This will serve as a basis and motivation for the material presented in subsequent chapters, where numerous issues related to its efficient implementation are considered. The three major steps of the divide-and-conquer paradigm are

Step 1. Divide the problem into two or more subproblems of smaller size.

Step 2. Solve each subproblem recursively by the same algorithm. Apply the boundary

condition to terminate the recursion when the sizes of the subproblems are small enough.

Step 3. Obtain the solution for the original problem by combining the solutions to

the subproblems.

The radix-2 FFT is a recursive algorithm obtained from dividing the given problem (and each subproblem) into two subproblems of half the size. Within this framework, there are two commonly-used FFT variants which differ in the way the two half-size subproblems are defined. They are referred to as the DIT (decimation in time) FFT and the DIF (decimation in frequency) FFT, and are derived below. It is intuitively apparent that a divide-and-conquer strategy will work best when N is a power of two, since subdivision of the problems into successively smaller ones can proceed until their size is one. Of course, there are many circumstances when it is not possible to arrange that N is a power of two, and so the algorithms must be modified accordingly. Such modifications are dealt with in detail in later chapters. However, in this chapter it is assumed that N = 2n. Also, since the algorithm involves solving problems of different sizes, it is necessary to distinguish among their respective ω’s; ωq will refer to the ω corresponding to a problem of size q.

In addition, to simplify the presentation in the remainder of the book, and to avoid

notational clutter, two adjustments have been made in the notation in the sequel. First, the factor 1/ N has been omitted from consideration in the computations. This obviously does not materially change the computation. Second, ω has been implicitly redefined as ω̅ so the negative superscript can be omitted. Again, this does not change things in any material way, but does make the presentation somewhat cleaner. Thus, the equation actually studied in the remainder of this book is

(2.1) Xr = l ωrl , r = 0, 1, . . . , N – 1

The following identities involving the twiddle factors are used repeatedly in what follows.

(2.2) (ωN)N/2 = -1, ωN/2 = ωn2, =1

Radix-2 Decimation-In-Time (DIT) FFT

The radix-2 DIT FFT [13] is derived by first rewriting equation (2.1) as

(2.3) Xr = + ωrN+ 1, r = 0, 1, . . . ,N − 1.

Using the identity = from (2.2), (2.3) can be written as

(2.4) Xr =2k + ωrN 2k + 1, r = 0, 1, . . . ,N − 1.

Since =, it is necessary to compute only the sums for r = 0, 1, . . . , N/2 – 1

Thus, each summation in (2.4) can be interpreted as a DFT of size, the first involving the even-indexed set {x2k | k = 0, . . . , − 1}, and the second involving the odd-indexed set {x2k+1 | k = 0, . . . , −1}. (Hence the use of the term decimation in time.) Defining yk = x2k and zk = x2k+1 in (2.4) yields the two subproblems below, each having a form identical to (2.1) with N replaced by N/2:

(2.5) Yr = , r = 0, 1, . . . ,N − 1.

And

(2.6) Zr = , r = 0, 1, . . . ,N − 1.

After these two subproblems are each (recursively) solved, the solution to the original problem of size N is obtained using (2.4). The first N/2 terms are given by

(2.7) Xr = Yr + Zr , r = 0, 1, . . . ,N − 1.

and using the fact that ωN N/2 +r = − ωrN and ωN/2N/2 = 1, the remaining terms are given by

(2.8) Xr+N/2 = +

= –

= Yr – Zr , r = 0, 1, . . . ,N − 1.

Note that equations (2.7) and (2.8) can be applied to any problem of even size. Therefore, while they appear to represent only the last (combination) step, it is understood that when the problem size is a power of 2, the two subproblems defined by equations (2.5) and (2.6) (as well the subsequent subproblems of size ≥ 2) would have each been solved in exactly the same manner. The computation represented by equations (2.7) and (2.8) is commonly referred to as a Cooley Tukey butterfly in the literature, and is depicted by the annotated butterfly symbol in Figure 2.4 below.

Analyzing the arithmetic cost

Let T(N) be the arithmetic cost of computing the radix-2 DIT FFT of size N, which implies that computing a half-size transform using the same algorithm costs T(N/2). In order to set up the recurrence equation, one needs to relate T(N) to T(N/2). According to (2.7) and (2.8), N complex additions and N/2 complex multiplications are needed to complete the transform, assuming that the twiddle factors are pre-computed as suggested in Section 2.1. Recall that that one complex addition incurs two real additions according to (2.1), and one complex multiplication (with pre-computed intermediate results involving the real and imaginary parts of a twiddle factor) incurs three real multiplications and three real additions according to (2.4).

Therefore, counting a floating-point addition or multiplication as one flop, 2N flops are incurred by the N complex additions, and 3N flops are incurred by the N 2 complex multiplications. In total, 5N flops are needed to complete the transform after the two half-size subproblems are each solved at the cost of T(N/2). Accordingly, the arithmetic cost T(N) is represented by the following recurrence.

(2.9) T(N) =

Comparing (2.9) with (2.6) and using (2.7) leads to the following expression for the

Arithmetic cost: (2.10) T(N) = 5N log2 N .

Multicore Architecture

Four parameters are of paramount importance when evaluating the relevance of emerging computational platforms for time-critical, embedded applications. They are computational speed, communication speed (the I/O and inter-processor data transfer rates), the power dissipated (measured in pico-Joules (pJ) per floating point operation), and the processor footprint. For each of these parameters, one can compare the performance of an algorithm for different hardware platforms and software (e.g. compilers) tools. Multicore processors of particular relevance for many computationally intensive maritime sensing applications include the IBM Cell [5], the Coherent Logix HyperX [7], and the NVIDIA Tesla, Fermi, Ion and Tegra devices [6]. In this paper, we focus on the IBM Cell.

The Cell Broadband EngineTM

The Cell multicore architecture is the product of five years of intensive R&D efforts undertaken in 2000 by IBM, Sony, and Toshiba [5]. The results reported in this paper refer to the PXC 8i (third generation) release of the processor, implemented on QS 22 blades that utilize an IBM BladeCenterTM H. The PXC 8i model includes one multi-threaded 64-bit PowerPC processor element (PPE) with two levels of globally coherent cache, and eight SPEs. Each SPE consists of a processor (synergistic processing unit (SPU)) designed for streaming workloads, local memory, and a globally coherent Direct Memory Access (DMA) engine. Emphasis is on SIMD processing. An integrated high-bandwidth element inter-connect bus (EIB) connects the nine processors and their ports to external memory and to system I/O. The key design parameters of the PXC 8i are as follows [15]. Each SPE comprises an SPU having a peak performance of 25.6 GFLOP (single precision) for a 3.2 GHz clock, a 256 kB local store (LS) memory, a memory flow controller (MFC) with a non-blocking DMA engine, and 128 registers, each 128-bit wide to enable SIMD-type exploitation of data parallelism. The EIB

provides 2×25.6GB/s memory bandwidth to each SPE LS, and allows external communication (I/O) up to 35GB/s (out), 25GB/s (in). The PPE has a 64-bit RISC PowerPC architecture, a 32 kB L1 cache, a 512 kB L2 cache, and can operate at clock rates in excess of 3 GHz. It includes a vector multimedia extension (VMX) unit. The total power dissipation is estimated nominally around 125W per node (not including system memory and NIC). The total peak throughput, in single precision, exceeds 200 GFLOPS per Cell processor, for a total communication bandwidth above 204.8GB/s. This Cell architecture is illustrated in Figure 2.5. Further details on the design parameters of the PXC 8i are well documented [14–17] and will not be repeated here. Note that both FORTRAN 2003 and C/C++ compilers for multi-core acceleration under Linux (i.e. XLF and XLC) are provided by IBM.

The HyperX hx3100 processor

In order to contrast its architecture with that of the better known Cell, we now highlight some of the key features of the HyperXTM processor. Because of its ultra-low power consumption, the HyperX is a very strong contender not only for maritime sensing computations, but also for those applications that can substantially benefit from an MIMD capability in conjunction with real-time reconfigurability.

HyperX architectural overview

The hx3100 processor is the latest entry in the HyperX family of ultra-low power, massively parallel processors produced by Coherent Logix, Inc. [7]. The hx3100 processor is a 10-by-10 array of processing elements (PEs) embedded on an 11-by-11 array of data management and routing units (DMRs). It is illustrated in Figure 2.6. At a system clock frequency of 500 MHz, the maximum chip-level throughput for 32-bit floating-point operations is 25 GFLOPS. Alternatively, when power consumption is prioritized, performance can be measured for the current release as 16 GFLOPS/W [7]. This translates into energy consumption on the order of 10 pJ per instruction, which rivals the performance of dedicated ASIC designs.

The DMR network provides DMA for the PEs to both on-chip and off-chip memory. Each

DMR has 8 kB of SRAM, and operates at a read/write cycle rate of 500 MHz, while the eight

independent DMA engines within a DMR may act in parallel. A PE directly accesses data memory in four neighboring DMRs, such that 32 kB of data is addressable by any given PE. In addition, when a subset of PEs shares direct access to the same DMR, the associated memory space may act as shared memory between PEs. This inter-connectedness provides for a mixture of shared and distributed memory hierarchies. Each DMR consists of 8 16-bit DMA engines that route memory requests from neighboring PEs and support routing memory requests managed by other DMRs. In addition to supporting on-chip DMAs, the DMRs handle requests to off-chip

fig. 2.6 The Coherent Logix hx3100 processor, a 10-by-10 array of processing elements (PEs) interleaved by an 11-by-11 array of data management and routing units (DMRs). An expanded view of four Pes surrounded by nine DMRs demonstrates the degree of connectivity. HyperIO references the input/output ports used by the hx3100 processor to communicate with off-chip memory or other hx3100 processors.

memory, including the eight DDR2 DRAM ports. Moreover, the 24 IO ports surrounding the chip (six per side) can be wired to connect together multiple HyperX chips.

Integrated software development environment

Programming the HyperX entails writing an ANSI C program, which defines the parallelism in the algorithm through the use of the industry standard Message Passing Interface (MPI) protocol. Note that, contrary to the IBM Cell or the Nvidia Tesla, no FORTRAN 2003 compiler for high performance numerical computations is yet available. The Coherent Logix integrated software tools automatically assign individual tasks to PEs, and create routing to support the movement of data between PEs. Tasks may be both parallelized and pipelined across multiple cores, while DMAs are controlled explicitly in software. The latter steps provide opportunities for designing the flow of program execution to meet resource constraints and programming requirements.

Power management

The current version of the HyperX architecture provides the capability to power down quadrants of the PE grid that are unneeded by a designed application. As a result, programs can be optimized with respect to energy usage (of the chip) as well as the computational speed and memory bandwidth usage. Wake-up signals can be triggered by external events, such that the processor may be shutdown during periods of computational idle time.

Designing Fast Fourier Transform of the IBM Cell Multi SIMD Processor

The Cell Broadband Engine (or the Cell/B.E.) [14, 16, 17] is a novel high-performance architecture designed by Sony, Toshiba, and IBM (STI), primarily targeting multimedia and gaming applications. The Cell/B.E. con- sists of a traditional microprocessor (called the PPE) that controls eight SIMD co-processing units called synergistic processor elements (SPEs), a high-speed memory controller, and a high-bandwidth bus interface (termed the element interconnect bus, or EIB), all integrated on a single chip. The Cell is used in Sony’s PlayStation 3 gaming console, Mercury Computer System’s dual Cell-based blade servers, IBM’s QS20 Cell Blades, and the Roadrunner super- computer.

In this chapter we present the design of an efficient parallel implementation of Fast Fourier Transform (FFT) on the Cell/B.E. FFT is of primary impor-tance and a fundamental kernel in many computationally intensive scientific applications such as computer tomography, data filtering, and fluid dynamics. Another important application area of FFTs is in spectral analysis of speech, sonar, radar, seismic, and vibration detection. FFTs are also used in digital filtering, signal decomposition, and in solution of partial differential equations. The performance of these applications relies heavily on the availability of a fast routine for Fourier transforms.

In our design of Fast Fourier Transform on the Cell (FFTC) we use an iterative out-of-place approach to solve 1D FFTs with 1K to 16K complex input samples. We describe our methodology to partition the work among the SPEs to efficiently parallelize a single FFT computation where the source and output of the FFT are both stored in main memory. This differentiates our work from the prior literature and better represents the performance that one realistically sees in practice. The algorithm requires synchronization among the SPEs after each stage of FFT computation. Our synchronization barrier is designed to use inter SPE communication without any intervention from the PPE. The synchronization barrier requires only 2 log p stages (p: number of SPEs) of in- ter SPE communication by using a tree-based approach. This significantly im- proves the performance, as PPE intervention not only results in a high commu- nication latency but also in sequentialization of the synchronization step. We achieve a performance improvement of over 4 as we vary the number of SPEs from 1 to 8. We attain a performance of 18.6 GFLOP/s for a single-precision FFT with 8K complex input samples and also show significant speedup in comparison with other architectures. Our implementation is generic for this range of complex inputs.

This chapter is organized as follows. We first describe FFT and the algorithm we choose to parallelize in Section 3.2. The novel architectural features of the Cell processor are reviewed in Section 3.3. We then present our design to parallelize FFT on the Cell and optimize for the SPEs in Section 3.4.

Fast Fourier Transform

FFT is an efficient algorithm that is used for computing the DFT. Some of the important application areas of FFTs have been mentioned in the previous section. There are several algorithmic variants of the FFTs that have been well studied for parallel processors and vector architectures.

In our design we utilize the naive Cooley-Tukey radix-2 Decimate in Frequency (DIF) algorithm. The pseudo-code for an out-of-place approach of this algorithm is given in Algorithm 3.1 in Appendix). The algorithm runs in log N stages and each stage requires O (N) computation, where N is the input size.

The array w contains the twiddle factors required for FFT computation. At each stage the computed complex samples are stored at their respective locations thus saving a bit-reversal stage for output data. This is an iterative algorithm which runs until the parameter problemSize reduces to 1. Fig. 3.1 shows the butterfly stages of this algorithm for an input of 16 sample points (4 stages).

Apart from the theoretical complexity, another common performance metric used for the FFT algorithm is the floating-point operation (FLOP) count. On analyzing the sequential algorithm, we see that during each iteration of the innermost for loop there is one complex addition for the computation of first output sample, which accounts for 2 FLOPs. The second output sam- ple requires one complex subtraction and multiplication which accounts for 8 FLOPs. Thus, for the computation of two output samples during each inner- most iteration we require 10 FLOPs, which suggests that we require 5 FLOPs for the computation of a complex sample at each stage. The total compu- tations in all stages are N log N which makes the total FLOP count for the algorithm as 5N log N .

Cell Broadband Engine Architecture

The Cell/B.E. processor is a heterogeneous multicore chip that is significantly different from conventional multiprocessor or multicore architectures. It consists of a traditional microprocessor (the PPE) that controls eight SIMD co-processing units called SPEs, a high-speed memory controller, and a highbandwidth bus interface (termed the EIB), all integrated on a single chip. Fig. 3.2 gives an architectural overview of the Cell/B.E. processor. The PPE runs the operating system and coordinates the SPEs. It is a 64- bit PowerPC core with a vector multimedia extension (VMX) unit, 32 KB L1 instruction and data caches, and a 512 KB L2 cache. The PPE is a dual-issue, in-order execution design, with two-way simultaneous multithreading. Ideally, all the computation should be partitioned among the SPEs, and the PPE only handles the control flow.

Each SPE consists of a synergistic processor unit (SPU) and a memory flow controller (MFC). The MFC includes a DMA controller, a memory management unit (MMU), a bus interface unit, and an atomic unit for synchronization with other SPUs and the PPE. The SPU is a micro-architecture designed for high performance data streaming and data-intensive computation. It includes a 256 KB local store (LS) memory to hold the SPU program’s instructions and data. The SPU cannot access main memory directly, but it can issue DMA commands to the MFC to bring data into the LS or write computation results back to the main memory. DMA is non-blocking so that the SPU can continue program execution while DMA transactions are performed. The SPU is an in-order dual-issue statically scheduled architecture. Two SIMD [18] instructions can be issued per cycle: one compute instruction and one memory operation. The SPU branch architecture does not include dynamic branch prediction, but instead relies on compiler-generated branch hints using prepare-to-branch instructions to redirect instruction prefetch to branch targets. Thus branches should be minimized on the SPE as far as possible. The MFC supports naturally aligned transfers of 1, 2, 4, or 8 bytes, or a multiple of 16 bytes to a maximum of 16 KB. DMA list commands can request a list of up to 2,048 DMA transfers using a single MFC DMA command. Peak performance is achievable when both the effective address and the local storage address are 128 bytes aligned and the transfer is an even multiple of 128 bytes. In the Cell/B.E., each SPE can have up to 16 outstanding DMAs, for a total of 128 across the chip, allowing unprecedented levels of parallelism in on-chip communication.

With a clock speed of 3.2 GHz, the Cell processor has a theoretical peak performance of 204.8 GFlop/s (single precision). The EIB supports a peak bandwidth of 204.8 GB/s for intra-chip transfers among the PPE, the SPEs, and the memory and I/O interface controllers. The memory interface controller (MIC) provides a peak bandwidth of 25.6 GB/s to main memory. The I/O controller provides peak bandwidths of 25 GB/s inbound and 35 GB/s outbound.

FFT Algorithm for the Cell/B.E. Processor

There are several architectural features that make it di_cult to optimize and parallelize the Cooley-Tukey FFT algorithm on the Cell/B.E. The algorithm is branchy due to presence of a doubly nested for loop within the outer while loop. This results in a compromise on the performance due to the absence of a branch predictor on the Cell. The algorithm requires an array that consists of the N=2 complex twiddle factors. Since each SPE has a limited local store of 256 KB, this array cannot be stored entirely on the SPEs for a large input size. The limit in the size of the LS memory also restricts the maximum input data that can be transferred to the SPEs. Parallelization of a single FFT computation involves synchronization between the SPEs after every stage of the algorithm, as the input data of a stage are the output data of the previous stage. To achieve high performance it is necessary to divide the work equally among the SPEs so that no SPE waits at the synchronization barrier. Also, the algorithm requires logN synchronization stages which impact the performance.

It is diffcult to vectorize every stage of the FFT computation. For vectorization of the first two stages of the FFT computation it is necessary to shuffle the output data vector, which is not an efficient operation in the SPE instruction set architecture. Also, the computationally intensive loops in the algorithm need to be unrolled for best pipeline utilization. This becomes a challenge given limited LS on the SPEs.

Parallelizing FFTC for the Cell

As mentioned in the previous section for best performance it is important to partition work among the SPEs to achieve load balancing. We parallelize by dividing the input array held in main memory into 2p chunks, each of size N/2p , where p is the number of SPEs. During every stage, SPE i is allocated chunk i and i + p from the input array. The basis for choosing these chunks for an SPE lies in the fact that these chunks are placed at an offset of N=2 input elements. For the computation of an output complex sample we need to perform complex arithmetic operations

between input elements that are separated by this offset. Fig. 3.3 gives an illustration of this approach for work partitioning among 8 SPEs.

The PPE does not intervene in the FFT computation after this initial work allocation. After spawning the SPE threads it waits for the SPEs to finish execution.

Optimizing FFTC for the SPEs

After dividing the input array among the SPEs, each SPE is allocated two chunks each of size N/2p . Each SPE fetches this chunk from main memory using DMA transfers and uses double buffering to overlap memory transfers with computation. Within each SPE, after computation of each buffer, the computed buffer is written back into main memory at offset using DMA transfers.

The detailed pseudo-code is given in Alg. 3.2 in appendix. The first two stages of the FFT algorithm are duplicated that correspond to the first two iterations of the outer while loop in sequential algorithm. This is necessary as the vectorization ofthese stages requires a shuffle operation (spu_shuffle()) over the output to rearrange the output elements to their correct locations.In Fig.3.4 for an illustration of this technique for stages 1 and 2 of the FFT computation. The innermost for loop (in the sequential algorithm) can be easily vectorized for NP > 4 that corresponds to the stages 3 through logN. However, it is important to duplicate the outer while loop to handle stages where NP <buffersize, and otherwise. The global parameter buffersize is the size of a single DMA get buffer. This duplication is required as we need to stall for a DMA

transfer to complete, at different places within the loop for these two cases.We also unroll the loops to achieve better pipeline utilization. This significantly increases the size of the code thus limiting the unrolling factor. SPEs are synchronized after each stage, using inter-SPE communication.

This is achieved by constructing a binary synchronization tree, so that synchronization

is achieved in 2 log p stages. The synchronization involves the useof inter-SPE mailbox communication without any intervention from the PPE.

Performance Analysis of FFTC

For compiling, instruction-level profiling, and performance analysis we use the IBM Cell Broadband Engine SDK 3.0.0-1.0, gcc 4.1.1 with level 3 optimization. From `/proc/cpuinfo’ we determine the clock frequency as 3.2 GHz with revision 5.0. We use gettimeofday () on the PPE before computation on the SPE starts, and after it finishes. For profiling measurements we iterate the computation 10,000 times to eliminate the noise of the timer.

For parallelizing a single 1D FFT on the Cell, it is important to divide the work among the SPEs. Fig. 3.4 shows the performance of our algorithm with varying the number of SPEs for 1K and 4K complex input samples. Our algorithm obtains a scalability of about 4x with 8 SPEs.

Our design requires barrier synchronization among the SPEs after each stage of the FFT computation. We focus on FFTs that have from 1K to 16K complex input samples. For relatively small inputs and as the number of SPEs increases, the synchronization cost becomes a significant issue since the time per stage decreases but the cost per synchronization increases. With instruction level profiling we determine that the time required per synchronization stage using our tree-synchronization barrier is about 1 microsecond (3,200 clock cycles). We achieve a high-performance barrier using inter-SPE mailbox communication which significantly reduces the time to send a message, and by using the tree-based technique we reduced the number of communication stages required for the barrier (2 log p steps).

POWER5 processors.

Fig. 3.5 shows the single-precision performance for complex inputs of FFTC, our optimized FFT, as compared with the following architectures:

1. IBM Power 5: IBM OpenPower 720, Two dual-core 1.65 GHz

2. AMD Opteron: 2.2 GHz Dual Core AMD Opteron Processor 275.

3. Intel Core Duo: 3.0 GHz Intel Xeon Core Duo (Woodcrest), 4MB L2 cache.

4. Intel Pentium 4: Four-processor 3.06 GHz Intel Pentium 4, 512 KB L2.

We use the performance numbers from benchFFT [19] for the comparison with the above architectures. We consider the FFT implementation that gives best performance on these architectures for comparison.

In summary, we present FFTC, our high-performance design to parallelize the 1D FFT on the Cell/B.E. processor. FFTC uses an iterative out-of-place approach and we focus on FFTs with 1K to 16K complex input samples. We describe our methodology to partition the work among the SPEs to efficiently parallelize a single FFT computation. The computation on the SPEs is fully

vectorized with other optimization techniques such as loop unrolling and double buffering. The algorithm requires synchronization among the SPEs after each stage of FFT computation. Our synchronization barrier is designed to use inter-SPE communication only without any intervention from the PPE.

The synchronization barrier requires only 2 log p stages (p: number of SPEs) of inter-SPE communication by using a tree-based approach. This significantly improves the performance, as PPE intervention not only results in high communication latency but also results in sequentializing the synchronization step.

We achieve a performance improvement of over 4 as we vary the number of SPEs from 1 to 8. We also demonstrate FFTC’s performance of 18.6 GFlop/s for an FFT with 8K complex input samples and show significant speedup in comparison with other architectures. Our implementation outperforms Intel Core Duo (Woodcrest) for input sizes greater than 2K and to our knowledge we have the fastest FFT for these ranges of complex input samples.

Chapter 4

Implementing FFT on multicore architecture

This chapter demonstrates how a traditional algorithm, Fast Fourier Transform (FFT), can exploit typical parallel resources on multicore architecture platforms to achieve near-optimal performance. FFT algorithms are of great importance to a wide variety of applications ranging from large-scale data analysis and solving partial di_erential equations, to the multiplication of large integers. The fundamental form of FFT can be traced back to Gauss (1777-1855) and his work in the early 19th century [20]. After computers emerged in the mid-20th century, this computationally efficient algorithm was rediscovered [13] and subsequently mapped to different modern computer architectures.

Modern processors and their Single Instruction Multiple Data (SIMD) architectures allow a programmer to efficiently execute massively data-parallel algorithms. The FFT algorithm is data-parallel in nature. But its data access transactions require a programmer to arrange input data and intermediate results in specific patterns to avoid unnecessary data movement. The associated computations also need to be properly scheduled to avoid unnecessary memory load and store operations [21, 22, 23]. Finding an optimal FFT algorithm for a given architecture is non-trivial. The scheduling of computation and data movement in order to reduce data movement overhead (e.g. register spill) is an NP-hard problem. With the advent of multicore architectures, the additional levels of memory hierarchy make it even harder to find an optimal FFT implementation.

This chapter first reviews the fundamentals of the FFT algorithm including its computation, data movement and data preparation. Computations are the numeric operations required in an FFT algorithm. Data movement refers to the operations that move the data between the different data storage locations in the system. Data preparation refers to the steps required to organize the data for efficient utilization by the computation units. These three components provide the framework for high-performance design of FFTs. Each of these components are then considered with respect to each level of a typical multicore memory hierarchy including the register file memory, private core memory, shared core memory and system memory. Each memory level has different considerations for computation, data movement and data preparation. Particular FFT optimization techniques for each aspect are explained.

These techniques have been proven to provide significant performance improvement in many FFT implementations. The same paradigm can be similarly applied to the development of other types of multicore applications. The principles of automatic FFT generation leverage the strategies for efficient design of an FFT over the available computational resources. And finally, a case study of a large 3-D FFT across a large cluster of multicore systems is presented. Because of the many multicore hardware features available in the Cell Broadband EngineTM (Cell/B.E.) architecture [24], the Cell/B.E. processor is frequently referenced to demonstrate typical parallel hardware resources and constraints existing in modern multicore architectures.

Computational Aspects of FFT Algorithms

An FFT is an efficient algorithm for computing discrete Fourier tranforms (DFTs). The size-N DFT is defined by the formula below where x0, x1, … xN-1 are complex numbers.

Xk = k = 0,…, N-1 (4.1)

In the case of radix-2, decimation-in-time, FFT, the DFT formula is reexpressed

as two size-N/2 DFTs from the even-number-indexed elements (Ej)

and the odd-number-indexed elements (Oj).

Because of the periodicity of the root of unity, it holds true that Ek-M = Ek and Ok–M = Ok. This fact provides a recursive way to divide and conquer the original O(N2) DFT computational complexity and yield an O(Nlog2N) complexity. The two size-N/2 DFTs come from the even and odd elements, respectively, along the time series. Such FFTs are called a decimation-in-time (DIT). Another form of FFT is decimation-in-frequency (DIF) [20] which has exactly the same computational complexity.

Regardless of the choice of FFT, DIT or DIF, the computation consists of repeatedly applying a simple computational kernel over an array of elements as defined by equation (4.2). This computation kernel is customarily represented as a buttery dataow graph as shown in Figure 4.1. By combining these butteries with their complex roots of unity W (traditionally referred to as

twiddle factors [22]), a complete FFT is achieved.

FFT Performance

Based on the computation counts of all butteries, an upper bound on the performance for a particular target system can be established. Even though there are several choices for near-optimal FFT algorithms with different numbers of operations no proof is yet known for the mathematical question of what is the minimal number of oating-point operations (FLOPS)

required to compute a DFT of a given size N.

For modern multicore processors which support fused multiply-add (FMA) instructions, there is a good choice. The simplest radix-2 FFT algorithm not only offers the highest usage of FMA instructions, its simplicity in implementation also serves as a good vehicle to demonstrate a practical optimization approach for multicore platforms [25]. The basic DIT radix-2 buttery is used as the building block for most implementations, where (a, b) is the input, (C, D) is the output and Wi is the twiddle factor. Without FMA instructions, a buttery requires 10 oatingpoint

real number add or multiply operations. With FMA instructions, the buttery is reduced to 6 oating-points multiply-add operations.

Dr = (ar – br * ) – bi * (4.3)

Di = (ai – bi * ) – br * (4.4)

Cr = 2.0 * ar – Dr (4.5)

Ci = 2.0 * ai – Di (4.6)

Subscripts r and i denote real and imaginary parts of a complex number, respectively.

Data Movement and Preparation of FFT Algorithms

Another aspect that affeects the FFT performance is the overhead of moving and preparing data for the butteries. Such overhead is not directly expressed in the formulas in equation (4.2) and has often been ignored by many FFT studies.

Because the size of the input array of an FFT is usually larger than that of the processor’s register file, the data have to spill over more than one level of memory hierarchy. Moving data among the levels of the memory hierarchy is inevitable. More levels of memory hierarchy exist in multicore platforms than those of traditional single-core platforms. This data movement overhead can affect final performance more than the computational portion on many of today’s computer systems.

A good algorithm not only needs to minimize the frequency of unnecessary data movement, it also needs to make data movement as efficient as possible. Usually, the algorithm must match the memory granularity defined by the hardware, placing additional restrictions on the data movement design. Even when data are not transferred from one memory level to another, their location in the storage may not match what is required by the computation unit. For example, a typical single-precision SIMD operation requires four adjacent data elements to be operated by the same SIMD instruction. The sequential layout is not always the case for FFT arrays.

Figure 4.2 shows the dataow graph of a small size-8 DIT FFT where are the twiddle factors. The graph illustrates one of the many ways to store the data array between stages. In this particular example, the index of the final output array is bit-reversed. This results from the ordering of the sub-FFT computations and also the recursive decimation of the even and odd parts of the sub-arrays. SIMD instructions likely cannot operate over such a decimated array without preparation of the data into a SIMD-consumable format.

Finding the most effective data movement and proper data layout for FFT is similar to the problem a compiler uses to map a directed acyclic graph (DAG) onto a finite number of registers while avoiding register spills. It has been demonstrated that, if the DAG is not ordered, this is an NP-hard problem [26]. With the many buttery computations, their input and output data and dependencies and a set of hardware constraints, it is not surprising that FFT designers findnd it difficult to fully optimize their implementations over multicore platforms.

Proper scheduling of the data movement along the memory hierarchy and hardware-friendly data preparation are, in fact, two of the most rewarding steps in the algorithm design. This chapter discusses techniques and algorithms in these two areas. The same performance design principles can scale from instruction level, up to single core level and up to multicore and multinode

systems.

Multicore FFT Performance Optimization

Because data movement is a significant factor affecting performance, it is important to optimize the FFT stages according to the system’s memory hierarchy. At each level in the hierarchy, one should exploit all available hardware parallelism and at the same time minimize the effect of data access to the next level of memory hierarchy. By using this heuristic approach, one can achieve a

performance close to the upper bound previously established. In a sense, the design process schedules the DAG and reduces the complexity of the design problem from NP-hard to linear while ensuring that the final performance is nearly optimal.

One hardware parallelism technique proven to be effective is exploiting concurrency between the computational operations and data movement operations. Such a feature is common in modern CPU design. By allowing the core to move data while computing on another block of data, the overhead of data movement can be mostly hidden behind the time the computational instructions

are being executed. Effectively, this results in virtually no overhead for data movement and near-optimal performance is achieved. Such concurrency exists in all levels of the memory hierarchy of Cell/B.E. architecture [24]. At the register level, the Synergistic Processing Element (SPE) load and store instructions are executed on an instruction pipeline which is different from the one used by the computational instructions. These two activities can occur at the same time. At the private core memory level (e.g. local store), the direct memory access engine can transfer blocks of data to and from system memory at the same time the SPEs are executing their programs. While all these activities are occurring, the PowerPC core can fur- ther transfer data between the network and system memory without affecting computation and data movements at the other levels.

For large FFTs that cannot completely fit into one level of memory, the principle of general factorizations of the Cooley-Tukey FFT algorithm can be applied to allow the larger FFT to be divided into a set of smaller sub-FFTs for execution on the specific memory hierarchy level. Because the computational complexity of FFT is O(Nlog2N), the larger the N that can execute

on a particular level, the less data, O(1/log2N), that need to transferred per computation unit. Therefore, it is best to execute as large an FFT as possibleon each memory level.

To benchmark our performance, we have also implemented the FFT alorithm in pMatlab (pMatlab version is pMatlab_v2.0.1). Both coding and optimization steps for efficiently implementing the FFT algorithms are discussed briefly in appendix. Table 4.1 shows the

multicore performance on an eight-core system. The first test is to compare the performance of running the program using NP = 1 with distributed arrays turned off (PARALLEL=0) and distributed arrays turned on (PARALLEL=1). Here, NP is the number of copies or instances of the running program. Columns 2 and 3 in Table 4.1 provide this data. In this case, the performance differences are negligible and indicate that distributed arrays

Absolute launch time, relative allocation, computation, communication, and run times, as well as the relative performance for the FFT benchmark using an eight-core processor. All values are normalized to column 2 in which NP = 1 with the distributed arrays turned off (PARALLEL=0). Columns 3-6 are the values with distributed arrays turned on (PARALLEL=1). The notation 1*8 means that NP = 8, and all PID s were run on the same processing node with the same shared memory. Each program is assigned a unique processor ID which is denoted PID, which ranges from 0 to NP – 1.

| Multicore processors: | 1 | 1*1 | 1*2 | 1*4 | 1*8 |

| Launch time (sec) | 0 | 0.0 | 1.23 | 1.80 | 1.57 |

| Relative allocation time | 1 | 1.0 | 0.79 | 0.54 | 0.42 |

| Relative compute time | 1 | 1.0 | 0.41 | 0.25 | 0.07 |

| Relative comm time | 0.99 | 0.33 | 0.30 | ||

| Relative execution time | 1 | 1.0 | 1.41 | 0.58 | 0.37 |

| Relative performance | 1 | 1.0 | 0.71 | 1.72 | 2.70 |

| Relative bandwidth | 1 | 3.00 | 3.30 |

Table 4.1 Multicore FFT performance.

If using distributed arrays with NP = 1 slows things down significantly, then this must be addressed. It is very difficult to get any benefit with NP > 1 if the NP = 1 case is slower than the base serial code. Working only on the local parts of distributed arrays almost guarantees the performance will be the same as when distributed arrays are not being used. Columns 3-5 of Table 4.1 show the parallel performance of the code on a multicore system. The launch time row shows a generally increasing launch time with NP. Ideally, it would be a constant value that is independent of NP. However, depending upon the underlying launch mechanism, this time can be an increasing function of NP. Typically, launch time will not be a large fraction of the overall computation, but it can easily become significant when NP is large. For example, suppose launch time was proportional to NP and each additional PID added 1 second. If NP = 100, then the launch time would be 100 seconds. If this is the Amdahl fraction of the program, then the program will need to run for at least N2P seconds in order to achieve a 50x speedup over the serial case. The relative allocation time row shows that allocation generally decreases with increasing NP because each PID need only allocate N= NP data elements. The measured decrease in allocation time is not linear with NP because there are some overheads associated with allocation. Typically, allocation time will not be a large fraction of the overall computation. However, for cases in which NP is large, these overheads can become important, and so it is always good to measure allocation time and make sure it is reasonable. The relative compute time generally decreases linearly with NP because each core is working on a smaller problem. The relative communication time is computed with respect to the NP = 1 compute time. Thus, for NP = 2, the relative communication

ime is equal to the compute time, resulting in a net decrease in overall performance compared to the NP = 1 case. As NP increases, the communication time decreases and a net decrease in the relative compute time is observed. The relative performance row indicates that a speedup of 2.7 with NP = 8 is achieved. This is not unexpected given the large amount of communication that is incurred. In some instances, this performance improvement might be advantageous; in other circumstances, it may not be worth the added programming complexity. In general, the parallel FFT often falls in this middle performance regime. The relative bandwidth row shows that as NP increases, the bandwidth among the processors increases. In fact, increasing the NP by a factor of 4 (i.e., going from NP = 2 to NP) results in a bandwidth increase of 3.3. This increase in bandwidth with NP is the only reason that the FFT achieves any parallel performance. If the bandwidth did not improve, then the overall communication time would remain nearly the same as the NP = 1 computation time.

The techniques presented in this chapter for FFTs are applicable to other multicore applications.

The new parallel resources of the multicore architectures introduce a new programming challenge that has not been well supported by today’s traditional programming languages. New language constructs are required for developers to specify performance design knowledge. Such knowledge is critical for a multicore compiler to map an algorithm and schedule operations effectively

on the available computational resources. A successful implementation depends upon a deep understanding of the data access patterns, computation properties and available hardware resources. Applications like FFTs with regular data access and computation patterns can readily take advantage of generalized performance planning techniques to produce successful implementations across a wide variety of multicore architectures.

Conclusion

In this paper we have proposed a multi-dimensional high performance parallel FFT algorithm which is implemented in pMATLAB. The research contribution of this thesis provides a new parallel implementation of the FFT. This implementation was coded in MATLAB. This application can be used when a fast FFT computation needs to be done. The results are promising, but the speedup when using two or six processors has not yet been shown to provide a significant increase.

Long term perspectives

Long term perspectives include a system where in the user can improve the performance of the FFT by increasing the number of processors. This would require using all of the processors with a load-balancing technique. The general FFT program works with any number of input points. In order to achieve better timings, generators can be implemented which will create fewer lines of code for small values of N and thus give better timings. All well known FFT developers in the market currently use generators.

References

[1] C. Van Loan, Computational Frameworks for the Fast Fourier Transform, SIAM Press (1992).

[2] D. H. Bailey, “Computational Frameworks for the Fast Fourier Transform.”, SIAM Review, 35(1), 142-143 (1993).

[3] J. Barhen et al. “Vector-Sensor Array Algorithms for Advanced Multicore Processors.”, US Navy Journal of Underwater Acoustics (in press, 2010).

[4] H. L. Van Trees, Detection, Estimation, and Modulation Theory, Part I, Wiley (1968); Part III, Wiley (1971); ibid, Wiley.–Interscience (2001).

[5] J. A. Kahle et al. “Introduction to the Cell multi-processor.”, IBM Journal of Research and Development, 49(4-5), 589 604 (2005).

[6] www.nvidia.com

[7] M. Stolka (Coherent Logix), personal communication; see also: www.coherentlogix.com

[8] http://www.bridgeport.edu/sed/projects/cs597/Summer_2002/kunhlee/index/,

online link [dated Summer 2002].

[9] http://www.fftw.org, online link [dated August 10, 2008].

[10] http://spiral.net/hardware/dftgen/, online link [dated August 12, 2008].

[11] P. Lee and F. Y. Huang, Restructured Recursive DCT and DST Algorithms, IEEE

Trans. Signal Processing, v. 42 (7), pp. 1600-1609, 1994.

[12] S. Jenkins “MIMO/OFDM Implement OFDMA, MIMO for WiMAX, LTE”, picoChip <http://www.eetasia.com/ARTICLES/2008MAR/PDF/EEOL_2008M AR17_RFD_NETD _TA.pdf.

[13] J. W. Cooley and J. W. Tukey. An algorithm for the machine calculation of

complex Fourier series. Math. Comp., 19:297–301, 1965.

[14] G. Bruun. z-transform DFT _lters and FFT’s. Acoustics, Speech and

Signal Processing, IEEE Transactions on, 26(1):56{63, Feb 1978.

[15] Vogt JS et al. IBM BladeCenter QS22: Design, performance, and utilization in hybrid computing systems. IBM Journal of Research and Development 2009; 53(5). See also IBM Systems and Technology Group. IBM BladeCenter QS22, BLD03019-USEN-02. IBM Corporation, 2008.

[16] IBM Corporation. Cell Broadband Engine technology. http://wwwalphaworks.ibm.com/topics/cell.

[17] IBM Corporation. The Cell project at IBM Research. http://www.

research.ibm.com/cell/home/.

[18] A.C. Chow, G.C. Fossum, and D.A. Brokenshire. A Programming Example: Large FFT on the Cell Broadband Engine. Tech. Conf. Proc. of the Global Signal Processing Expo (GSPx), 2005.

[19] M. Frigo and S.G. Johnson. FFTW on the Cell Processor. http://www. fftw.org/cell/index/, 2007.

[20] M.T. Heideman, D.H. Johnson, and C.S. Burrus. Gauss and the History of the Fast Fourier Transform. IEEE ASSP Magazine, October 1984.

[21] D.H. Bailey. FFTs in external or hierarchical memory. Journal of Supercomputing,

vol. 4, no. 1, 1990, pages 23-35.

[22] W.M. Gentleman and G. Sande. Fast Fourier transforms for fun and profit. Proc. AFIPS, vol. 29, 1966, pages 563-578.

[23] R.C. Singleton. On computing the fast Fourier transform. Communications of the ACM, vol. 10, 1967, pages 647-654.

[24] IBM Corporation. 2007. Cell Broadband Engine Architecture specification. web: www.ibm.com/chips/techlib/techlib.nsf/products/Cell Broadband Engine

[25] H. Karner, M. Auer, and C.W. Ueberhuber. Optimum Complexity FFT Algorithms for RISC Processors. Technology Report AURORA TR1998- 03, Institute for Applied and Numerical Mathematics, ViennaUniversity of Technology, 1998.

[26] R. Motwani, K.V. Palen, V. Sarkar, and S. Reyen. Combining Register Allocation and Instruction Scheduling. Technical Report, 1995.