A new technique greatly reduces the error in an optical neural network, which uses light rather than electrical signals to process data. With this technique, the larger an optical neural network becomes, the lower the error in its computations. This could enable researchers to scale these devices up to sizes large enough for commercial use.

As machine-learning models grow in size and complexity, they require faster and more energy-efficient hardware to perform computations. Conventional digital computers are struggling to keep up.

An analog optical neural network can perform the same tasks as a digital neural network, such as image classification or speech recognition. Because its computations are performed with light rather than electrical signals, an optical neural network can run many times faster while using less energy.

However, these analog devices are susceptible to hardware errors, which can result in less precise computations. One source of these errors is microscopic flaws in hardware components. Errors can quickly accumulate in an optical neural network with many connected components.

Even with error-correction techniques, some amount of error is unavoidable due to fundamental properties of the devices that make up an optical neural network. As a result, a network large enough to be implemented in the real world would be far too imprecise to be effective.

MIT researchers have overcome this barrier and found a way to scale an optical neural network effectively. By adding a tiny hardware component to the optical switches that make up the network’s architecture, they can reduce even the uncorrectable errors that would otherwise accumulate in the device.

Their work could pave the way for a super-fast, energy-efficient analog neural network with the same accuracy as a digital one. With this technique, as the size of an optical circuit increases, the amount of error in its computations decreases.

“This is remarkable, as it runs counter to the intuition of analog systems, where larger circuits are supposed to have higher errors, so that errors set a limit on scalability. This present paper allows us to address the scalability question of these systems with an unambiguous ‘yes,’” says lead author Ryan Hamerly, a visiting scientist in the MIT Research Laboratory of Electronics (RLE) and Quantum Photonics Laboratory and senior scientist at NTT Research.

Multiplying with light

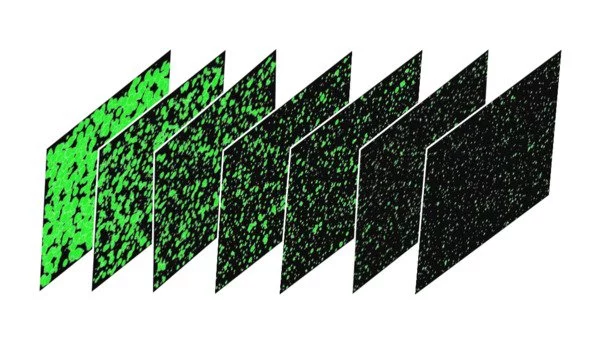

An optical neural network is made up of many interconnected components that work like reprogrammable, tunable mirrors. These tunable mirrors are called Mach-Zehnder interferometers (MZIs). Neural network data are encoded into light, which a laser then fires into the optical neural network.

A typical MZI contains two mirrors and two beam splitters. Light enters an MZI through the top and is split into two parts that interfere with each other before being recombined by the second beam splitter and reflected out the bottom to the next MZI in the array. Researchers can use the interference of these optical signals to perform matrix multiplication, the linear algebra operation neural networks rely on to process data.
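
The article does not give the device equations, but the standard textbook model of an MZI makes the matrix-multiplication point concrete. The sketch below is a minimal illustration under stated assumptions: each lossless beam splitter is the 2x2 matrix [[cos θ, i sin θ], [i sin θ, cos θ]] (θ = π/4 for an ideal 50:50 split), a single phase shifter φ sits on the top arm, and the helper names (`beam_splitter`, `phase_shift`, `mzi`, `apply`) are hypothetical. Sending light through the device is then just multiplying a two-element vector of field amplitudes by the MZI's transfer matrix.

```python
import cmath
import math


def beam_splitter(theta=math.pi / 4):
    """Lossless beam splitter (textbook model); theta = pi/4 is an ideal 50:50 split."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 1j * s], [1j * s, c]]


def phase_shift(phi):
    """Tunable phase shifter acting on the top arm only (assumed placement)."""
    return [[cmath.exp(1j * phi), 0], [0, 1]]


def matmul(a, b):
    """Product of two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mzi(phi):
    """Transfer matrix of an ideal MZI. Light meets the rightmost factor
    first: splitter, then phase shift, then the second splitter."""
    return matmul(beam_splitter(), matmul(phase_shift(phi), beam_splitter()))


def apply(m, field):
    """Matrix-vector product: output amplitudes at the top and bottom ports."""
    return [m[0][0] * field[0] + m[0][1] * field[1],
            m[1][0] * field[0] + m[1][1] * field[1]]


# Light enters only the top port with unit amplitude.
for phi in (0.0, math.pi / 2, math.pi):
    top, bottom = (abs(a) ** 2 for a in apply(mzi(phi), [1, 0]))
    print(f"phi = {phi:.2f}: top {top:.2f}, bottom {bottom:.2f}")
```

In this model, φ = 0 sends all light entering the top port out of the bottom port, φ = π sends it back out of the top port, and φ = π/2 splits it evenly. A full optical neural network chains many such 2x2 blocks to realize larger matrices.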

But errors that occur in each MZI quickly accumulate as light moves from one device to the next. Some errors can be avoided by identifying them in advance and tuning the MZIs so that later devices in the array cancel out earlier errors.

“It is a very simple algorithm if you know what the errors are. But these errors are notoriously difficult to ascertain because you only have access to the inputs and outputs of your chip,” says Hamerly. “This motivated us to look at whether it is possible to create calibration-free error correction.”
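
The article does not spell out that "very simple algorithm," so the following is only a single-device analogue of correcting known errors, not the researchers' method. Assuming the same textbook MZI model (two lossless splitters with angles θ1 and θ2, ideal value π/4, and one internal phase φ), the bottom-port power for light entering the top port varies with cos φ between sin²(θ1 - θ2) and sin²(θ1 + θ2). When the splitter errors are known, the phase that hits a target power can be solved for directly. The function names and error values are hypothetical.

```python
import cmath
import math


def bottom_power(theta1, theta2, phi):
    """Fraction of light entering the top port that leaves the bottom port
    of an MZI with splitting angles theta1, theta2 (pi/4 = ideal 50:50)
    and internal phase phi on the top arm."""
    c1, s1 = math.cos(theta1), math.sin(theta1)
    c2, s2 = math.cos(theta2), math.sin(theta2)
    return abs(s2 * c1 * cmath.exp(1j * phi) + c2 * s1) ** 2


def compensating_phase(theta1, theta2, target):
    """Internal phase that best realizes `target` bottom-port power when the
    splitter angles are KNOWN. Targets outside the reachable range
    [sin^2(theta1 - theta2), sin^2(theta1 + theta2)] are clamped to its edge."""
    c1, s1 = math.cos(theta1), math.sin(theta1)
    c2, s2 = math.cos(theta2), math.sin(theta2)
    offset = (s2 * c1) ** 2 + (c2 * s1) ** 2  # phase-independent part
    swing = 2 * s1 * s2 * c1 * c2              # amplitude of the cos(phi) term
    cos_phi = (target - offset) / swing
    return math.acos(max(-1.0, min(1.0, cos_phi)))


# Hypothetical, known splitter errors of +0.03 and -0.01 rad.
t1, t2 = math.pi / 4 + 0.03, math.pi / 4 - 0.01
for target in (0.7, 1.0):
    phi = compensating_phase(t1, t2, target)
    print(f"target {target:.2f} -> achieved {bottom_power(t1, t2, phi):.4f}")
```

Whenever θ1 + θ2 differs from π/2, a target of 1.0 lies outside the reachable range, and the best any phase setting can do is sin²(θ1 + θ2). Knowing the errors is not enough when the hardware cannot reach the required setting.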

Hamerly and his collaborators previously demonstrated a mathematical technique that went a step further: it could successfully infer the errors and tune the MZIs accordingly. But even this didn’t remove all the error.

Because of the fundamental nature of an MZI, in some instances it is impossible to tune a device so that all light flows out the bottom port to the next MZI. If a fraction of the light is lost at each step and the array is very large, only a tiny bit of power will be left by the end.
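
The compounding described above is simple arithmetic. This sketch assumes, purely for illustration, that every MZI on a path fails to route the same fraction of light (1% here, a hypothetical figure not taken from the study) and that these shortfalls multiply along the path.

```python
def surviving_power(loss_per_stage, num_stages):
    """Fraction of optical power still in the intended path after
    num_stages MZIs, assuming each one independently misroutes the same
    fraction loss_per_stage (a hypothetical, uniform loss model)."""
    return (1.0 - loss_per_stage) ** num_stages


# Hypothetical 1% shortfall per MZI.
for n in (10, 100, 1000):
    print(f"{n:5d} MZIs: {surviving_power(0.01, n):.2e} of the power remains")
```

With 1% lost per stage, roughly 90% of the power survives 10 stages, about 37% survives 100, and well under 0.01% survives 1,000.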

“Even with error correction, there is a fundamental limit to how good a chip can be. MZIs are physically unable to realize certain settings they need to be configured to,” he says.

So the team developed a new type of MZI. The researchers added another beam splitter to the end of the device and named it a 3-MZI, because it has three beam splitters rather than two. Because of the way this additional beam splitter mixes the light, it becomes much easier for an MZI to reach the setting it needs to send all light out through its bottom port.
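
The following sketch compares a standard MZI with a 3-MZI in the same textbook model. The source does not give the 3-MZI's internal layout, so this assumes a tunable phase shifter on the top arm between each consecutive pair of splitters, and gives every splitter the same hypothetical angle error ε = 0.05 rad. The function `best_cross_power` (a hypothetical name) grid-searches the phases for the largest fraction of top-port light that can be sent out the bottom port.

```python
import cmath
import itertools
import math


def beam_splitter(theta):
    """Lossless beam splitter (textbook model); theta = pi/4 is an ideal 50:50 split."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, 1j * s), (1j * s, c))


def apply(m, v):
    """Multiply a 2x2 matrix by a two-element field vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1])


def best_cross_power(angles, steps=256):
    """Largest fraction of top-port light routed to the bottom port by a
    chain of splitters with the given angles, assuming one top-arm phase
    shifter between each consecutive pair. Each phase is searched on a
    uniform grid of `steps` values."""
    splitters = [beam_splitter(t) for t in angles]
    phasors = [cmath.exp(2j * math.pi * k / steps) for k in range(steps)]
    first = apply(splitters[0], (1, 0))  # light enters the top port only
    best = 0.0
    for setting in itertools.product(phasors, repeat=len(angles) - 1):
        v = first
        for p, bs in zip(setting, splitters[1:]):
            v = apply(bs, (p * v[0], v[1]))  # phase on top arm, then splitter
        best = max(best, abs(v[1]) ** 2)
    return best


eps = 0.05  # hypothetical splitting-angle error, identical for every splitter
ideal = math.pi / 4
mzi = best_cross_power([ideal + eps] * 2)        # standard MZI: two splitters
three_mzi = best_cross_power([ideal + eps] * 3)  # assumed 3-MZI: three splitters
print(f"MZI residual error:   {1 - mzi:.1e}")
print(f"3-MZI residual error: {1 - three_mzi:.1e}")
```

In this model the standard MZI cannot exceed cos²(2ε), a residual of about 1% for ε = 0.05, whatever its phase. With the third splitter, the first two splitters act as a tunable splitter that can compensate for the third, so full routing becomes reachable and the remaining residual reflects only the grid resolution. This mirrors the article's qualitative claim; it is not a reproduction of the researchers' simulations.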

Bigger chip, fewer errors

When the researchers ran simulations to test their architecture, they found that it can eliminate much of the uncorrectable error that hampers accuracy. And as the optical neural network grows larger, the amount of error in the device actually drops, the opposite of what happens in a device built from standard MZIs.

Using 3-MZIs, they could potentially build a device large enough for commercial uses with its error reduced by a factor of 20, Hamerly says.

The researchers also created a variant of the MZI design specifically for correlated errors. These are caused by manufacturing flaws; for example, if the thickness of a chip is slightly off, the MZIs may all be off by the same amount, producing the same errors. They found a way to modify the configuration of an MZI to make it more resistant to these types of errors. This technique also increased the bandwidth of the optical neural network, so it can run three times faster.