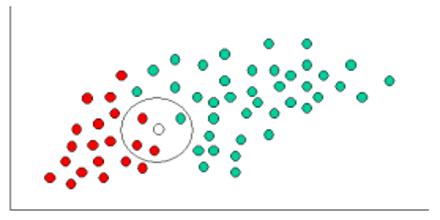

Naive Bayes classifiers are highly scalable: the number of parameters they require grows linearly with the number of variables (features) in a learning problem. The technique is based on Bayes' theorem, combined with the "naive" assumption that the features are conditionally independent given the class, and it is particularly well suited to problems where the dimensionality of the inputs is high. Despite its simplicity, Naive Bayes is often competitive with, and can sometimes outperform, more sophisticated classification methods. It can handle an arbitrary number of input variables, whether continuous or categorical. For some types of probability models, Naive Bayes classifiers can be trained very efficiently in a supervised learning setting.
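To make the idea concrete, here is a minimal sketch of a Naive Bayes classifier for categorical features, written in plain Python. It is an illustration under stated assumptions, not a reference implementation: the function names `train_naive_bayes` and `predict`, the Laplace smoothing parameter `alpha`, and the toy weather data are all made up for this example. Training only counts how often each feature value occurs within each class, which is why the number of stored parameters grows linearly with the number of features.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count class frequencies and per-class feature value frequencies."""
    class_counts = Counter(labels)
    # value_counts[(feature_index, label)][value] -> number of occurrences
    value_counts = defaultdict(Counter)
    # feature_values[feature_index] -> distinct values seen during training
    feature_values = defaultdict(set)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            feature_values[i].add(value)
    return class_counts, value_counts, feature_values


def predict(model, row, alpha=1.0):
    """Return the label with the highest posterior score for one row.

    Scores are sums of log-probabilities: log P(class) plus, for each
    feature, log P(value | class), using the independence assumption.
    `alpha` is a Laplace smoothing constant (an assumption of this sketch)
    that keeps unseen feature values from producing a zero probability.
    """
    class_counts, value_counts, feature_values = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total)  # log prior
        for i, value in enumerate(row):
            numerator = value_counts[(i, label)][value] + alpha
            denominator = count + alpha * len(feature_values[i])
            score += math.log(numerator / denominator)  # log likelihood
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy data: (outlook, temperature) -> play outside?
rows = [
    ("sunny", "hot"),
    ("sunny", "mild"),
    ("rainy", "mild"),
    ("rainy", "cool"),
    ("sunny", "cool"),
]
labels = ["no", "no", "yes", "yes", "yes"]

model = train_naive_bayes(rows, labels)
print(predict(model, ("rainy", "mild")))
print(predict(model, ("sunny", "hot")))
```

Working in log space avoids numerical underflow when many small probabilities are multiplied together, which matters for high-dimensional inputs. Continuous features would need a different likelihood model (for example a per-class Gaussian), which this sketch does not cover.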

Naive Bayes Classifier