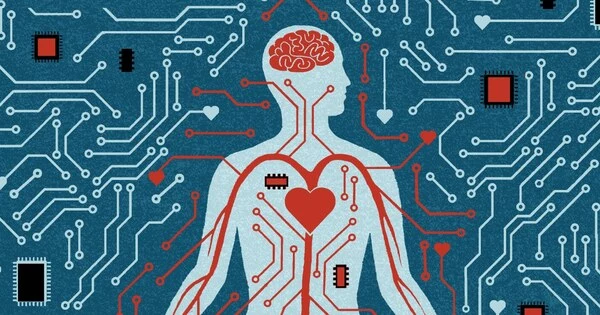

The question of whether society is ready for AI ethical decision-making is complex and multifaceted. On the one hand, AI technologies have made tremendous progress in recent years and could transform many areas of our lives, from healthcare to transportation to education. On the other hand, the rapid development of AI has raised a number of ethical concerns, particularly around bias, privacy, and accountability.

As technology advances rapidly, artificial intelligence (AI) plays an increasingly important role in decision-making processes. Humans increasingly rely on algorithms to process information, recommend specific behaviors, and even act on their behalf. A team of researchers investigated how people react to the introduction of AI decision-making, posing the question “Is society ready for AI ethical decision-making?” and studying it through human interaction with self-driving cars.

The team’s findings were published in the Journal of Behavioral and Experimental Economics.

In the first of two experiments, the researchers presented 529 human subjects with an ethical dilemma that a driver might face. In the researchers' scenario, a collision was unavoidable, and the driver had to decide whether to crash into one group of people or another: the crash would severely injure one group while saving the lives of the other. Subjects were asked to rate the driver's decision, both when the driver was a human and when the driver was an AI. This first experiment was designed to assess people's biases against AI ethical decision-making.

In the second experiment, 563 people answered the researchers’ questions. Here the researchers studied how people react to the debate over AI ethical decisions when that debate arises in social and political contexts. The experiment had two scenarios. In one, a fictitious government had already decided to allow self-driving cars to make ethical decisions. In the other, subjects could “vote” on whether to allow autonomous cars to make ethical decisions. In both cases, subjects could choose to support or oppose the technology’s decisions. This second experiment was designed to assess the impact of two different approaches to introducing AI into society.

When subjects were asked to evaluate the ethical decisions of either a human or an AI driver, the researchers found no clear preference for either. However, when subjects were asked explicitly whether a driver should be allowed to make ethical decisions on the road, they were more strongly opposed to AI-operated cars. The researchers attribute the gap between these two results to the interaction of two factors.

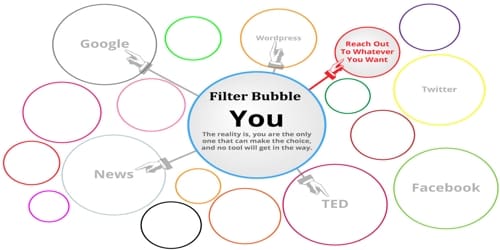

The first factor is that individuals believe society as a whole does not want AI ethical decision-making, so they give this perceived social view positive weight when asked for their own opinion on the subject. “In fact, when participants are explicitly asked to separate their answers from those of society, the difference between the permissibility of AI and human drivers vanishes,” said Johann Caro-Burnett, an assistant professor at Hiroshima University’s Graduate School of Humanities and Social Sciences.

The second factor is that allowing public discussion of this new technology has mixed results that depend on the country. “Information and decision-making power improve how subjects evaluate the ethical decisions of AI in regions where people trust their government and have strong political institutions. In contrast, decision-making capability deteriorates how subjects evaluate the ethical decisions of AI in regions where people do not trust their government and have weak political institutions,” Caro-Burnett stated.

“We discovered a social fear of AI ethical decision-making. The source of this fear, however, is not inherent in individuals. Indeed, individuals’ rejection of AI stems from what they believe to be society’s opinion,” Shinji Kaneko, a professor at Hiroshima University’s Graduate School of Humanities and Social Sciences and the Network for Education and Research on Peace and Sustainability, agreed. People show no signs of bias against AI ethical decision-making when not explicitly asked. When specifically asked, however, they express a dislike for AI. Furthermore, where there is more discussion of and information on the subject, AI acceptance improves in developed countries but deteriorates in developing countries.

The researchers believe that this rejection of a new technology, which stems mostly from individuals incorporating their beliefs about society’s opinion, is likely to apply to other machines and robots as well. “Therefore, it will be important to determine how to aggregate individual preferences into one social preference. Moreover, this task will also have to be different across countries, as our results suggest,” said Kaneko.

In short, while society may not be fully ready for AI ethical decision-making, there is a growing recognition of the importance of these issues, and efforts are underway to build the necessary knowledge and infrastructure to support ethical AI development and deployment.