A classic watermark is a visible mark or pattern that can appear on anything from the banknotes in your wallet to a postage stamp, where it helps discourage counterfeiting. You may also have noticed a watermark on the preview of your graduation photos. In artificial intelligence, however, the concept takes a slight detour, as most things in this space do.

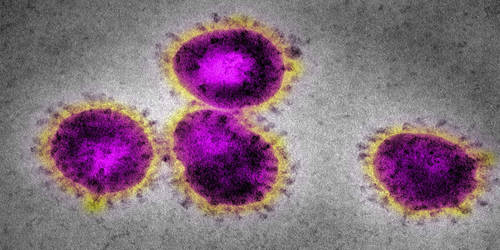

In the context of AI, watermarking lets a computer determine whether text or an image was generated by artificial intelligence. But why watermark images in the first place? Generative AI makes it easier to produce deepfakes and other forms of disinformation. Although invisible to the naked eye, these watermarks can help curb the misuse of AI-generated content and can even be built into the machine-learning models developed by tech giants such as Google. Other major firms in the sector, from OpenAI to Meta and Amazon, have pledged to develop watermarking technology to counter misinformation.

That’s why computer scientists at the University of Maryland (UMD) set out to understand how easily bad actors can add or remove watermarks. According to Soheil Feizi, a professor at UMD, his team’s findings reinforce his view that there are currently no reliable watermarking applications. During testing, the researchers readily evaded existing watermarking methods and found it even easier to add fake watermarks to images that were not generated by AI. Beyond probing how easily watermarks can be evaded, however, one UMD team also produced a watermark that is nearly impossible to remove from content without compromising the intellectual property it protects. Such a watermark makes it possible to detect when content has been stolen.

In a similar collaborative study, researchers from the University of California, Santa Barbara, and Carnegie Mellon University found that watermarks can be easily removed through simulated attacks. The research distinguishes two strategies for these attacks: destructive and constructive. In destructive attacks, bad actors treat the watermark as an integral part of the image. The watermark can be removed by adjusting the brightness and contrast, applying JPEG compression, or simply rotating the picture. While these methods do remove the watermark, they also noticeably degrade image quality. Constructive attacks are subtler: they treat the watermark as noise to be cleaned away, using techniques such as the good old Gaussian blur.
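To see why the type of attack matters, here is a toy experiment in Python with NumPy. It embeds a faint, key-derived ±1 noise pattern (a simple additive watermark of our own choosing, not the schemes evaluated in the research) and applies simplified stand-ins for the attacks above: a brightness shift, a contrast stretch, a 90° rotation, and a Gaussian blur. JPEG compression is left out to keep the sketch dependency-free. The key, the detection threshold of 4, and all attack parameters are arbitrary assumptions.

```python
import numpy as np

# Toy additive watermark plus simple stand-ins for destructive and constructive attacks.
# Illustrative only; real watermarking schemes and the attacks studied in the paper differ.

def make_pattern(shape, key):
    """Secret +/-1 pattern derived from a hypothetical integer key."""
    rng = np.random.default_rng(key)
    return rng.choice([-1.0, 1.0], size=shape)

def detect_score(image, pattern):
    """Normalized correlation: roughly standard normal when the pattern is absent."""
    centered = image - image.mean()
    return float((centered * pattern).sum() / (centered.std() * np.sqrt(image.size)))

def gaussian_blur(image, sigma=3.0):
    """Separable Gaussian blur with edge padding, so the output keeps the input shape."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

# Smooth synthetic 256x256 grayscale image standing in for a real picture.
yy, xx = np.mgrid[0:256, 0:256]
host = 128 + 60 * np.sin(xx / 20.0) * np.cos(yy / 30.0)
pattern = make_pattern(host.shape, key=1234)
marked = np.clip(host + 2.0 * pattern, 0, 255)

attacks = {
    "none": marked,
    "brightness +40": np.clip(marked + 40, 0, 255),           # destructive
    "contrast x1.5": np.clip(128 + 1.5 * (marked - 128), 0, 255),  # destructive
    "rotate 90 deg": np.rot90(marked),                         # destructive
    "gaussian blur": gaussian_blur(marked),                    # constructive
}

THRESHOLD = 4.0  # hypothetical decision threshold
scores = {name: detect_score(img, pattern) for name, img in attacks.items()}
for name, score in scores.items():
    print(f"{name:15s} score={score:6.2f} detected={score > THRESHOLD}")
```

With this toy detector, the brightness and contrast changes leave the score essentially unchanged, because the normalized correlation ignores global shifts and scaling, while the rotation and the blur push it down into the range of unmarked images. The blur works because it smooths away the fine-grained pattern while leaving the smooth picture almost intact. Which edits succeed depends on the scheme: a detector that also tried the other three 90° orientations would catch the rotated copy, and production watermarks are designed with such edits in mind, which is exactly what these simulated attacks probe.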

Watermarking of AI-generated content still needs to improve before it can withstand simulated attacks like those presented in these research papers, and it is easy to imagine digital watermarking turning into an arms race against hackers. For now, we can only hope for the best from new tools such as Google’s SynthID, a tool for identifying AI-generated images, which developers will keep refining until it reaches the public.

Still, the need for thought leaders to innovate has never been more pressing. With the 2024 presidential election in the United States quickly approaching, AI-generated content such as deepfake advertisements could play a significant role in shaping political opinion. The Biden administration has also raised the issue, voicing genuine concerns about how artificial intelligence could be exploited for disruptive purposes, notably to spread misinformation.