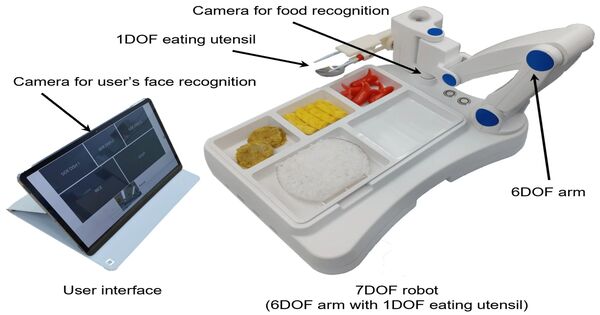

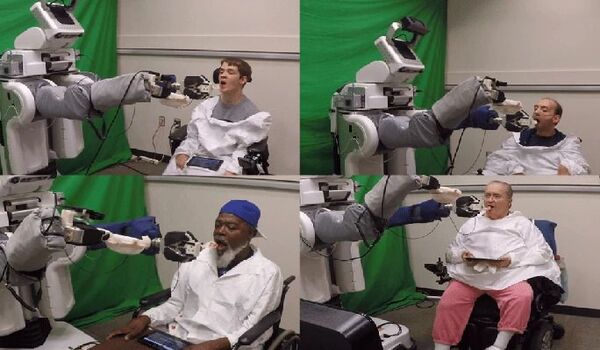

Cornell researchers have developed a robotic feeding system that uses computer vision, machine learning, and multimodal sensing to safely feed people with severe mobility limitations, including those with spinal cord injuries, cerebral palsy, and multiple sclerosis.

“Feeding individuals with severe mobility limitations with a robot is difficult, as many cannot lean forward and require food to be placed directly inside their mouths,” said Tapomayukh “Tapo” Bhattacharjee, assistant professor of computer science in the Cornell Ann S. Bowers College of Computing and Information Science and senior developer of the system. “The challenge intensifies when feeding individuals with additional complex medical conditions.”

A paper on the system, “Feel the Bite: Robot-Assisted Inside-Mouth Bite Transfer using Robust Mouth Perception and Physical Interaction-Aware Control,” was presented at the Human-Robot Interaction conference, held March 11-14 in Boulder, Colorado. It received a Best Paper Honorable Mention, while a demo of the research team’s broader robotic feeding system received a Best Demo Award.

Bhattacharjee, a leader in assistive robotics, and his EmPRISE Lab have spent years teaching machines the complex process by which we humans feed ourselves. Every step is hard to teach a machine, from identifying food items on a plate to picking them up and transferring them inside the mouth of a care recipient.

“This last 5 centimeters, from the utensil to inside the mouth, is extremely challenging,” Bhattacharjee stated.

Some care recipients have very limited mouth openings, measuring less than 2 centimeters, while others experience involuntary muscle spasms that can occur unexpectedly, even when the utensil is inside their mouth, Bhattacharjee said. In addition, some people can only bite food at specific locations inside their mouth, which they indicate by pushing the utensil with their tongue, he said.

“Current technology only looks at a person’s face once and assumes they will remain still, which is often not the case and can be very limiting for care recipients,” said Rajat Kumar Jenamani, the paper’s lead author and a doctoral student in the field of computer science.

To address these challenges, the researchers developed and outfitted their robot with two essential features: real-time mouth tracking that adjusts to users’ movements, and a dynamic response mechanism that lets the robot detect and react to physical interactions as they happen. Together, these allow the system to distinguish among sudden spasms, intentional bites, and a user’s attempts to reposition the utensil inside their mouth, the researchers said.
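To make the idea of telling these interactions apart concrete, here is a minimal Python sketch. It is not the researchers' method, which the article does not describe and which also draws on vision. It is a simple rule-based heuristic over force readings only, and the axis convention, the thresholds, and the names `Thresholds` and `classify_interaction` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List, Tuple

# One force sample in newtons: (fx, fy, fz).
# Assumed convention: z is normal to the utensil surface, x and y are lateral.
Force = Tuple[float, float, float]


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical tuning values, not taken from the paper."""
    contact_n: float = 0.5            # below this peak force, treat as no contact
    spasm_rate_n_per_s: float = 40.0  # force changing faster than this looks abrupt


def magnitude(f: Force) -> float:
    return sqrt(f[0] ** 2 + f[1] ** 2 + f[2] ** 2)


def classify_interaction(samples: List[Force], dt: float,
                         th: Thresholds = Thresholds()) -> str:
    """Label a short window of force samples taken every `dt` seconds."""
    if len(samples) < 2:
        return "none"
    mags = [magnitude(f) for f in samples]
    if max(mags) < th.contact_n:
        return "none"
    # An abrupt jump in force magnitude is treated as a spasm.
    max_rate = max(abs(b - a) / dt for a, b in zip(mags, mags[1:]))
    if max_rate > th.spasm_rate_n_per_s:
        return "spasm"
    # Otherwise use the average force direction over the window:
    # mostly normal to the utensil -> bite, mostly lateral -> tongue push.
    n = len(samples)
    mean = [sum(f[i] for f in samples) / n for i in range(3)]
    lateral = sqrt(mean[0] ** 2 + mean[1] ** 2)
    normal = abs(mean[2])
    return "bite" if normal >= lateral else "tongue_push"
```

In this sketch a controller would call `classify_interaction` on a sliding window of sensor readings and, for example, retract on `"spasm"`, hold still on `"bite"`, and follow the push on `"tongue_push"`. Those responses are likewise illustrative assumptions, not behavior reported by the researchers.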

The robotic system successfully fed 13 individuals with diverse medical conditions in a user study spanning three locations: the EmPRISE Lab on the Cornell Ithaca campus, a medical center in New York City, and a care recipient’s home in Connecticut. Users of the robot found it to be safe and comfortable, researchers said.

“This is one of the most extensive real-world evaluations of any autonomous robot-assisted feeding system with end-users,” Bhattacharjee said.

The team’s robot is a multi-jointed arm that holds a custom-built utensil at the end that can sense the forces being applied to it. The mouth tracking system, which was trained on thousands of images of different participants’ head poses and facial expressions, combines data from two cameras positioned above and below the utensil. According to the researchers, this allows for precise detection of the mouth and overcomes any visual obstructions caused by the utensil itself. The physical interaction-aware response mechanism uses both visual and force sensing to perceive how users are interacting with the robot, Jenamani said.
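One way to picture why two cameras help when the utensil blocks the view is a confidence-weighted fusion of their estimates. The Python sketch below is an assumption-laden illustration, not the team's pipeline: the article does not say how the two views are combined, and the `Estimate` format, the `min_conf` cutoff, and the function name `fuse_mouth_estimates` are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float, float]
# (mouth position in meters in a shared frame, detector confidence in [0, 1]).
# Both the frame and the confidence score are assumptions for this sketch.
Estimate = Tuple[Point, float]


def fuse_mouth_estimates(estimates: Sequence[Estimate],
                         min_conf: float = 0.3) -> Optional[Point]:
    """Confidence-weighted average of per-camera mouth positions.

    A camera whose view is blocked (for example, by the utensil) is assumed
    to report low confidence and is ignored. Returns None if no camera has
    a usable view.
    """
    usable = [(p, c) for p, c in estimates if c >= min_conf]
    if not usable:
        return None
    total = sum(c for _, c in usable)
    x = sum(p[0] * c for p, c in usable) / total
    y = sum(p[1] * c for p, c in usable) / total
    z = sum(p[2] * c for p, c in usable) / total
    return (x, y, z)


# Usage: the lower camera is occluded, so only the upper camera contributes.
upper = ((0.00, 0.00, 0.30), 0.9)
lower = ((0.02, 0.00, 0.30), 0.1)
mouth = fuse_mouth_estimates([upper, lower])
```

The design choice here is simply that a blocked view should be dropped rather than averaged in. A real system would more likely fuse estimates over time as well as across cameras.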

“We’re empowering individuals to control a 20-pound robot with just their tongue,” he said. He described the user studies as the most rewarding part of the research, noting the robot’s profound emotional impact on both care recipients and caregivers. During one session, the parents of a young woman with schizencephaly quadriplegia, a rare birth defect, watched their daughter successfully feed herself using the system.

“It was a moment of real emotion; her father raised his cap in celebration, and her mother was almost in tears,” Jenamani stated.

While further work is needed to explore the system’s long-term usability, its promising results highlight the potential to improve care recipients’ level of independence and quality of life, researchers said.