The liquid state machine (LSM) has been proposed as a computational model that is better suited than the Turing machine to explaining computation in biological neural networks. It is a particular kind of spiking neural network. These models extend conventional designs in order to process information in new ways. Like other kinds of neural networks, liquid state machines and similar architectures are modeled on the neurobiology of the human brain.

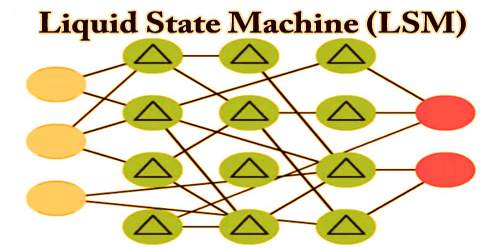

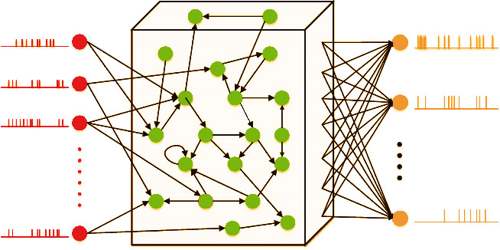

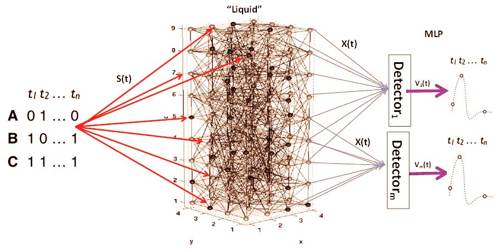

An LSM consists of a large set of units (called nodes or neurons); each node receives time-varying input both from external sources (the inputs) and from other nodes. The nodes are randomly connected to one another. The recurrent nature of these connections turns the time-varying input into a spatio-temporal pattern of activations in the network nodes. Linear discriminant units then read out these spatio-temporal patterns of activation. To understand what a liquid state machine is, it also helps to know which class of machine learning model it belongs to.
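The structure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the leaky integrate-and-fire node model, all parameter values, and the function names `run_liquid`, `train_readout` and `apply_readout` are choices made here for illustration, and a least-squares linear readout stands in for the linear discriminant units mentioned in the text.

```python
import numpy as np

def run_liquid(u, n_nodes=100, p_connect=0.1, dt=1.0, tau=20.0,
               threshold=1.0, seed=0):
    """Drive a randomly connected network of leaky integrate-and-fire
    nodes with the input signal u and return one state vector per step.
    All defaults are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    # Random sparse recurrent weights: each ordered pair of nodes is
    # connected with probability p_connect; no self-connections.
    mask = rng.random((n_nodes, n_nodes)) < p_connect
    w_rec = rng.normal(0.0, 0.3, (n_nodes, n_nodes)) * mask
    np.fill_diagonal(w_rec, 0.0)
    w_in = rng.normal(0.0, 1.0, n_nodes)  # input weight of each node

    v = np.zeros(n_nodes)       # membrane potentials
    spikes = np.zeros(n_nodes)  # spikes emitted on the previous step
    trace = np.zeros(n_nodes)   # low-pass filtered spike trains
    states = np.zeros((len(u), n_nodes))
    for t, u_t in enumerate(u):
        # Leak, plus external input, plus spikes from other nodes.
        v += dt / tau * (-v) + w_in * u_t + w_rec @ spikes
        spikes = (v >= threshold).astype(float)
        v[spikes > 0] = 0.0     # reset nodes that just fired
        trace += dt / tau * (-trace) + spikes
        states[t] = trace       # spatio-temporal activation pattern
    return states

def train_readout(states, targets):
    """Fit a linear readout (with bias) by least squares."""
    X = np.hstack([states, np.ones((len(states), 1))])
    w, *_ = np.linalg.lstsq(X, targets, rcond=None)
    return w

def apply_readout(states, w):
    """Apply a trained linear readout to recorded liquid states."""
    X = np.hstack([states, np.ones((len(states), 1))])
    return X @ w

# Example: drive the liquid with a sine wave and fit a readout to it.
u = np.sin(np.linspace(0.0, 20.0, 500))
states = run_liquid(u)
w = train_readout(states, u)
y = apply_readout(states, w)
print("training MSE:", np.mean((y - u) ** 2))
```

Only the readout weights are fitted in this sketch; the random connections inside the liquid stay fixed, which is why the same recorded states can later be reused for other targets.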

The structure of a Liquid State Machine (LSM)

The LSM was proposed as a computational model that is better suited to modeling computation in cortical microcircuits than conventional models such as Turing machines or attractor-based models from dynamical systems. Models of this kind are sometimes called "third-generation" neural networks, and many experts point to spiking neural networks as an example of how they work. The spiking neural network, which shares many of its models with the liquid state machine, adds the element of time to its synaptic and neuronal components. The word "liquid" in the name comes from an analogy with a stone dropped into still water or another body of liquid. The falling stone generates ripples in the liquid: the input (the motion of the falling stone) is converted into a spatio-temporal pattern of liquid displacement (the ripples).

In other words, both the inputs and the outputs of an LSM are streams of data in continuous time. These inputs and outputs are modeled mathematically as functions u(t) and y(t) of continuous time. In a liquid state machine model, the spiking neural activity results in a spatio-temporal pattern of activation across the network of neurons. Because this is a recurrent type of neural network, some form of memory is retained in the process. The LSM model is motivated by the hypothesis that the learning capability of an information processing device is its most delicate aspect, and that the availability of enough training examples is a primary bottleneck for goal-directed (i.e. supervised or reward-based) learning.
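The mapping from the input stream to the output stream is often written in two stages. The symbols $x^M(t)$, $L^M$ and $f^M$ are introduced here for illustration and do not appear in the text above: the liquid acts as a filter $L^M$ that turns the input function $u(\cdot)$ into a liquid state $x^M(t)$, and a memoryless readout map $f^M$ turns that state into the output.

$$
x^M(t) = (L^M u)(t), \qquad y(t) = f^M\bigl(x^M(t)\bigr)
$$

Because the readout $f^M$ has no memory of its own, any dependence of $y(t)$ on earlier inputs has to be carried by the liquid state $x^M(t)$.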

Functions of a Liquid State Machine (LSM)

Another clue to the nature of a liquid state machine lies in the name of this particular kind of spiking neural network. LSMs have been proposed as a way to explain how brains operate. They are argued to be an improvement over the theory of artificial neural networks because:

- Circuits are not hardcoded to perform a specific task.

- Continuous-time inputs are handled “naturally”.

- Computations on various time scales can be done using the same network.

- The same network can perform multiple computations.
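The last two points can be illustrated with a small sketch: one fixed random recurrent network is driven once, and two separate linear readouts are trained on the same recorded states to compute different functions of the input, one recalling the input from three steps earlier and one averaging it over the last five steps. For brevity the liquid here is a non-spiking tanh rate network, which is a simplification and not the spiking model discussed in this article; the targets, network size, and scaling constants are likewise illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n_nodes, n_steps, warmup = 200, 2000, 50

# One fixed, randomly connected recurrent network (never trained).
# Assumption: weights are rescaled to spectral radius 0.9, a common
# heuristic for keeping the dynamics stable.
w_rec = rng.normal(0.0, 1.0, (n_nodes, n_nodes))
w_rec *= 0.9 / np.max(np.abs(np.linalg.eigvals(w_rec)))
w_in = rng.uniform(-0.5, 0.5, n_nodes)

# Drive the network once with a random input stream and record its states.
u = rng.uniform(-1.0, 1.0, n_steps)
states = np.zeros((n_steps, n_nodes))
x = np.zeros(n_nodes)
for t in range(n_steps):
    x = np.tanh(w_rec @ x + w_in * u[t])
    states[t] = x

# Two different computations on the same input stream.
target_delay = np.roll(u, 3)                           # u[t - 3]
target_avg = np.convolve(u, np.ones(5) / 5)[:n_steps]  # mean of u[t-4..t]
# Drop the first steps, where the targets and states are not yet valid.
targets = np.column_stack([target_delay, target_avg])[warmup:]

# One linear readout per task, both fitted on the same liquid states.
X = np.hstack([states, np.ones((n_steps, 1))])[warmup:]
w_out, *_ = np.linalg.lstsq(X, targets, rcond=None)
pred = X @ w_out

mse = np.mean((pred - targets) ** 2, axis=0)
print("MSE (3-step delay, 5-step average):", mse)
```

Each column of `w_out` is an independent readout, so adding another task means fitting another column rather than changing the network itself.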

In more abstract models, the liquid is a generic dynamical system, preferably made up of diverse rather than uniform and stereotypical components. The idea is that dropping a stone or other solid object into a body of water or another liquid creates ripples on the surface, and movement beneath it, which can be analyzed to understand what is happening in the system. In the same way, people can examine the operation of a liquid state machine to learn more about how it models human brain activity.

Information Sources: