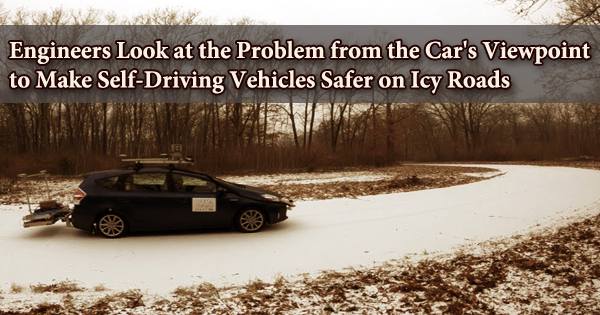

Nobody, including autonomous cars, enjoys driving in a blizzard. To make self-driving vehicles safer on icy roads, engineers look at the problem from the car's perspective. Navigating adverse weather is a key challenge for fully autonomous cars. Snow especially muddles the sensor data a vehicle relies on to gauge depth, locate hazards, and stay on the correct side of the yellow line, assuming the line is visible at all.

The Keweenaw Peninsula in Michigan, which receives more than 200 inches of snow each winter, is an ideal place to test autonomous car technology. Researchers from Michigan Technological University examine solutions for snowy driving conditions in two papers presented at SPIE Defense + Commercial Sensing 2021. Their work could help bring self-driving options to snowy cities like Chicago, Detroit, Minneapolis, and Toronto.

Autonomy, like the weather, isn't a simple sunny-or-snowy, yes-or-no proposition. Autonomous vehicles range from cars already on the market with blind-spot alerts or brake assistance, to vehicles that can switch between self-driving and human-driven modes, to vehicles that can navigate entirely on their own. Major manufacturers and research institutes are still refining self-driving technologies and algorithms. Accidents occasionally happen, either because the car's artificial intelligence (AI) misjudges a situation or because a human driver misuses self-driving features.

Humans have sensors too: our scanning eyes, our sense of balance and movement, and the processing power of our brains help us understand our surroundings. These seemingly basic inputs let us drive in almost any situation, even an unfamiliar one, because our brains are good at generalizing from new experiences. In autonomous cars, two cameras mounted on gimbals scan the scene and perceive depth using stereo vision to mimic human sight, while an inertial measurement unit measures balance and motion. Computers, however, can only react to situations they have encountered before or have been trained to recognize.
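Stereo vision recovers depth by triangulation. For two parallel, rectified cameras with focal length f (in pixels) separated by a baseline B, a point that appears shifted by d pixels (its disparity) between the left and right images lies at depth Z = f·B/d. The sketch below assumes that idealized pinhole geometry; the function name and the camera values are hypothetical and are not taken from the Michigan Tech vehicle.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by two parallel, rectified cameras.

    Assumes an ideal pinhole model: Z = f * B / d, with the focal length f
    and disparity d in pixels and the baseline B (camera separation) in metres.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 12 cm baseline, 20 px disparity.
print(stereo_depth(700, 0.12, 20))  # 4.2 (metres)
```

Halving the disparity doubles the estimated depth, which is why distant objects, whose disparities shrink to a few pixels, are harder to range precisely with a stereo pair.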

Because artificial brains don't exist yet, task-specific AI algorithms must take over, which means autonomous cars have to rely on multiple sensors. Fisheye cameras widen the field of view, while other cameras mimic the human eye. Infrared picks up heat signatures. Radar can see through fog and rain. Lidar (light detection and ranging) pierces the darkness and weaves a neon tapestry of laser-beam threads.

“Every sensor has limitations, and every sensor covers another one’s back,” said Nathir Rawashdeh, assistant professor of computing in Michigan Tech’s College of Computing and one of the study’s lead researchers. He brings the sensors’ data together using an AI process called sensor fusion.

“Sensor fusion uses multiple sensors of different modalities to understand a scene,” he said. “You cannot exhaustively program for every detail when the inputs have difficult patterns. That’s why we need AI.”

Rawashdeh’s Michigan Tech collaborators include Nader Abu-Alrub, a doctoral student in electrical and computer engineering; Jeremy Bos, an assistant professor of electrical and computer engineering; and master’s students and graduates from Bos’s lab: Akhil Kurup, Derek Chopp, and Zach Jeffries. Bos explains that lidar, infrared, and other sensors on their own are like the hammer in an old adage. “To a hammer, everything looks like a nail,” Bos said. “Well, if you have a screwdriver and a rivet gun, then you have more options.”

Most autonomous sensors and self-driving algorithms are being developed in sunny, clear conditions. Knowing that the rest of the world is not like Arizona or southern California, Bos’s lab began collecting local data in a Michigan Tech autonomous vehicle (safely driven by a person) during heavy snowfall. Rawashdeh’s team, led by Abu-Alrub, pored over more than 1,000 frames of lidar, radar, and image data from snowy roads in Germany and Norway to start teaching their AI program what snow looks like and how to see past it.

“All snow is not created equal,” Bos said, noting that the variety of snow makes sensor detection a challenge. Rawashdeh added that pre-processing the data and making sure it is labeled correctly is an important step in ensuring accuracy and safety: “AI is like a chef: if you have good ingredients, there will be an excellent meal,” he said. “Give the AI learning network dirty sensor data and you’ll get a bad result.”

Low-quality data is one problem; actual dirt is another. Much like road grime, snow buildup on the sensors is a solvable but bothersome issue. And even when the view is clear, autonomous car sensors don’t always agree on what obstacles they detect. Bos gave a good example: a deer his team found while cleaning up the locally collected data. Lidar said the blob was nothing (30% chance of an obstacle), the camera saw it the way a drowsy driver might (50% chance), and the infrared sensor shouted WHOA (90% sure that it is a deer).

Getting the sensors and their risk assessments to talk to and learn from one another is like the Indian parable of the three blind men who find an elephant: each touches a different part of it (the ear, the trunk, or the leg) and reaches a different conclusion about what kind of animal it is. Using sensor fusion, Rawashdeh and Bos want autonomous sensors to work out the answer collectively, whether it is an elephant, a deer, or a snowbank. “Rather than strictly voting, by using sensor fusion we will come up with a new estimate,” Bos said.
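To make the contrast between voting and fusion concrete, here is a minimal sketch using the three probabilities from the deer example. It is not the Michigan Tech team's method, which is described here only as AI-based sensor fusion. Instead it swaps in a simple textbook technique, naive Bayes fusion in log-odds form, and assumes that the sensors' errors are independent and that each sensor reports its probability against the same 50% prior. The sensor names and the 0.5 voting threshold are illustrative assumptions.

```python
import math


def majority_vote(probs: dict, threshold: float = 0.5) -> bool:
    """Each sensor votes 'obstacle' only if its probability exceeds the threshold."""
    votes = sum(1 for p in probs.values() if p > threshold)
    return votes > len(probs) / 2


def logit(p: float) -> float:
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def fuse_log_odds(probs: dict, prior: float = 0.5) -> float:
    """Combine per-sensor obstacle probabilities into one new estimate.

    Naive Bayes in log-odds form: assumes the sensors are conditionally
    independent and that every reported probability used the same prior.
    Each sensor adds its evidence (its log-odds minus the prior's log-odds).
    """
    prior_logit = logit(prior)
    total = prior_logit + sum(logit(p) - prior_logit for p in probs.values())
    return 1.0 / (1.0 + math.exp(-total))  # convert log-odds back to a probability


# Probabilities from the deer example (sensor names are illustrative).
deer = {"lidar": 0.30, "camera": 0.50, "infrared": 0.90}

print(majority_vote(deer))            # False: only infrared votes "obstacle"
print(round(fuse_log_odds(deer), 3))  # 0.794
```

With a strict 0.5 threshold, only the infrared sensor votes "obstacle," so the majority concludes nothing is there; making the threshold inclusive would flip the camera's vote and the outcome. Fusion instead yields a new estimate of about 79%: the infrared sensor's strong evidence outweighs lidar's weaker evidence, and the undecided camera contributes nothing. Real sensors' errors are rarely fully independent, so this is only an illustration.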

Autonomous cars are still a long way from navigating a Keweenaw snowstorm, but their sensors can get better at learning about bad weather, and with advances like sensor fusion, they may one day drive safely on icy roads.