Advanced machine-learning computations can now be performed on a low-power, memory-constrained edge device thanks to a new computing architecture. The technique could allow self-driving cars to make decisions in real time while only using a fraction of the energy that their power-hungry on-board computers currently require.

When you ask a smart home device for the weather forecast, the device takes several seconds to respond. One reason for this latency is that connected devices lack the memory and power to store and run the massive machine-learning models required for the device to understand what a user is asking of it. Instead, the model is stored in a data center hundreds of miles away, where the answer is computed and sent back to the device.

MIT researchers developed a new method for computing directly on these devices that significantly reduces latency. Their method offloads the memory-intensive steps of running a machine-learning model to a centralized server where model components are encoded onto light waves.

The waves are transmitted to a connected device via fiber optics, which allows massive amounts of data to be sent in real time across a network. The receiver then employs a simple optical device that performs computations quickly using the components of a model carried by those light waves.

When compared to other methods, this technique improves energy efficiency by more than a hundredfold. It could also improve security, since a user’s data do not need to be transferred to a central location for computation.

This method could enable a self-driving car to make decisions in real time while using just a tiny percentage of the energy currently required by power-hungry computers. It could also allow a user to have a latency-free conversation with their smart home device, be used for live video processing over cellular networks, or even enable high-speed image classification on a spacecraft millions of miles from Earth.

“Every time you want to run a neural network, you have to run the program, and how fast you can run the program depends on how fast you can pipe the program in from memory. Our pipe is massive – it corresponds to sending a full feature-length movie over the internet every millisecond or so. That is how fast data comes into our system. And it can compute as fast as that,” says senior author Dirk Englund, an associate professor in the Department of Electrical Engineering and Computer Science (EECS) and member of the MIT Research Laboratory of Electronics.

Joining Englund on the paper are lead author and EECS grad student Alexander Sludds; EECS grad student Saumil Bandyopadhyay; Research Scientist Ryan Hamerly; and others from MIT, the MIT Lincoln Laboratory, and Nokia Corporation. The research will be published in Science.

Lightening the load

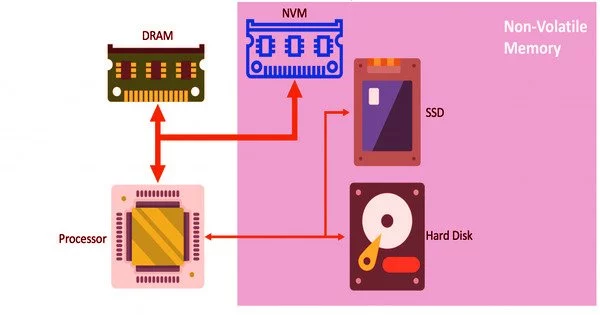

Neural networks are machine-learning models that use layers of interconnected nodes, or neurons, to recognize patterns in datasets and perform tasks such as image classification and speech recognition. However, these models can have billions of weight parameters, which are numerical values that transform input data as it is processed. These weights must be stored in memory. At the same time, the data transformation process involves billions of algebraic computations that require a significant amount of processing power.

According to Sludds, one of the most significant limiting factors to speed and energy efficiency is the process of retrieving data (the weights of the neural network in this case) from memory and moving it to the parts of a computer that do the actual computation.

“So we thought, why don’t we take all that heavy lifting, the process of retrieving billions of weights from memory, move it away from the edge device, and put it somewhere where we have abundant access to power and memory, giving us the ability to retrieve those weights quickly?” he says.

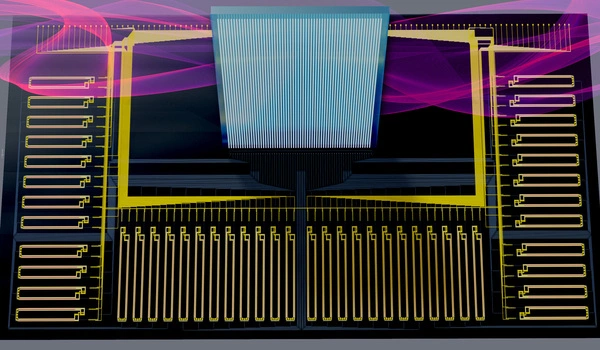

Netcast, the neural network architecture they created, involves storing weights in a central server that is linked to a novel piece of hardware known as a smart transceiver. This smart transceiver, a thumb-sized chip that can receive and transmit data, employs silicon photonics technology to retrieve trillions of weights from memory each second.

It receives weights as electrical signals and imprints them onto light waves. Since the weight data are encoded as bits (1s and 0s), the transceiver converts them by switching lasers: a laser is turned on for a 1 and off for a 0. It combines these light waves and then periodically transfers them through a fiber optic network so a client device doesn’t need to query the server to receive them.
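
The on/off scheme described above can be illustrated with a minimal Python sketch. It is not the researchers' implementation: the 8-bit weight precision, the most-significant-bit-first ordering, and the function names are all assumptions made for illustration.

```python
# Hypothetical sketch: integer weights sent as on/off laser states.
BITS_PER_WEIGHT = 8  # assumed precision, not stated in the article


def weights_to_laser_states(weights):
    """Turn integer weights (0..255) into a flat list of laser states.

    True means the laser is on (bit 1); False means it is off (bit 0).
    Bits are emitted most-significant first (an assumed convention).
    """
    states = []
    for w in weights:
        if not 0 <= w < 2 ** BITS_PER_WEIGHT:
            raise ValueError(f"weight {w} does not fit in {BITS_PER_WEIGHT} bits")
        for shift in range(BITS_PER_WEIGHT - 1, -1, -1):
            states.append(bool((w >> shift) & 1))
    return states


def laser_states_to_weights(states):
    """Recover the integer weights from a received on/off sequence."""
    if len(states) % BITS_PER_WEIGHT != 0:
        raise ValueError("incomplete weight at end of stream")
    weights = []
    for start in range(0, len(states), BITS_PER_WEIGHT):
        value = 0
        for on in states[start:start + BITS_PER_WEIGHT]:
            value = (value << 1) | int(on)
        weights.append(value)
    return weights


# Round trip: what the server sends is what the client reads back.
weights = [0, 5, 255]
states = weights_to_laser_states(weights)
assert laser_states_to_weights(states) == weights
```

In this toy model the client never asks for anything; it only reads the stream of on/off states as it arrives, which mirrors the broadcast idea in the paragraph above.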

“Optics is great because there are many ways to carry data within optics. For instance, you can put data on different colors of light, and that enables a much higher data throughput and greater bandwidth than with electronics,” explains Bandyopadhyay.

Trillions per second

Once the light waves arrive at the client device, a simple optical component known as a broadband “Mach-Zehnder” modulator uses them to perform super-fast analog computation. This entails encoding input data from the device, such as sensor information, onto the weights. Then it sends each individual wavelength to a receiver, which detects the light and measures the result of the computation.
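
One way to picture this step is the idealized numerical model below. It assumes, beyond what the article states, that each wavelength carries one row of weights over successive time steps, that the modulator scales all wavelengths by the same input value at each step, and that each receiver sums the light it detects over time. Under those assumptions the receivers end up holding a matrix-vector product. The function name and example values are hypothetical.

```python
def netcast_layer(weight_rows, inputs):
    """Idealized model of one layer computed at the client.

    weight_rows[i][t] is the weight carried by wavelength i at time step t.
    inputs[t] is the value the client's modulator applies at time step t.
    Returns one accumulated value per wavelength (per receiver).
    """
    accumulators = [0.0] * len(weight_rows)  # one detector per wavelength
    for t, x in enumerate(inputs):
        for i, row in enumerate(weight_rows):
            # The single modulator scales every wavelength by the same x,
            # and detector i adds the resulting light to its running sum.
            accumulators[i] += row[t] * x
    return accumulators


# Two wavelengths, three time steps (made-up values).
weight_rows = [[1.0, 2.0, 3.0],
               [0.5, 0.0, 1.0]]
inputs = [2.0, 1.0, 0.5]
outputs = netcast_layer(weight_rows, inputs)
```

The point of the sketch is that the client does only one multiplication per time step in hardware terms (the modulator), while the number of outputs grows with the number of wavelengths in the fiber.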

The researchers devised a method to use this modulator to perform trillions of multiplications per second, significantly increasing the device’s computation speed while using only a small amount of power.

“In order to make something faster, you need to make it more energy efficient. But there is a trade-off. We’ve built a system that can operate with about a milliwatt of power but still do trillions of multiplications per second. In terms of both speed and energy efficiency, that is a gain of orders of magnitude,” Sludds says.
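
A quick back-of-the-envelope calculation shows what those figures imply per operation. The round numbers below are assumptions read off the quote ("about a milliwatt" and a lower bound of one trillion multiplications per second), not measured values from the paper.

```python
# Assumed round numbers taken from the quote, not measured values.
power_watts = 1e-3                 # "about a milliwatt"
multiplications_per_second = 1e12  # lower bound for "trillions"

# Energy per operation is power divided by operation rate.
energy_per_multiplication_joules = power_watts / multiplications_per_second
energy_per_multiplication_femtojoules = energy_per_multiplication_joules / 1e-15

print(f"{energy_per_multiplication_femtojoules:.1f} fJ per multiplication")
```

Under these assumptions each multiplication costs on the order of a femtojoule, a millionth of a billionth of a joule.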

They put this architecture through its paces by sending weights across an 86-kilometer fiber that connects their lab to MIT Lincoln Laboratory. Netcast enabled machine learning at high speeds with high accuracy (98.7 percent for image classification and 98.8 percent for digit recognition).

“We had to do some calibration,” Hamerly adds, “but I was surprised by how little work we had to do to achieve such high accuracy out of the box; we were able to get commercially relevant accuracy.”