Researchers at Tokyo Tech have developed a new accelerator chip called “Hiddenite” that achieves state-of-the-art accuracy in computing sparse “hidden neural networks” with a lower computational burden.

By using the proposed on-chip model construction, which combines weight generation and “supermask” expansion, the Hiddenite chip significantly reduces external memory access and so improves computational efficiency.

Deep neural networks (DNNs) are a complex machine learning architecture for artificial intelligence (AI) that requires a large number of parameters to learn to predict outputs. However, DNNs can be “pruned,” which reduces both the computational load and the model size.

The “lottery ticket hypothesis” took the machine learning community by storm a few years ago. It proposed that a randomly initialized DNN contains subnetworks that, after training, reach accuracy comparable to the original DNN.

The bigger the network, the more “lottery tickets” it contains for successful optimization. These lottery tickets allow “pruned” sparse neural networks to reach accuracy comparable to more complex “dense” networks, which eases the computational load and saves energy.

One method for finding such subnetworks is the hidden neural network (HNN) algorithm, which applies AND logic (where the output is high only when all the inputs are high) to the initialized random weights and a “binary mask” called a “supermask.”

The supermask, defined by the top-k% highest scores, marks unselected connections as 0 and selected connections as 1. The HNN thereby helps reduce the computational load on the software side.
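The masking step described above can be illustrated with a minimal sketch in plain Python. It is not the researchers' implementation: the function names, the seed, the weight range, and the score values are all made up for illustration, and the AND-like gating is modeled simply as keeping a weight where the mask bit is 1 and zeroing it otherwise.

```python
import random

def make_supermask(scores, k_percent):
    """Return a 0/1 mask that selects the top k_percent highest scores."""
    n_keep = max(1, round(len(scores) * k_percent / 100))
    # Indices of the n_keep highest-scoring connections.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep = set(ranked[:n_keep])
    return [1 if i in keep else 0 for i in range(len(scores))]

def apply_supermask(weights, mask):
    """Gate each frozen random weight with its mask bit."""
    return [w if m else 0.0 for w, m in zip(weights, mask)]

rng = random.Random(0)  # hypothetical seed
# Random weights that are initialized once and never updated.
weights = [rng.uniform(-1.0, 1.0) for _ in range(8)]
# Made-up scores, one per connection.
scores = [0.9, 0.1, 0.4, 0.8, 0.3, 0.7, 0.2, 0.6]

mask = make_supermask(scores, k_percent=50)
sparse_weights = apply_supermask(weights, mask)
print(mask)  # [1, 0, 0, 1, 0, 1, 0, 1]
```

Only the connections whose mask bit is 1 contribute to the computation, which is where the sparsity comes from.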

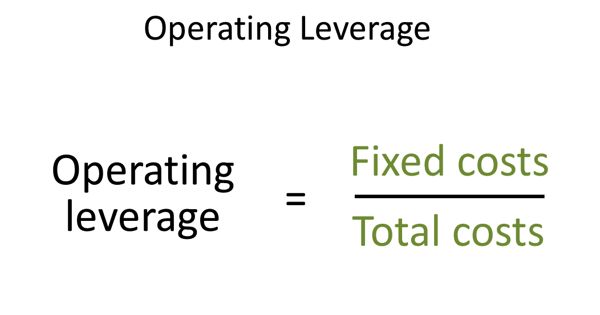

However, computing neural networks also requires improvements in the hardware. Traditional DNN accelerators offer high performance, but they do not account for the power consumed by external memory access.

Now, researchers at Tokyo Institute of Technology (Tokyo Tech), led by Professors Jaehoon Yu and Masato Motomura, have developed a new accelerator chip called “Hiddenite” that can compute hidden neural networks with far lower power consumption.

“Reducing the external memory access is the key to reducing power consumption. Currently, achieving high inference accuracy requires large models. But this increases external memory access to load model parameters. Our main motivation behind the development of Hiddenite was to reduce this external memory access,” explains Prof. Motomura.

Their findings will be presented at the International Solid-State Circuits Conference (ISSCC) 2022, a global conference showcasing the pinnacles of achievement in integrated circuits.

“Hiddenite,” which stands for Hidden Neural Network Inference Tensor Engine, is the first HNN inference chip. Its architecture offers three advantages that reduce external memory access and deliver high energy efficiency.

The first is on-chip weight generation, which re-generates the weights with a random number generator. As a result, there is no need to store the weights in external memory or to fetch them from it.

The second is “on-chip supermask expansion,” which reduces the number of supermasks that the accelerator must load. The third is the high-density four-dimensional (4D) parallel processor on the Hiddenite chip, which increases data reuse throughout the computational process and so improves efficiency.
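The first advantage rests on a general property of seeded pseudo-random generators: the same seed always reproduces the same sequence, so only the seed has to be kept. The sketch below is a software analogy using Python's standard `random` module, not a description of the chip's actual generator; `generate_weights`, the seed value, and the weight range are assumptions made for illustration.

```python
import random

def generate_weights(seed, count):
    """Recreate `count` pseudo-random weights from `seed` alone."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(count)]

SEED = 2022  # hypothetical value; the only thing that must be stored

first = generate_weights(SEED, 16)
second = generate_weights(SEED, 16)  # regenerated later, nothing loaded

print(first == second)  # True
```

Because the weights can be rebuilt on demand, they never have to travel in from external memory, which is the saving the article describes.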

“The first two factors are what set the Hiddenite chip apart from existing DNN inference accelerators,” reveals Prof. Motomura. “Moreover, we also introduced a new training method for hidden neural networks, called ‘score distillation,’ in which the conventional knowledge distillation weights are distilled into the scores because hidden neural networks never update the weights. The accuracy using score distillation is comparable to the binary model while being half the size of the binary model.”

Based on the Hiddenite architecture, the team designed, fabricated, and measured a prototype chip using the 40 nm process of Taiwan Semiconductor Manufacturing Company (TSMC).

The chip measures only 3 mm × 3 mm and can perform 4,096 MAC (multiply-and-accumulate) operations simultaneously. It achieves state-of-the-art computational efficiency, up to 34.8 tera operations per second (TOPS) per watt of power, while halving the amount of model transfer compared with binarized networks.
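To put the efficiency figure in more tangible terms, it can be converted into energy per operation. The short calculation below uses only the 34.8 TOPS per watt quoted above and the fact that one watt is one joule per second; it reflects the peak figure and says nothing about typical workloads.

```python
PEAK_TOPS_PER_WATT = 34.8  # peak efficiency quoted in the article

# 1 W = 1 J/s, so TOPS per watt equals tera-operations per joule.
ops_per_joule = PEAK_TOPS_PER_WATT * 1e12

# Convert joules per operation to femtojoules (1 J = 1e15 fJ).
energy_per_op_fj = 1e15 / ops_per_joule

print(f"{energy_per_op_fj:.1f} fJ per operation")  # 28.7 fJ per operation
```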

These findings, together with their successful demonstration on a real silicon chip, could bring about another paradigm shift in machine learning, opening the door to computing that is faster, more efficient, and ultimately more environmentally friendly.