Researchers have proposed a new, lower-energy approach known as a “stashing system,” inspired by neuromodulation in the brain. By emulating the way a neural network's topology changes continuously according to the situation, a research team led by Professor Kyung Min Kim of the Department of Materials Science and Engineering at KAIST has developed a technology that can efficiently handle the mathematical operations required for artificial intelligence.

The human brain changes its neural topology in real time, learning to store or recall memories as needed. The research team presented a new artificial intelligence learning method that directly implements this kind of neural coordination circuit configuration.

Artificial intelligence (AI) now offers semiconductor companies their best opportunity in decades. The world produces enormous amounts of data every day, and the AI systems designed to make sense of it continually need faster and more reliable technology.

Artificial intelligence research is becoming increasingly active, particularly in the era of the Fourth Industrial Revolution, and the development and release of AI-based electronic devices is accelerating.

AI could allow semiconductor companies to capture between 40% and 50% of the total value of the technology stack, which would be their largest opportunity in decades. Storage will see the fastest growth, but semiconductor companies will profit most from advances in computing, memory, and networking.

Integrating artificial intelligence into electronic devices calls for customized hardware. However, most electronic devices for artificial intelligence require high power consumption and highly integrated memory arrays for large-scale tasks.

Overcoming these power consumption and integration limitations has proven difficult, and researchers have studied how the human brain solves such problems. The large stores of data that AI applications demand can be handled far more easily by specialized AI memory, which has 4.5 times more bandwidth than conventional memory.

Given the significant performance boost, many users would be willing to pay the additional cost of specialized memory (about $25 per gigabyte, compared with $8 for standard memory). AI-related chips may account for about 20% of demand by 2025, generating around $67 billion in revenue.
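To see why the higher price can still be attractive, the figures quoted above can be combined into a rough price-per-bandwidth comparison. This is a back-of-the-envelope sketch: it assumes the "4.5 times more bandwidth" figure means a bandwidth ratio of 4.5 and that both prices refer to comparable capacities, neither of which the article states precisely. The variable names are illustrative.

```python
# Figures quoted in the article (US dollars per gigabyte).
standard_price = 8.0
specialized_price = 25.0

# Assumption: "4.5 times more bandwidth" is read as a ratio of 4.5.
bandwidth_ratio = 4.5

# How much more the specialized memory costs per gigabyte.
price_ratio = specialized_price / standard_price

# Cost per unit of bandwidth, relative to standard memory (1.0 = same cost).
relative_cost_per_bandwidth = price_ratio / bandwidth_ratio

print(f"price ratio: {price_ratio:.3f}x")
print(f"relative cost per unit of bandwidth: {relative_cost_per_bandwidth:.2f}")
```

Under these assumptions the specialized memory costs a little over three times as much per gigabyte, yet each unit of bandwidth comes out roughly 30% cheaper than with standard memory.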

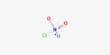

To demonstrate the effectiveness of the technology, the research team built artificial neural network hardware equipped with a self-rectifying synaptic array and an algorithm called a “stashing system,” developed to carry out artificial intelligence learning.

As a result, the stashing system reduced energy consumption by 37% without any loss of accuracy. This result shows that neuromodulation as it occurs in humans can be emulated in hardware.
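The article does not spell out how the stashing algorithm works, so the following is only a toy illustration of the general idea of changing a network's topology to save work. It assumes, hypothetically, that "stashing" means temporarily setting aside a fraction of hidden neurons, and it uses the number of multiply-accumulate operations as a crude stand-in for energy. The function names, layer sizes, and the 25% stash fraction are all made up for the example and are not taken from the study.

```python
import random

def make_stash_mask(n_hidden, stash_fraction, rng):
    """Return a list of booleans: True = neuron active, False = stashed."""
    n_stashed = int(round(n_hidden * stash_fraction))
    stashed = set(rng.sample(range(n_hidden), n_stashed))
    return [i not in stashed for i in range(n_hidden)]

def synaptic_ops(n_inputs, n_outputs, mask):
    """Count multiply-accumulate operations for one pass through an
    input -> hidden -> output network, using only active hidden neurons."""
    active = sum(mask)
    return n_inputs * active + active * n_outputs

rng = random.Random(0)  # fixed seed so the example is repeatable
n_inputs, n_hidden, n_outputs = 784, 100, 10  # illustrative sizes

# Baseline: every hidden neuron participates.
full = synaptic_ops(n_inputs, n_outputs, [True] * n_hidden)

# Hypothetical stashing step: set aside 25% of the hidden neurons.
mask = make_stash_mask(n_hidden, 0.25, rng)
stashed = synaptic_ops(n_inputs, n_outputs, mask)

saving = 1 - stashed / full
print(f"active hidden neurons: {sum(mask)} of {n_hidden}")
print(f"operations: {stashed} vs {full} ({saving:.0%} fewer)")
```

In this simplified model the work saved scales directly with the fraction of neurons set aside. The reported 37% figure is a hardware measurement and is not something this sketch attempts to reproduce.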

Professor Kim said, “In this study, we implemented the learning method of the human brain with only a simple circuit composition and through this we were able to reduce the energy needed by nearly 40 percent.”

This stashing system, inspired by neuromodulation, replicates the neural activity of the brain and is compatible with commercially available semiconductor hardware. It is anticipated that it will be utilized in the creation of future artificial intelligence semiconductor chips.

This study was published in Advanced Functional Materials in March 2022 and supported by KAIST, the National Research Foundation of Korea, the National NanoFab Center, and SK Hynix.