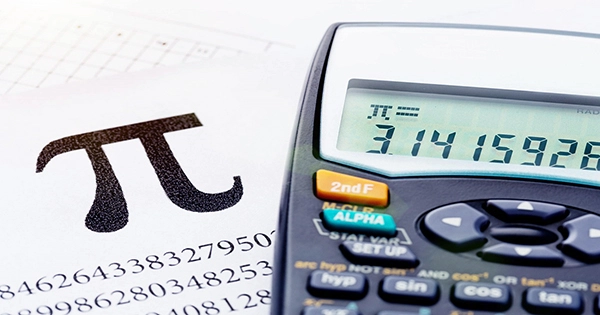

If you ask a physicist the value of pi, they’ll probably say 3.14 – or 3.142 if they’re feeling really academic that day. It’s even worse if you ask an engineer: the usual response is “around three.” Ask Google Cloud, however, and you’re in for a lengthy and highly precise journey. This week the company reclaimed the world record for the most digits of pi ever computed, extending the known value of pi, the ratio of a circle’s circumference to its diameter, to an astounding 100 trillion digits.

And when we say “reclaimed,” we mean it: this is the second time Google Cloud has held the title. “Records are designed to be broken,” Google developer advocate Emma Haruka Iwao said in a statement celebrating the milestone. “This is the second time we’ve utilized Google Cloud to calculate a record number of digits for the mathematical constant,” she explained. “In only three years, we’ve tripled the amount of digits.”

That is, if anything, an understatement: Google’s previous world record was 31.4 trillion digits of pi, an accomplishment the company celebrated on Pi Day 2019, so the new figure is more than three times as long. Last year, however, Google had its thunder stolen when a group of Swiss scientists doubled the number of known digits, bringing the most precise known value of pi to 62.8 trillion digits.

Google has now raised the stakes once more after nearly six months of steady computation. As you might expect for a feat of this magnitude, it wasn’t simple: “we calculated the amount of the temporary storage necessary for the computation to be roughly 554 TB,” Haruka Iwao revealed. “The highest persistent disk storage that you can attach to a single virtual machine is 257 TB, which is usually plenty for standard single-node applications, but not in this situation.”

Instead, the engineers built a cluster of one compute node and 32 storage nodes with a total disk capacity of 663 TB. To give you an idea of how enormous that is, imagine installing the massively multiplayer online role-playing game World of Warcraft 7,000 times over. But what is all this power actually doing?

The digits were calculated using a method devised by two mathematicians, the brothers David and Gregory Chudnovsky. The Chudnovsky brothers developed their method in the late 1980s, and within a few years they had smashed the record for most pi digits computed themselves, reaching two billion digits using a supercomputer they built from mail-order parts in their shared New York apartment. The Chudnovsky algorithm has been the method of choice for most record attempts since its publication. In fact, it is the only approach that has set a new record in the last 12 years.
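For the curious, the series behind the method fits in a few lines of Python. This is only a minimal sketch for illustration, not how the record-setting run was implemented: the function name `chudnovsky_pi`, the choice of ten guard digits, and the simple term-by-term summation are all assumptions made here for readability, and the approach is far too slow for anything beyond a few thousand digits.

```python
from decimal import Decimal, getcontext
from math import factorial


def chudnovsky_pi(digits: int) -> str:
    """Return pi truncated to `digits` decimal places, as a string.

    Illustrative only: sums the Chudnovsky series one term at a time.
    """
    # Work with a few extra guard digits so rounding errors in the
    # intermediate divisions do not reach the digits we return.
    getcontext().prec = digits + 10

    # Each term contributes roughly 14 digits, so this many terms suffice.
    n_terms = digits // 14 + 2

    total = Decimal(0)
    for k in range(n_terms):
        numerator = factorial(6 * k) * (13591409 + 545140134 * k)
        denominator = (
            factorial(3 * k)
            * factorial(k) ** 3
            * (-262537412640768000) ** k  # (-640320^3)^k
        )
        total += Decimal(numerator) / Decimal(denominator)

    # pi = 426880 * sqrt(10005) / (sum of the series)
    pi = Decimal(426880) * Decimal(10005).sqrt() / total

    # Keep "3." plus the requested number of decimal places.
    return str(pi)[: digits + 2]
```

Calling `chudnovsky_pi(50)` needs only five terms of the series to produce 50 decimal places, beginning 3.14159265358979.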

That’s because it’s an extremely efficient algorithm: every new iteration, meaning each additional term of the underlying series, yields on average more than 14 further digits. It’s a huge leap forward from the early attempts to compute pi. The first method was devised by Archimedes – he of the bathtime “eureka!” fame – and relied on polygons. A computer, in those days human rather than mechanical, would construct two regular polygons, one inscribed inside a circle and one circumscribed around it, and then use the perimeters of the polygons as lower and upper limits on the circumference.

The more sides the polygons had, the more precise the estimate of pi became. Archimedes went all the way up to 96-sided polygons, establishing that pi was greater than 3.1408 but less than 3.1429.
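The polygon method can be sketched in modern terms as follows. This is a hedged illustration rather than a reconstruction of Archimedes’ own hand calculation: it assumes a circle of radius 1, starts from hexagons, and uses a standard side-doubling recurrence (a harmonic mean followed by a geometric mean). The function name `archimedes_bounds` is made up for this example.

```python
from math import sqrt


def archimedes_bounds(doublings: int = 4):
    """Bracket pi between inscribed and circumscribed regular polygons.

    Starts from hexagons around a circle of radius 1 and doubles the
    number of sides `doublings` times. Returns (sides, lower, upper),
    where lower and upper are the half-perimeters of the inscribed and
    circumscribed polygons.
    """
    sides = 6
    lower = 3.0              # half-perimeter of the inscribed hexagon
    upper = 2.0 * sqrt(3.0)  # half-perimeter of the circumscribed hexagon

    for _ in range(doublings):
        # Circumscribed polygon with twice the sides: harmonic mean.
        upper = 2.0 * upper * lower / (upper + lower)
        # Inscribed polygon with twice the sides: geometric mean.
        lower = sqrt(upper * lower)
        sides *= 2

    return sides, lower, upper


sides, lower, upper = archimedes_bounds()
print(f"{sides}-sided polygons: {lower:.5f} < pi < {upper:.5f}")
```

Four doublings take the hexagons to 96 sides (6, 12, 24, 48, 96). The resulting bounds sit just inside the 3.1408 and 3.1429 quoted above, because Archimedes had to round his square roots by hand and always rounded in the safe direction.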