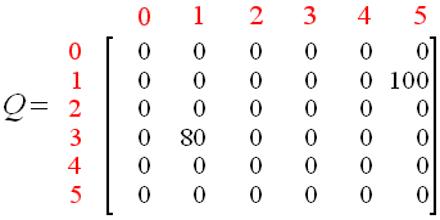

This article talks about Q-Learning, which learns the optimal policy even when actions are selected according to a more exploratory or even random policy. It is an Off-Policy algorithm for Temporal Difference learning. It is a form of reinforcement learning in which the agent learns to assign values to state-action pairs. Q-Learning works by learning an action-value function that ultimately gives the expected utility of taking a given action in a given state and following the optimal policy thereafter. Sometimes in noisy environments “Q-Learning” can overestimate the actions values, slowing the learning.

Q-Learning