Imagine making a deposit into your account using an online banking app. Like any other data sent over the internet, that transmission could be corrupted by noise that introduces errors into the data.

To get around this issue, senders encode data prior to transmission, and a receiver then uses a decoding algorithm to correct errors and recover the original message. In some cases, data are received together with reliability information that helps the decoder determine which parts of a transmission are most likely to contain errors.

Researchers from MIT and other institutions have created a decoder chip that uses a novel statistical model to exploit this reliability information more quickly and simply than previous methods.

The chip uses the team’s earlier universal decoding algorithm, which can decode any error-correcting code. Decoding hardware can typically process only one kind of code. The new universal decoder chip breaks the previous record for energy-efficient decoding, outperforming conventional hardware by a factor of 10 to 100.

Because separate hardware would no longer be needed for different codes, this development might allow mobile devices to have fewer chips. That would reduce the amount of material required for manufacture, lowering costs and improving sustainability.

The chip may also enhance device performance and extend battery life by reducing the energy requirements of the decoding process. It might be especially helpful for demanding applications like 5G networks and augmented and virtual reality.

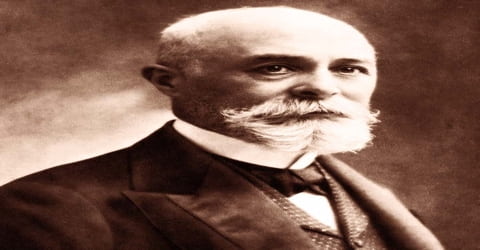

“This is the first time anyone has broken below the 1 picojoule-per-bit barrier for decoding. That is roughly the same amount of energy you need to transmit a bit inside the system. It had been a big symbolic threshold, but it also changes the balance in the receiver of what might be the most pressing part from an energy perspective. We can move that away from the decoder to other elements,” says Muriel Médard, the School of Science NEC Professor of Software Science and Engineering, a professor in the Department of Electrical Engineering and Computer Science, and a co-author of a paper presenting the new chip.

Médard’s co-authors include lead author Arslan Riaz, a graduate student at Boston University (BU); Rabia Tugce Yazicigil, assistant professor of electrical and computer engineering at BU; and Ken R. Duffy, then director of the Hamilton Institute at Maynooth University and now a professor at Northeastern University, as well as others from MIT, BU, and Maynooth University. The work is being presented at the International Solid-State Circuits Conference.

Smarter sorting

Digital data are transmitted over a network in the form of bits (0s and 1s). A sender encodes data by adding an error-correcting code, which is a redundant string of 0s and 1s that can be viewed as a hash. Information about this hash is held in a specific code book.
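
As a rough illustration of adding redundancy, the sketch below uses a toy (7,4) Hamming code, chosen here only as an example and not named in the article. The function names `encode` and `is_codeword` are hypothetical. Four data bits gain three parity bits, and the membership check plays the role of looking a word up in the code book.

```python
# Toy (7,4) Hamming code: 4 data bits plus 3 redundant parity bits.
# This code is an illustrative assumption, not the code used by the chip.

def encode(data):
    """Append three parity bits to four data bits."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [d1, d2, d3, d4, p1, p2, p3]

def is_codeword(word):
    """Code-book membership test: recompute the parity bits and compare."""
    return encode(word[:4]) == list(word)

sent = encode([1, 0, 1, 1])
received = sent.copy()
received[2] ^= 1  # noise flips one bit in transit

print(sent, is_codeword(sent))          # the transmitted word passes the check
print(received, is_codeword(received))  # the corrupted word fails it
```

The redundancy lets the receiver detect that something went wrong; deciding which bits to correct is the decoder's job.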

A decoding algorithm at the receiver, designed specifically for this code, uses its code book and hash structure to retrieve the original information, which might have been muddled by noise. Because each algorithm is code-specific, and most require specialized hardware, a device would need numerous chips to decode several codes.

The researchers previously demonstrated GRAND (Guessing Random Additive Noise Decoding), a universal decoding algorithm that can crack any code. GRAND operates by guessing the noise that affected the transmission, subtracting that noise pattern from the received data, and then checking what remains against a code book. It guesses a series of noise patterns in order from most likely to least likely.
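
The guess-and-check loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the team's implementation: it assumes every bit is equally likely to flip, so patterns with fewer flips are tried first, and it uses a toy (7,4) Hamming membership test. The names `noise_patterns`, `grand_decode`, and `is_codeword` are hypothetical.

```python
from itertools import combinations

def is_codeword(word):
    """Membership test for a toy (7,4) Hamming code (illustrative assumption)."""
    d1, d2, d3, d4 = word[:4]
    parity = [d1 ^ d2 ^ d4, d1 ^ d3 ^ d4, d2 ^ d3 ^ d4]
    return list(word[4:]) == parity

def noise_patterns(n):
    """Yield noise patterns from most to least likely.

    Assumes each bit flips independently with the same small probability,
    so patterns with fewer flipped bits come first.
    """
    for weight in range(n + 1):
        for positions in combinations(range(n), weight):
            pattern = [0] * n
            for i in positions:
                pattern[i] = 1
            yield pattern

def grand_decode(received, membership_test):
    """Remove guessed noise (XOR) until the result is in the code book."""
    for guesses, pattern in enumerate(noise_patterns(len(received)), start=1):
        candidate = [r ^ e for r, e in zip(received, pattern)]
        if membership_test(candidate):
            return candidate, guesses
    return None, None

received = [1, 0, 0, 1, 0, 1, 0]  # code word 1011010 with its third bit flipped
decoded, guesses = grand_decode(received, is_codeword)
print(decoded, guesses)
```

The decoder touches the code only through `membership_test`, which is what makes the approach independent of any particular code: swapping in a different test decodes a different code with the same loop.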

Data are frequently received with soft information (also known as reliability information), which aids a decoder in determining which bits are in error. The new decoding chip, called ORBGRAND (Ordered Reliability Bits GRAND), uses this reliability information to sort data based on how likely each bit is to be in error.
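
To make "soft information" concrete, here is a hedged sketch of how a receiver might derive it. It assumes a simple signaling scheme in which bit 0 is sent as +1.0 and bit 1 as -1.0; that scheme, the sample values, and the function name `demodulate` are assumptions for illustration and are not taken from the article. The sign of each received sample gives the bit decision, and its magnitude serves as a reliability score.

```python
def demodulate(samples):
    """Split each received sample into a hard bit and a reliability score.

    Assumes bit 0 was sent as +1.0 and bit 1 as -1.0 (illustrative only).
    A sample close to zero could have come from either bit, so it is unreliable.
    """
    hard_bits = [0 if s >= 0 else 1 for s in samples]
    reliability = [abs(s) for s in samples]
    return hard_bits, reliability

samples = [0.9, -1.2, 0.1, -0.05, 1.4, -0.8, 0.3]  # made-up noisy samples
hard_bits, reliability = demodulate(samples)

# Bit positions sorted from least reliable to most reliable.
least_reliable_first = sorted(range(len(samples)), key=lambda i: reliability[i])

print(hard_bits)
print(least_reliable_first)
```

Here the fourth sample (-0.05) is the one the receiver trusts least, so a reliability-aware decoder would consider flipping that bit before any other.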

But it isn’t as simple as ordering single bits. While the least reliable bit may be the single most likely error, the third and fourth least reliable bits together may be just as likely to be in error as the seventh least reliable bit on its own.

To sort bits in this way, ORBGRAND employs a new statistical model that takes into account the fact that a group of bits together can be just as likely to be in error as an individual bit.
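
One way to produce such an ordering, assumed here for illustration rather than taken from the chip's design, is to rank bits from least reliable (rank 1) to most reliable and try sets of flips in order of increasing rank sum. Under that assumption, flipping ranks 3 and 4 together (3 + 4 = 7) ties with flipping rank 7 alone, which matches the example in the text. The function names are hypothetical.

```python
from itertools import islice

def distinct_partitions(total, max_part):
    """Yield lists of distinct ranks in 1..max_part that sum to `total`."""
    if total == 0:
        yield []
        return
    for part in range(min(total, max_part), 0, -1):
        # Remaining ranks must be strictly smaller, so no rank repeats.
        for rest in distinct_partitions(total - part, part - 1):
            yield [part] + rest

def rank_ordered_patterns(n):
    """Yield flip sets (lists of ranks) by increasing rank sum.

    Rank 1 is the least reliable bit. The rank-sum ordering is an
    illustrative assumption about how groups and single bits are compared.
    """
    for rank_sum in range(n * (n + 1) // 2 + 1):
        yield from distinct_partitions(rank_sum, n)

# The first few guesses for an 8-bit word: nothing flipped, then rank 1, ...
first_guesses = list(islice(rank_ordered_patterns(8), 8))
print(first_guesses)

# All flip sets that tie at rank sum 7, including [7] and [4, 3].
tied_at_seven = list(distinct_partitions(7, 8))
print(tied_at_seven)
```

To turn a list of ranks into actual bit positions, a decoder would look each rank up in the reliability-sorted order of the received bits.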

“If your car isn’t working, soft information might tell you that it is probably the battery. But if it isn’t the battery alone, maybe it is the battery and the alternator together that are causing the problem. This is how a rational person would troubleshoot: you’d say that it could actually be these two things together before going down the list to something that is much less likely,” Médard says.

This approach is far more efficient than traditional decoders, which instead analyze the code structure and typically have performance that is designed for the worst-case scenario.

“With a traditional decoder, you’d pull out the blueprint of the car and examine each and every piece. You’ll find the problem, but it will take you a long time and you’ll get very frustrated,” Médard explains.

ORBGRAND stops sorting as soon as a code word is found, which is often very soon. To find the code word more quickly, the chip also uses parallelization, generating and testing numerous noise patterns at once. Even though it runs numerous processes simultaneously, the decoder’s energy usage remains low because it stops working once it finds the code word.
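
The combination of batch testing and early stopping can be sketched in software as follows. This is a loose analogy under stated assumptions, not a model of the chip: the `lanes` parameter stands in for hardware units that would test patterns concurrently (the loop here is sequential), the rank-sum ordering and the toy (7,4) Hamming membership test are illustrative choices, and all names are hypothetical.

```python
from itertools import islice

def is_codeword(word):
    """Membership test for a toy (7,4) Hamming code (illustrative assumption)."""
    d1, d2, d3, d4 = word[:4]
    return list(word[4:]) == [d1 ^ d2 ^ d4, d1 ^ d3 ^ d4, d2 ^ d3 ^ d4]

def distinct_partitions(total, max_part):
    """Yield lists of distinct ranks in 1..max_part that sum to `total`."""
    if total == 0:
        yield []
        return
    for part in range(min(total, max_part), 0, -1):
        for rest in distinct_partitions(total - part, part - 1):
            yield [part] + rest

def soft_decode(hard_bits, reliability, membership_test, lanes=4):
    """Test flip sets in batches of `lanes`; stop at the first code word."""
    n = len(hard_bits)
    # order[r - 1] is the position of the r-th least reliable bit.
    order = sorted(range(n), key=lambda i: reliability[i])
    stream = (ranks
              for rank_sum in range(n * (n + 1) // 2 + 1)
              for ranks in distinct_partitions(rank_sum, n))
    tested = 0
    while True:
        batch = list(islice(stream, lanes))
        if not batch:
            return None, tested
        for ranks in batch:  # hardware lanes would check these concurrently
            tested += 1
            candidate = list(hard_bits)
            for r in ranks:
                candidate[order[r - 1]] ^= 1
            if membership_test(candidate):
                return candidate, tested  # early stop: no further work is done

hard_bits = [1, 0, 0, 1, 0, 1, 0]                    # third bit was flipped by noise
reliability = [2.1, 1.8, 0.2, 2.5, 1.1, 0.9, 3.0]    # made-up scores; third bit is weakest
decoded, tested = soft_decode(hard_bits, reliability, is_codeword)
print(decoded, tested)
```

In this example the flipped bit is also the least reliable one, so the code word turns up on the second pattern tested and the remaining patterns are never generated.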

Record-breaking efficiency

When the researchers compared their approach with other chips, ORBGRAND decoded with maximum accuracy while consuming only 0.76 picojoules of energy per bit, breaking the previous performance record. ORBGRAND consumes 10 to 100 times less energy than other devices.
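
To put the per-bit figure in perspective, the short calculation below converts energy per bit into decoder power at a given data rate. The 1 gigabit-per-second rate is a hypothetical value chosen for round numbers and does not come from the article; only the 0.76 picojoule figure and the 10x to 100x range do.

```python
ENERGY_PER_BIT_PJ = 0.76       # from the article
ASSUMED_BITS_PER_SECOND = 1e9  # hypothetical 1 Gb/s link, not from the article

def decoder_power_mw(energy_pj_per_bit, bits_per_second):
    """Power in milliwatts = energy per bit (joules) x bit rate x 1000."""
    return energy_pj_per_bit * 1e-12 * bits_per_second * 1e3

orbgrand_mw = decoder_power_mw(ENERGY_PER_BIT_PJ, ASSUMED_BITS_PER_SECOND)
# Devices using 10x and 100x more energy per bit, per the article's range.
other_low_mw = decoder_power_mw(10 * ENERGY_PER_BIT_PJ, ASSUMED_BITS_PER_SECOND)
other_high_mw = decoder_power_mw(100 * ENERGY_PER_BIT_PJ, ASSUMED_BITS_PER_SECOND)

print(f"ORBGRAND: {orbgrand_mw:.2f} mW")
print(f"Other devices: {other_low_mw:.1f} to {other_high_mw:.0f} mW")
```

At that assumed rate, the decoder would draw under a milliwatt, while devices at the other end of the stated range would draw tens of milliwatts for the same task.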

One of the biggest challenges of developing the new chip came from this reduced energy consumption, Médard says. Because ORBGRAND is now so energy-efficient, operations the researchers had not previously focused on, such as checking the code word against a code book, now account for most of the effort.

“Now, this checking process, which is like turning on the car to see if it works, is the hardest part. So, we need to find more efficient ways to do that,” she says.

The team is also looking into ways to modify transmission modulation so they can benefit from the ORBGRAND chip’s increased efficiency. They also intend to investigate how their method might be applied to handle overlapping broadcasts more effectively.

The U.S. Defense Advanced Research Projects Agency (DARPA) and Science Foundation Ireland fund the research, in part.