Without GPS, autonomous systems get lost easily. Now, a new algorithm developed at Caltech allows autonomous systems to work out where they are simply by looking at the terrain around them and, for the first time, the technology works regardless of seasonal changes to that terrain.

The technique was detailed on June 23 in the journal Science Robotics, published by the American Association for the Advancement of Science (AAAS). The underlying approach, known as visual terrain-relative navigation (VTRN), was first developed in the 1960s. By comparing nearby terrain to high-resolution satellite images, autonomous systems can locate themselves.
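The matching step described above can be sketched in a few lines. This is only an illustration of correlation-based matching in general, not the researchers' code: the function names `ncc`, `window`, and `localize` are hypothetical, the "satellite image" is random noise, and plain Python lists are used to keep the example dependency-free.

```python
import math
import random

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length sequences."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom > 0 else 0.0

def window(image, row, col, height, width):
    """Flatten the height x width window of `image` whose top-left is (row, col)."""
    return [image[r][c]
            for r in range(row, row + height)
            for c in range(col, col + width)]

def localize(view, sat_map):
    """Return (row, col, score) of the window in sat_map that best matches view."""
    h, w = len(view), len(view[0])
    flat_view = window(view, 0, 0, h, w)
    best = (0, 0, -1.0)
    # Brute-force search: score every possible placement of the view on the map.
    for r in range(len(sat_map) - h + 1):
        for c in range(len(sat_map[0]) - w + 1):
            score = ncc(flat_view, window(sat_map, r, c, h, w))
            if score > best[2]:
                best = (r, c, score)
    return best

random.seed(0)
# Assumed stand-in for a satellite tile: 32 x 32 random intensities.
sat_map = [[random.random() for _ in range(32)] for _ in range(32)]
# The 6 x 6 patch the vehicle "sees", cut from rows 9-14, columns 20-25.
view = [map_row[20:26] for map_row in sat_map[9:15]]
row, col, score = localize(view, sat_map)
```

Because the view here is an exact crop of the map, the search recovers the crop's position. Real systems face the harder case where the two images differ.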

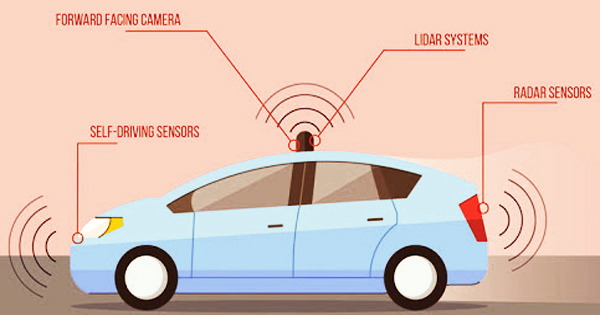

An autonomous vehicle uses a fully automated driving system to respond to external situations that a human driver would otherwise handle. Highly automated cars with fully automated speed control can detect traffic lights and other non-vehicular activity. Compared with human-driven cars, autonomous vehicle technology may offer certain benefits.

One possible benefit is improved road safety. Vehicle collisions cause a large number of deaths each year, and automated cars could reduce the number of casualties because their software is expected to make fewer errors than people. Another possible benefit is less traffic congestion: fewer accidents mean fewer blockages, and autonomous driving can also eliminate human behaviors that slow traffic, such as stop-and-go driving.

The difficulty is that the current generation of VTRN works only when the terrain it is looking at closely matches the images in its database. Anything that alters or obscures that terrain, such as snow cover or fallen leaves, causes the images to mismatch and the system to fail.

Unless there is a database of landscape images under every conceivable condition, VTRN systems are easily confused. To overcome this obstacle, a team from the lab of Soon-Jo Chung, a Caltech professor and research scientist at JPL, which Caltech manages for NASA, used deep learning and artificial intelligence (AI) to remove the seasonal content that stymies current VTRN systems.

“The rule of thumb is that both images, the one from the satellite and the one from the autonomous vehicle, have to have identical content for current techniques to work. The differences that they can handle are about what can be accomplished with an Instagram filter that changes an image’s hues,” says Anthony Fragoso (MS ’14, Ph.D. ’18), lecturer and staff scientist, and lead author of the Science Robotics paper. “However, in real-world systems, things vary dramatically depending on the season since the pictures no longer include the same items and can no longer be directly compared.”
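A small numerical illustration of that rule of thumb, under loudly simplified assumptions: a uniform brightness and contrast shift stands in for the "filter," unrelated random values stand in for snow-covered ground, and zero-mean normalized cross-correlation stands in for a correlation-based matcher. None of this comes from the paper.

```python
import math
import random

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length sequences."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom > 0 else 0.0

random.seed(1)
# Assumed reference patch: 400 flattened pixel intensities.
satellite = [random.random() for _ in range(400)]
# Same content with a filter-like change (lower contrast, higher brightness).
refiltered = [0.6 * p + 0.2 for p in satellite]
# Crude stand-in for a seasonal change: the content itself is different.
snow_covered = [random.random() for _ in range(400)]

same_content_score = ncc(satellite, refiltered)       # stays close to 1
changed_content_score = ncc(satellite, snow_covered)  # falls toward 0
```

Subtracting the mean and dividing by the norm cancels the uniform shift, so the refiltered patch still matches. Nothing in the correlation can compensate for content that is no longer the same.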

Chung and Fragoso used “self-supervised learning” in the approach they developed with graduate student Connor Lee (BS ’17, MS ’19) and undergraduate student Austin McCoy. Rather than relying on human annotators to meticulously curate enormous data sets in order to teach an algorithm how to recognize what it is seeing, this method allows the algorithm to teach itself. The AI searches for patterns in images by teasing out details and features that people would likely overlook.

Supplementing the current generation of VTRN with the new method yields more accurate localization. In one experiment, the researchers used a correlation-based VTRN technique to localize images of summer foliage against winter leaf-off imagery. They found that performance was no better than a coin flip, with navigation failures occurring in half of the attempts.

Incorporating the new algorithm into the VTRN, on the other hand, was considerably more successful: 92 percent of attempts were correctly matched, and the remaining 8 percent could be flagged as problematic ahead of time and then easily handled using other established navigation techniques.
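The article does not say how questionable matches are identified. One common, generic way to flag them is to check whether the best match score is both high and clearly ahead of the runner-up. The sketch below shows that idea only; the function `flag_match` and its thresholds `min_peak` and `min_ratio` are hypothetical and are not taken from the paper.

```python
def flag_match(scores, min_peak=0.5, min_ratio=1.2):
    """Return (best_index, trusted) for a non-empty list of match scores.

    Hypothetical rule: trust the best candidate only if its score is at
    least `min_peak` and at least `min_ratio` times the runner-up's score.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    best = ranked[0]
    peak = scores[best]
    runner_up = scores[ranked[1]] if len(ranked) > 1 else 0.0
    # A non-positive runner-up cannot rival the peak, so skip the ratio test.
    distinct = runner_up <= 0 or peak / runner_up >= min_ratio
    return best, peak >= min_peak and distinct

clear = flag_match([0.1, 0.9, 0.2])         # one sharp peak
ambiguous = flag_match([0.55, 0.6, 0.58])   # several near-equal candidates
weak = flag_match([0.1, 0.3, 0.2])          # best score is still low
```

A match that fails the check would not be used for localization; the vehicle would fall back on another navigation method for that frame.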

“Computers can find obscure patterns that our eyes can’t see and can pick up even the smallest trend,” says Lee. VTRN was in danger of turning into an infeasible technology in common but challenging environments, he says. “We rescued decades of work in solving this problem.”

Beyond autonomous drones on Earth, the technology also has potential for space missions. The entry, descent, and landing (EDL) system of JPL’s Mars 2020 Perseverance rover mission, for example, used VTRN for the first time on Mars to land at Jezero Crater, a site previously considered too hazardous for a safe entry.

With rovers such as Perseverance, “a certain amount of autonomous driving is necessary,” Chung says, “since transmissions take seven minutes to travel between Earth and Mars, and there is no GPS on Mars.” The researchers also considered the Martian polar regions, which undergo seasonal changes comparable to Earth’s; there, the new method might improve navigation in support of scientific objectives such as the search for water.

Next, Fragoso, Lee, and Chung will expand the system to account for changes in the weather, such as fog, rain, and snow. If successful, their work might help improve navigation systems for self-driving cars.

The Boeing Company and the National Science Foundation jointly sponsored this study. McCoy took part in the Summer Undergraduate Research Fellowship program at Caltech.