Music is a powerful emotional communicator, with distinct emotional connections for different musical “modes” in different cultures. A cross-cultural study has found that the tonal patterns used in music to communicate emotions are stable across cultures and are comparable to those used in speech. The full report was published on 14 March 2012 in the open-access journal PLoS ONE.

Because speech and music have different structural elements and purposes, they may contain domain-specific emotional signals. Pitch in music, for example, is generally distinct and arranged hierarchically. Pitches can also be determined by the composer and are out of the performer’s control. Pitch movement in speech is usually continuous, not hierarchically ordered, and at the speaker’s option.

Regular stress cycles, known as meters, are another feature of music. The expressiveness of a musical performance is enhanced by departures from the expected timing. In Western music, the major mode is linked with exuberant, joyful feelings, whereas the minor mode is associated with more muted or sorrowful emotions. Carnatic music, South India’s classical music, has a similar relationship between “ragas” and emotions.

Across four distinct music samples, an optimal factorial design was employed with six major musical cues (mode, tempo, dynamics, articulation, timbre, and register). Listeners rated 200 musical examples on four emotional characteristics they detected (happy, sad, peaceful, and scary). The results showed that all cues had strong effects, and multiple regression was used to assess the relative importance of each cue.
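To make the regression step concrete, here is a minimal sketch of how cue weights could be estimated from ratings. It is not the study's analysis: it assumes a full two-level design (every cue coded as -1 or +1) rather than the optimal design described above, and the names `TRUE_WEIGHTS`, `toy_rating`, and `estimate_weights` are hypothetical. The ratings are generated from invented weights purely so the estimate can be checked. In a balanced design like this one the cue columns are uncorrelated, so each least-squares coefficient reduces to the average of (cue level × rating).

```python
from itertools import product

CUES = ["mode", "tempo", "dynamics", "articulation", "timbre", "register"]

# Invented weights used only to generate toy ratings; NOT the study's estimates.
TRUE_WEIGHTS = {
    "mode": 1.5, "tempo": 1.0, "dynamics": 0.5,
    "articulation": 0.25, "timbre": 0.125, "register": 0.75,
}


def toy_rating(levels):
    """Synthetic 'happy' rating: a baseline plus a weighted sum of cue levels."""
    return 4.0 + sum(TRUE_WEIGHTS[cue] * x for cue, x in zip(CUES, levels))


def estimate_weights():
    """Estimate one regression coefficient per cue from a full 2-level design."""
    # Every combination of -1/+1 across the six cues: 2**6 = 64 stimuli.
    design = list(product((-1, 1), repeat=len(CUES)))
    ratings = [toy_rating(row) for row in design]
    n = len(design)
    # With orthogonal -1/+1 columns, the least-squares slope for a cue is
    # simply the mean of (cue level * rating) over all stimuli.
    return {
        cue: sum(row[i] * y for row, y in zip(design, ratings)) / n
        for i, cue in enumerate(CUES)
    }


weights = estimate_weights()
# Rank cues by the size of their estimated effect, largest first.
ranking = sorted(weights, key=lambda cue: abs(weights[cue]), reverse=True)
```

Because the toy ratings contain no noise, the estimated weights match the invented ones. With real listener ratings the same calculation would give noisy estimates, and an unbalanced or fractional design would call for a general least-squares solver instead of this shortcut.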

Mode was the most significant cue, followed by tempo, register, dynamics, articulation, and timbre; however, the order of importance changed depending on the emotion. The study’s authors found that some elements were shared when comparing modes and ragas used to communicate comparable emotions. They go on to show that these qualities are related to cross-cultural tonal properties of speech that communicate similar emotions.

Music communicates emotion, which is one of the main reasons it involves the listener so profoundly. Not only do music composers and performers profit from music’s powerful emotional impacts, but so do the gaming and film industries, as well as marketing and music therapy. Music has been researched from a variety of viewpoints to see how it affects listeners’ emotions.

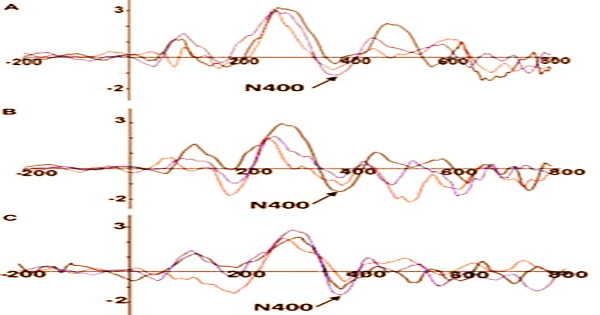

Self-report measures are one such approach, in which listeners record the feelings that they perceive or actually experience while listening to music. Another technique uses physiological and neurological markers of the emotions elicited by music.

The authors, led by Duke University’s Dale Purves, believe that their findings corroborate the theory that a piece of music’s tonality communicates emotion by imitating the tonal qualities of emotion in the voice. Although many non-musical factors play a role in the induction of emotions (e.g., context, associations, and individual factors), the focus of this paper is on the properties inherent in music that cause the listener to experience emotions and are generally related to the mechanism of emotional contagion.

In speech, rhythm is more subtle, and there are disagreements over how to quantify it effectively. These disagreements show how hard it is to compare pitch and rhythm in emotional speech and music. As a result, while speech and music may have both shared and domain-specific emotional signals, only a limited number of the most evident emotional cues have been studied.

Because all signals are completely interconnected in actual music, determining the exact contribution of individual cues to emotional expression is difficult. The answer is to synthesize versions of a particular tune and modify the cues in it separately and systematically. The causal role of each cue in conveying emotions in music may be assessed using a factorial design like this.

Because each level of each variable must be manipulated independently, the exhaustive combinations quickly add up to an unrealistic number of trials. As a result, research on emotional expression in music using factorial designs has generally concentrated on relatively few cues. Most existing studies have manipulated two or three variables, each with two or three levels. Furthermore, most studies have concentrated on just a few “basic emotions,” ignoring more complex emotions.
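The arithmetic behind this explosion is simple: the size of a full factorial design is the product of the number of levels of each variable. The sketch below illustrates it. The function names and the specific level counts and labels are assumptions chosen for illustration, not values taken from any of the studies mentioned.

```python
from itertools import product
from math import prod


def full_factorial_size(levels_per_variable):
    """Number of stimuli in a full factorial design: the product of level counts."""
    return prod(levels_per_variable)


def enumerate_design(levels):
    """List every combination of levels as a dict mapping variable name to level."""
    names = list(levels)
    return [dict(zip(names, combo)) for combo in product(*levels.values())]


# Small designs of the kind described above stay manageable.
two_by_two = full_factorial_size([2, 2])         # 2 variables x 2 levels = 4
three_by_three = full_factorial_size([3, 3, 3])  # 3 variables x 3 levels = 27

# Six cues at three levels each (a hypothetical choice) already need 729 stimuli.
six_cues = full_factorial_size([3] * 6)

# Enumerating a tiny design with hypothetical level labels.
variants = enumerate_design({"mode": ["major", "minor"], "tempo": ["slow", "fast"]})
```

Each additional cue multiplies the total by its number of levels, and if every stimulus is also rated on several emotion scales the number of judgments grows further. This is why designs that sample only a carefully chosen subset of combinations become attractive.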