A new study published in Frontiers in Neurorobotics shows how a partially paralyzed man was able to feed himself for the first time in thirty years by controlling two robotic arms with his thoughts. Neurorobotics is an emerging area of study at the intersection of robotics, artificial intelligence, and neuroscience.

Brain-computer interfaces (BCIs), also referred to as brain-machine interfaces (BMIs), are a type of neurotechnology that uses artificial intelligence (AI) to help people with speech or motor difficulties live more independently.

“This demonstration of bimanual robotic system control via a BMI in collaboration with intelligent robot behavior has major implications for restoring complex movement behaviors for those living with sensorimotor deficits,” wrote the authors of the study.

This study was led by principal investigator Pablo A. Celnik, M.D., of Johns Hopkins Medicine, as part of a clinical trial with an approved Food and Drug Administration Investigational Device Exemption.

To record neural activity, six Blackrock Neurotech NeuroPort electrode arrays were implanted in the motor and somatosensory cortices of both hemispheres of the participant's brain. The participant was a 49-year-old man with partial quadriplegia who had lived with a spinal cord injury for about 30 years before the study.

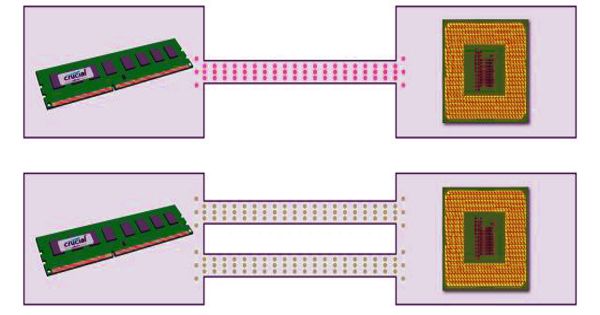

Specifically, four arrays were implanted in the man’s left hemisphere: two 96-channel arrays in the primary motor cortex and two 32-channel arrays in the somatosensory cortex. In the right hemisphere, one 96-channel array was implanted in the primary motor cortex and one 32-channel array in the somatosensory cortex.

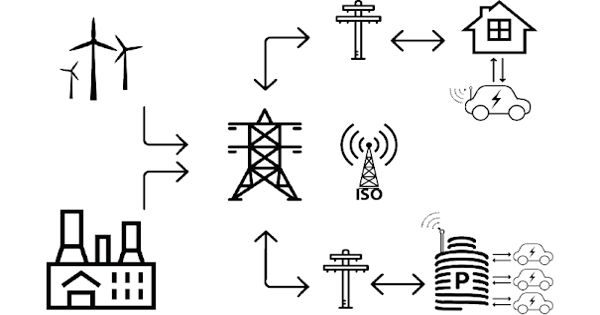

The participant was given tasks to complete while three 128-channel NeuroPort Neural Signal Processors, wired to the implanted microelectrode arrays, recorded his brain activity. He was seated at a table between two robotic arms, with a pastry on a plate set in front of him. His task was to use his thoughts to guide the robotic limbs, one holding a fork and the other a knife, to cut a piece of the pastry and bring it to his mouth.
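The channel counts reported above can be cross-checked with a little arithmetic. The short Python sketch below tallies the arrays as the article describes them and compares the total with the combined capacity of the three processors. The variable names are illustrative, and how individual channels were actually routed to each processor is not stated here, so only the totals are compared.

```python
# Array layout as described in the article:
# (hemisphere, cortex, channels per array, number of arrays)
arrays = [
    ("left", "primary motor", 96, 2),
    ("left", "somatosensory", 32, 2),
    ("right", "primary motor", 96, 1),
    ("right", "somatosensory", 32, 1),
]

# Total number of implanted arrays and recording channels.
total_arrays = sum(count for *_, count in arrays)
total_channels = sum(channels * count for _, _, channels, count in arrays)

# Combined capacity of the three 128-channel signal processors.
processors = 3
channels_per_processor = 128
capacity = processors * channels_per_processor

print(f"arrays: {total_arrays}, channels: {total_channels}, capacity: {capacity}")
```

Under these figures, the six arrays account for exactly as many channels as the three processors can record.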

The intention was to have the robotic arms handle the majority of the work while leaving the participant some degree of control. The researchers hypothesized that sharing control of the robotic limbs would allow greater dexterity during a task that calls for bimanual coordination and delicate manipulation. The approximate locations of the participant’s mouth, the food, and the plate were provided to the robot in advance.
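One common way to picture shared control is as a weighted mix of two velocity commands: one decoded from the user and one generated by the robot from locations it already knows. The Python sketch below illustrates that general idea only. It is not the method used in the study, whose control scheme is not detailed here, and the function names, the weight, and the coordinates are all hypothetical.

```python
def autonomous_velocity(position, target, gain=1.0):
    """Proportional command that moves a tool toward a known target location."""
    return tuple(gain * (t - p) for p, t in zip(position, target))


def blend(user_vel, robot_vel, user_weight):
    """Linearly mix the user's command with the robot's command.

    user_weight = 1.0 gives the user full control; 0.0 gives the robot full control.
    """
    if not 0.0 <= user_weight <= 1.0:
        raise ValueError("user_weight must be between 0 and 1")
    return tuple(
        user_weight * u + (1.0 - user_weight) * r
        for u, r in zip(user_vel, robot_vel)
    )


# Hypothetical values: positions in meters, velocities in meters per second.
fork_position = (0.0, 0.0, 0.0)
mouth_position = (0.0, 0.4, 0.2)   # assumed to be given to the robot in advance
user_cmd = (0.1, 0.0, 0.0)         # stand-in for a neurally decoded velocity

robot_cmd = autonomous_velocity(fork_position, mouth_position)
command = blend(user_cmd, robot_cmd, user_weight=0.25)
print(command)
```

With a low user weight, the robot does most of the work of reaching the target while the user can still nudge the motion, which mirrors the division of labor described above.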

“Using neurally-driven shared control, the participant successfully and simultaneously controlled movements of both robotic limbs to cut and eat food in a complex bimanual self-feeding task,” reported the researchers.