Microcontrollers, tiny processors that can run simple commands, are the foundation for billions of connected devices, from internet-of-things (IoT) gadgets to sensors in automobiles. But low-cost, low-power microcontrollers have extremely limited memory and no operating system, which makes it difficult to train artificial intelligence models on “edge devices” that operate independently from central computing resources.

Training a machine-learning model on an intelligent edge device allows it to adapt to new data and make more accurate predictions. A smart keyboard with a model trained on it, for instance, could continually learn from its user’s writing.

However, the training process requires so much memory that it is normally carried out on powerful computers in a data center before the model is deployed on a device. This is more expensive and raises privacy concerns, because user data must be sent to a central server.

To address this problem, researchers from MIT and the MIT-IBM Watson AI Lab developed a new technique that enables on-device training using less than a quarter of a megabyte of memory. Other training solutions designed for connected devices can use more than 500 megabytes of memory, far exceeding the 256-kilobyte capacity of most microcontrollers (there are 1,024 kilobytes in one megabyte).
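To make the size of that gap concrete, the figures quoted above can be put on the same scale. This is only a back-of-the-envelope sketch using the numbers in the text; the variable names are illustrative.

```python
KB_PER_MB = 1024  # conversion stated in the text

mcu_limit_kb = 256         # memory limit of most microcontrollers, per the text
other_training_mb = 500    # memory other training solutions can exceed, per the text

# Convert to kilobytes so both figures share a unit.
other_training_kb = other_training_mb * KB_PER_MB
ratio = other_training_kb / mcu_limit_kb

print(f"{other_training_mb} MB = {other_training_kb} KB")
print(f"That is {ratio:.0f} times the {mcu_limit_kb} KB limit")
```

In other words, a training system that needs 500 megabytes overshoots a 256-kilobyte microcontroller by roughly three orders of magnitude.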

The researchers’ algorithms and framework reduce the amount of computation required to train a model, which makes the process faster and more memory efficient. Their method enables quick training of a machine-learning model on a microcontroller.

Because the data stay on the device, this method also protects privacy, which may be especially valuable when the data are sensitive, as in medical applications. It could also make it possible to customize a model to suit users’ needs. Moreover, the framework maintains or improves the model’s accuracy compared with other training methods.

“Our study enables IoT devices to not only perform inference but also continuously update the AI models to newly collected data, paving the way for lifelong on-device learning. The low resource utilization makes deep learning more accessible and can have a broader reach, especially for low-power edge devices,” says Song Han, an associate professor in the Department of Electrical Engineering and Computer Science (EECS), a member of the MIT-IBM Watson AI Lab, and senior author of the paper describing this innovation.


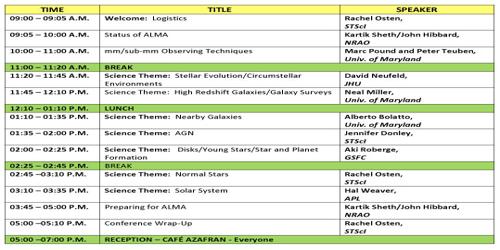

Joining Han on the paper are co-lead authors and EECS PhD students Ji Lin and Ligeng Zhu, as well as MIT postdocs Wei-Ming Chen and Wei-Chen Wang, and Chuang Gan, a principal research staff member at the MIT-IBM Watson AI Lab. The research will be presented at the Conference on Neural Information Processing Systems.

As part of their TinyML program, Han and his team have already tackled the memory and computational limitations that arise when attempting to execute machine-learning models on tiny edge devices.

Lightweight training

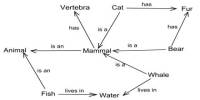

A common type of machine-learning model is known as a neural network. These models, which are loosely based on the human brain, have layers of connected nodes, or neurons, that process data to carry out a task, such as identifying people in photographs. For the model to learn the task, it must first be trained, which entails showing it millions of examples.

The model alters the weights, or degrees of strength, of the connections between neurons as it learns. As the model learns, it may go through hundreds of updates, and each round requires the storage of the intermediate activations.

In a neural network, activations are the intermediate results of the middle layers. Because there may be millions of weights and activations, training a model requires far more memory than running a pre-trained model, Han explains.
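A rough way to see why training needs more memory than running a model is to compare which activations must be held at once. The sketch below uses a deliberately simplified model of a plain chain of layers, and the per-layer sizes are hypothetical numbers chosen only for illustration.

```python
# Hypothetical activation sizes in bytes, one entry per tensor along a simple chain
# (illustrative values, not measurements from the paper).
activation_bytes = [48_000, 32_000, 32_000, 16_000, 8_000]


def inference_peak(acts):
    """Running one layer needs only its input and output buffers at the same time;
    earlier buffers can be released as soon as they have been consumed."""
    return max(a + b for a, b in zip(acts, acts[1:]))


def training_peak(acts):
    """Backpropagation revisits every stored activation, so in this simplified
    model all of them stay in memory until the backward pass has used them."""
    return sum(acts)


print("inference peak:", inference_peak(activation_bytes), "bytes")
print("training peak: ", training_peak(activation_bytes), "bytes")
```

Real frameworks apply further optimizations in both modes, so the exact numbers differ, but the asymmetry is the point: inference memory tracks the largest neighboring pair of buffers, while training memory grows with the depth of the network.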

Han and his colleagues used two algorithmic approaches to make the training process more efficient and less memory-intensive. The first, called sparse update, employs an algorithm to determine which weights should be updated in each round of training.

The algorithm starts freezing the weights one at a time and stops once the accuracy dips to a predetermined threshold. The remaining weights are updated, while the activations corresponding to the frozen weights do not need to be stored in memory.
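The freezing procedure described above can be sketched as a simple greedy loop. This is a minimal illustration, not the researchers' implementation: `select_frozen`, the `evaluate` callback, and the toy accuracy costs are all hypothetical, and the sketch assumes the freeze that would push accuracy below the threshold is rejected rather than kept.

```python
from typing import Callable, List, Set


def select_frozen(
    weight_names: List[str],
    evaluate: Callable[[Set[str]], float],
    min_accuracy: float,
) -> Set[str]:
    """Freeze weights one at a time; stop when accuracy would fall below the floor."""
    frozen: Set[str] = set()
    for name in weight_names:
        candidate = frozen | {name}
        if evaluate(candidate) < min_accuracy:
            break  # this freeze costs too much accuracy, so stop here
        frozen = candidate
    return frozen


# Toy stand-in for a real accuracy measurement: each frozen weight group
# subtracts a fixed, made-up amount from a baseline accuracy of 0.90.
cost = {"conv1": 0.01, "conv2": 0.02, "conv3": 0.05, "fc": 0.20}


def toy_evaluate(frozen: Set[str]) -> float:
    return 0.90 - sum(cost[n] for n in frozen)


frozen = select_frozen(list(cost), toy_evaluate, min_accuracy=0.85)
trainable = [n for n in cost if n not in frozen]
print("frozen:   ", sorted(frozen))
print("trainable:", trainable)
```

Only the weights left in `trainable` are updated, so the activations needed solely for the frozen weights' gradients never have to be stored.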

“Updating the whole model is very expensive because there are a lot of activations, so people tend to update only the last layer, but as you can imagine, this hurts the accuracy. For our method, we selectively update those important weights and make sure the accuracy is fully preserved,” Han says.

Their second method involves quantized training and simplifying the weights, which are normally 32 bits. A quantization algorithm rounds the weights so they are only eight bits, which reduces the amount of memory required for both training and inference.
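As a rough illustration of what rounding weights to eight bits involves, the sketch below uses symmetric per-tensor quantization, one common scheme. The article does not say which scheme the researchers use, so the function names, the scaling rule, and the example weights are assumptions.

```python
def quantize_int8(weights):
    """Map floats onto the integer grid [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest grid point and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


weights = [0.50, -1.27, 0.03, 1.00]   # hypothetical 32-bit float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print("int8 values:", q)
print("scale:", scale)
print("max rounding error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now occupies one byte instead of four, at the price of a rounding error of at most half a grid step per weight.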

Inference is the process of applying a model to a dataset and generating a prediction. The approach then applies a technique called quantization-aware scaling (QAS), which acts like a multiplier to adjust the ratio between weight and gradient, avoiding any drop in accuracy that may come from quantized training.
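The article says only that QAS acts as a multiplier on the weight-to-gradient ratio. One way to see why such a multiplier is needed is a scaling argument: if a weight is stored as `w / scale`, the chain rule makes its gradient `scale` times the original, so the ratio between the two is off by a factor of `1 / scale**2`. The sketch below checks this numerically. The compensating factor `1 / scale**2`, the example values, and the decision to ignore rounding are assumptions made for illustration, not details confirmed by the article.

```python
import math


def l2(values):
    """Euclidean norm of a list of numbers."""
    return math.sqrt(sum(x * x for x in values))


scale = 0.01                      # hypothetical quantization scale
w = [0.50, -1.27, 0.03, 1.00]     # hypothetical float weights
g = [0.02, -0.01, 0.04, 0.03]     # hypothetical float gradients

# Weights as they sit on the quantized grid (rounding ignored for clarity).
w_q = [x / scale for x in w]
# Chain rule: dL/dw_q = scale * dL/dw.
g_q = [x * scale for x in g]

ratio_float = l2(w) / l2(g)
ratio_quant = l2(w_q) / l2(g_q)          # distorted by 1 / scale**2

# Assumed compensating multiplier applied to the gradient.
g_scaled = [x / scale**2 for x in g_q]
ratio_scaled = l2(w_q) / l2(g_scaled)    # matches the float ratio again

print(f"float ratio:     {ratio_float:.3f}")
print(f"quantized ratio: {ratio_quant:.3f}")
print(f"after scaling:   {ratio_scaled:.3f}")
```

With the ratio restored, an optimizer step on the quantized weights has the same relative size as it would in floating point, which is the kind of mismatch the multiplier is meant to correct.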

The researchers developed a system, called a tiny training engine, that can run these algorithmic innovations on a simple microcontroller that lacks an operating system. This system changes the order of steps in the training process so that more work is completed in the compilation stage, before the model is deployed on the edge device.

“We push a lot of the computation, such as auto-differentiation and graph optimization, to compile time. We also aggressively prune the redundant operators to support sparse updates. Once at runtime, we have much less workload to do on the device,” Han explains.
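The idea of deciding at compile time which backward operators the device must run can be sketched for a plain chain of layers. This is a hypothetical illustration of operator pruning under sparse updates, not the researchers' engine; `plan_backward` and the operator labels are invented for the example.

```python
from typing import List, Set


def plan_backward(layers: List[str], trainable: Set[str]) -> List[str]:
    """Return the backward operators that must run on-device, last layer first.

    A weight-gradient op is needed only for trainable layers. An input-gradient
    op is needed only if some earlier layer is trainable and must receive it.
    """
    indices = [i for i, name in enumerate(layers) if name in trainable]
    if not indices:
        return []
    first_trainable = min(indices)
    ops: List[str] = []
    for i in range(len(layers) - 1, first_trainable - 1, -1):
        name = layers[i]
        if name in trainable:
            ops.append(f"grad_weight[{name}]")
        if i > first_trainable:
            ops.append(f"grad_input[{name}]")
    return ops


layers = ["conv1", "conv2", "conv3", "fc"]
full_plan = plan_backward(layers, set(layers))
sparse_plan = plan_backward(layers, {"conv3", "fc"})

print("full update:  ", full_plan)
print("sparse update:", sparse_plan)
```

Because the plan is computed ahead of time, everything behind the earliest trainable layer simply never appears in the program that runs on the microcontroller.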

A successful speedup

Their optimization required only 157 kilobytes of memory to train a machine-learning model on a microcontroller, far less than other techniques designed for lightweight training.

They tested their framework by training a computer vision model to detect people in images. After only about 10 minutes of training, it learned to complete the task successfully. Their method was able to train a model more than 20 times faster than other approaches.

The researchers intend to apply these techniques to language models and various sorts of data, such as time-series data, now that they have shown how well they work for computer vision models.

They also hope to use what they have learned to shrink larger models without sacrificing accuracy, which could help reduce the environmental impact of training large-scale machine-learning models.

This work is funded by the National Science Foundation, the MIT-IBM Watson AI Lab, the MIT AI Hardware Program, Amazon, Intel, Qualcomm, Ford Motor Company, and Google.