Multi-Core Processor

Multi-core processing is a growing industry trend as single-core processors rapidly reach the physical limits of possible complexity and speed. Most current systems are multi-core. Systems with a large number of processor cores (tens or hundreds) are sometimes referred to as many-core or massively multi-core systems.

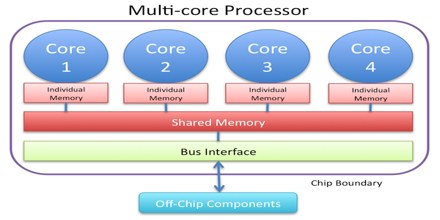

A multi-core processor is a single computing component composed of two or more independent processing units, called cores, that read and execute program instructions. A dual-core setup is somewhat comparable to having two separate processors installed in the same computer, but because the two cores sit in the same socket, the connection between them is faster.

A multi-core processor implements multiprocessing in a single physical package. Designers may couple cores in a multi-core device tightly or loosely. For example, cores may or may not share caches, and they may communicate by message passing or through shared memory. Common network topologies for interconnecting cores include bus, ring, two-dimensional mesh, and crossbar. Homogeneous multi-core systems include only identical cores; heterogeneous multi-core systems have cores that are not identical (e.g. big.LITTLE designs have heterogeneous cores that share the same instruction set, while AMD Accelerated Processing Units have cores that don’t even share the same instruction set). Just as with single-processor systems, cores in multi-core systems may implement architectures such as VLIW, superscalar, vector, or multithreading.
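The two inter-core communication styles mentioned above can be illustrated with a software-level analogy. The sketch below is not a model of any hardware: it uses Python threads as stand-ins for cores, and the names `WORKERS`, `ITEMS_PER_WORKER`, `shared_memory_sum`, and `message_passing_sum` are hypothetical. In the first function all workers update one shared variable under a lock; in the second, each worker keeps private state and sends a single message with its result.

```python
import queue
import threading

WORKERS = 4              # hypothetical number of "cores"
ITEMS_PER_WORKER = 1000  # hypothetical amount of work per worker


def _run_all(target) -> None:
    """Start one thread per worker and wait for all of them to finish."""
    threads = [threading.Thread(target=target) for _ in range(WORKERS)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def shared_memory_sum() -> int:
    """Shared-memory style: every worker updates the same counter."""
    total = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal total
        for _ in range(ITEMS_PER_WORKER):
            with lock:  # serialize access to the shared variable
                total += 1

    _run_all(worker)
    return total


def message_passing_sum() -> int:
    """Message-passing style: workers share nothing and send results."""
    mailbox: queue.Queue = queue.Queue()

    def worker() -> None:
        local = 0  # private to this worker, so no lock is needed
        for _ in range(ITEMS_PER_WORKER):
            local += 1
        mailbox.put(local)  # one message carrying the partial result

    _run_all(worker)
    return sum(mailbox.get() for _ in range(WORKERS))
```

Both functions return the same total. The shared-memory version pays for synchronization on every update, while the message-passing version pays only once per worker, which loosely mirrors the trade-off designers face when choosing between tightly and loosely coupled cores.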

Multi-core processors are widely used across many application domains, including general-purpose, embedded, network, digital signal processing (DSP), and graphics (GPU).

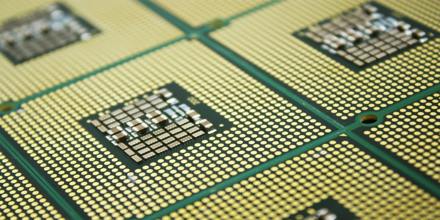

The first commercial multi-core processors appeared in the early 2000s, and Intel and AMD brought dual-core chips to mainstream PCs in the mid-2000s. Since then, processors have been created with two cores (“dual core”), four cores (“quad core”), six cores (“hexa core”), eight cores (“octa core”), and so on. Processors have also been made with as many as 100 physical cores, and chip designers have used field-programmable gate arrays (FPGAs) to create processors with 1000 effective independent cores.

Multi-Core Processors Hardware and Development

Multi-core chips combined with simultaneous multithreading, on-chip memory, and special-purpose “heterogeneous” (or asymmetric) cores promise further performance and efficiency gains, especially in multimedia, recognition, and networking applications. Chips designed from the outset for a large number of cores (rather than evolved from single-core designs) are sometimes referred to as many-core designs, emphasising the qualitative differences.

The composition and balance of the cores in multi-core architecture show great variety. Some architectures use one core design repeated consistently (“homogeneous”), while others use a mixture of different cores, each optimized for a different, “heterogeneous” role.

Several business motives drive the development of multi-core architectures. For decades, it was possible to improve performance of a CPU by shrinking the area of the integrated circuit (IC), which reduced the cost per device on the IC. Alternatively, for the same circuit area, more transistors could be used in the design, which increased functionality, especially for complex instruction set computing (CISC) architectures. Clock rates also increased by orders of magnitude in the decades of the late 20th century, from several megahertz in the 1980s to several gigahertz in the early 2000s.

As the rate of clock speed improvements slowed, manufacturers turned to parallel computing in the form of multi-core processors to keep improving overall processing performance. Placing multiple cores on the same CPU chip also gave vendors a new selling point, which could lead to better sales of chips with two or more cores. For example, Intel has produced a 48-core processor for research in cloud computing; each core has an x86 architecture.

Various other methods are used to improve CPU performance. Some instruction-level parallelism (ILP) methods such as superscalar pipelining are suitable for many applications, but are inefficient for others that contain difficult-to-predict code. Many applications are better suited to thread-level parallelism (TLP) methods, and multiple independent CPUs are commonly used to increase a system’s overall TLP. A combination of increased available space (due to refined manufacturing processes) and the demand for increased TLP led to the development of multi-core CPUs.

Software Dependent

While the concept of multi-core processors sounds very appealing, there is a major caveat. For the true benefits of multiple cores to be seen, the software running on the computer must be written to support multithreading. Without that support, a program's work runs primarily on a single core and the other cores sit idle, which wastes most of the processor's capacity. After all, if a program can only use one core of a quad-core processor, it may actually run faster on a dual-core processor with a higher base clock speed.
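The quad-core versus dual-core comparison above can be made concrete with Amdahl's law, a standard model that the article does not name but that captures the same reasoning: if a fraction p of a program can run in parallel on n cores, the speedup is 1 / ((1 - p) + p / n). The sketch below is a rough estimate only; the clock speeds, the parallel fractions, and the helper `relative_throughput` are illustrative assumptions, and real performance also depends on caches, memory bandwidth, and per-core design.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup over one core when `parallel_fraction` of the work scales."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def relative_throughput(clock_ghz: float, cores: int,
                        parallel_fraction: float) -> float:
    """Crude figure of merit: clock speed scaled by the Amdahl speedup.

    Assumes equal work per clock cycle on both chips, which is a
    simplification made for illustration.
    """
    return clock_ghz * amdahl_speedup(parallel_fraction, cores)


# Hypothetical chips: a 3.0 GHz quad-core and a 3.5 GHz dual-core.
for p in (0.0, 0.5, 0.9):
    quad = relative_throughput(3.0, 4, p)
    dual = relative_throughput(3.5, 2, p)
    print(f"parallel fraction {p:.1f}: quad={quad:.2f}, dual={dual:.2f}")
```

Under these assumptions the dual-core chip wins for purely single-threaded code (p = 0), and the quad-core chip pulls ahead as the parallel fraction grows.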

Thankfully, all of the major current operating systems have multithreading capability. But multithreading must also be written into the application software. Support in consumer software has greatly improved, but many simple programs still do not implement it because of the added complexity.

For instance, a mail program or web browser is not likely to benefit from multithreading as much as, say, a graphics or video editing program, where the computer is doing complex calculations.

Multi-Core Processor Advantages & Disadvantages

Advantages

The proximity of multiple CPU cores on the same die allows the cache coherency circuitry to operate at a much higher clock rate than is possible if the signals have to travel off-chip. Combining equivalent CPUs on a single die significantly improves the performance of cache snoop (also called bus snooping) operations. Because on-chip signals travel shorter distances, they degrade less, so more data can be sent in a given time period: individual signals can be shorter and do not need to be repeated as often.

The largest boost in performance will likely be noticed in improved response time while running CPU-intensive processes, like antivirus scans, ripping/burning media (requiring file conversion), or searching for folders.

Assuming that the die can physically fit into the package, multi-core CPU designs require much less printed circuit board (PCB) space than multi-chip SMP designs. A dual-core processor also uses slightly less power than two coupled single-core processors, principally because of the decreased power required to drive signals external to the chip. In terms of competing technologies for the available silicon die area, a multi-core design can make use of proven CPU core library designs and produce a product with lower risk of design error than devising a new, wider core design. The alternative of spending the area on more cache suffers from diminishing returns.

Disadvantages

In addition to operating system (OS) support, adjustments to existing software are required to maximize utilization of the computing resources provided by multi-core processors. The ability of multi-core processors to increase application performance also depends on the use of multiple threads within applications. Some development tools aim to ease this burden; for example, Emergent Game Technologies’ Gamebryo engine includes Floodgate technology, which is intended to simplify multi-core development across game platforms.

Integrating multiple cores on one chip drives production yields down, and multi-core chips are more difficult to manage thermally than lower-density single-core designs. Intel has partially countered the yield problem by building its quad-core designs from two dual-core dies, each with a unified cache, combined in a single package. Any two working dual-core dies can then be used, as opposed to producing four cores on a single die and requiring all four to work to produce a quad-core. From an architectural point of view, single-CPU designs may ultimately make better use of the silicon surface area than multiprocessing cores, so a development commitment to this architecture may carry the risk of obsolescence. Finally, raw processing power is not the only constraint on system performance. Two processing cores sharing the same system bus and memory bandwidth limit the real-world performance advantage. Conversely, an application that used two separate CPUs could end up running faster on one dual-core processor if communication between the CPUs was the limiting factor, which would count as more than 100% improvement.