Humans are usually pretty good at recognizing mistakes, but artificial intelligence systems are not. According to a new study, AI has inherent limitations caused by a century-old mathematical paradox.

Like some people, AI systems often have a level of confidence that far exceeds their actual abilities. And like an overconfident person, many AI systems don’t know when they are making mistakes. Sometimes it is even harder for an AI system to realize that it is making a mistake than to produce a correct result.

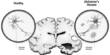

According to researchers from the University of Cambridge and the University of Oslo, instability is the Achilles’ heel of modern AI, and a mathematical paradox exposes AI’s limitations. Neural networks, the most advanced tool in AI, roughly mimic the connections between neurons in the brain. The researchers show that there are problems where stable and accurate neural networks exist, yet no algorithm can produce such a network. Algorithms can compute stable and accurate neural networks only in specific cases.

The researchers propose a classification theory that describes the conditions under which neural networks can be trained to provide a trustworthy AI system. Their findings have been published in the Proceedings of the National Academy of Sciences.


Deep learning, the leading AI technology for pattern recognition, has received a lot of attention recently, with headlines about systems that diagnose disease more accurately than physicians or prevent traffic accidents through self-driving vehicles. However, many deep learning systems are untrustworthy and easily fooled.

“Many AI systems are unstable, and this is becoming a significant liability, particularly as they are increasingly used in high-risk areas such as disease diagnosis or autonomous vehicles,” said co-author Professor Anders Hansen of Cambridge’s Department of Applied Mathematics and Theoretical Physics. “If AI systems are used in areas where they can cause serious harm if they go wrong, trust in those systems must be prioritized.”

The researchers traced the paradox back to two mathematical giants of the twentieth century: Alan Turing and Kurt Gödel. At the turn of the twentieth century, mathematicians attempted to justify mathematics as the ultimate consistent language of science. Turing and Gödel, however, showed a paradox at the heart of mathematics: some mathematical statements can be neither proved nor disproved, and some computational problems cannot be solved by any algorithm. Furthermore, any consistent mathematical system rich enough to describe the arithmetic we learn in school cannot prove its own consistency.

Decades later, the mathematician Steve Smale proposed a list of 18 unsolved mathematical problems for the 21st century. The 18th problem concerned the limits of intelligence for both humans and machines.

“The paradox first identified by Turing and Gödel has now been brought forward into the world of AI by Smale and others,” said co-author Dr. Matthew Colbrook of the Department of Applied Mathematics and Theoretical Physics. “There are fundamental limits inherent in mathematics, and similarly, AI algorithms cannot exist for certain problems.”

According to the researchers, because of this paradox, there are cases where good neural networks exist, yet an inherently trustworthy one cannot be built. “No matter how accurate your data is, you can never get the perfect information to build the required neural network,” said co-author Dr. Vegard Antun of the University of Oslo.

This impossibility holds regardless of the amount of training data: no matter how much data an algorithm can access, it will not produce the desired network, even though that network exists. “This is analogous to Turing’s argument: there are computational problems that cannot be solved regardless of computing power or runtime,” Hansen explained.

According to the researchers, not all AI is inherently flawed, but it is reliable only in specific areas and when specific methods are used. “The problem is in areas where you need a guarantee, because many AI systems are a black box,” Colbrook explained. “It’s perfectly fine for an AI to make mistakes in some situations, but it must be honest about it. And that’s not what we’re seeing for many systems: there’s no way of knowing when they’re more confident or less confident about a decision.”

“Currently, AI systems can have a touch of guesswork to them,” Hansen said. “You try something, and if it doesn’t work, you add more stuff in the hope that it will. Eventually you get tired of not getting what you want, and you try a different approach. It is critical to understand the limitations of various approaches. We have reached the point where AI’s practical successes have far outpaced theory and understanding. A program on understanding the foundations of AI computing is needed to bridge this gap.”

“When 20th-century mathematicians discovered various paradoxes, they did not abandon their study of mathematics. They just had to find new paths, because they understood the limitations,” Colbrook said. “In the case of AI, it may be a matter of changing paths or developing new ones to build systems that can solve problems in a trustworthy and transparent manner, while also understanding their limitations.”

As a next step, the researchers plan to combine approximation theory, numerical analysis, and the foundations of computation to determine which neural networks can be computed by algorithms and which can be made stable and trustworthy. Just as the paradoxes Gödel and Turing identified about the limitations of mathematics and computers led to rich foundational theories describing both the limitations and the possibilities of mathematics and computation, a similar foundational theory may emerge for AI.