Experimental data is often not only high-dimensional but also noisy and full of artifacts, which makes it challenging to analyze.

A team at HZB has now developed software that uses self-learning neural networks to compress the data intelligently and then reconstruct a low-noise version. This makes it possible to recognize correlations that would otherwise not be discernible.

The software has now been used successfully in photon diagnostics at the FLASH free-electron laser at DESY, but it is also suitable for very different applications in science.

More is not always better; sometimes it is a problem. In very complex data, which have many dimensions because of their numerous factors, correlations are often no longer discernible. This is especially true because experimentally obtained data are also noisy and disturbed by influences that cannot be controlled.

Helping humans to interpret the data

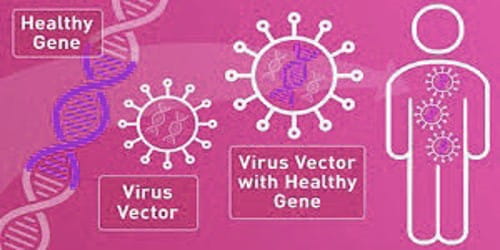

Now, new software based on artificial intelligence methods can help. It uses a special class of neural networks (NNs) that experts call a “disentangled variational autoencoder network” (β-VAE). Put simply, a first NN compresses the data and a second NN reconstructs it.

“In the process, the two NNs are trained so that the compressed form can be interpreted by humans,” explains Dr. Gregor Hartmann. The physicist and data scientist supervises the Joint Lab on Artificial Intelligence Methods at HZB, which is run by HZB together with the University of Kassel.
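To make the compress-then-reconstruct idea concrete, the following is a minimal sketch of a β-VAE objective in Python with NumPy. It is not the HZB team's software. The single linear layers standing in for the two networks, the function names, the array sizes, and the value `beta=4.0` are all assumptions chosen for illustration, and no training step is shown. The point is only to show how the loss combines a reconstruction term with a β-weighted penalty that pushes the compressed representation toward independent, simple components.

```python
import numpy as np

def encode(x, w_mu, w_logvar):
    """First network (here a single linear layer, as a stand-in):
    compress each input into the mean and log-variance of a small latent code."""
    return x @ w_mu, x @ w_logvar

def sample_latent(mu, logvar, rng):
    """Reparameterization step: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps

def decode(z, w_dec):
    """Second network (again a linear stand-in): reconstruct the input from z."""
    return z @ w_dec

def beta_vae_loss(x, x_rec, mu, logvar, beta=4.0):
    """Squared reconstruction error plus beta times the KL divergence
    between the latent distribution N(mu, sigma^2) and a unit Gaussian."""
    rec = np.mean(np.sum((x - x_rec) ** 2, axis=1))
    kl = np.mean(-0.5 * np.sum(1.0 + logvar - mu**2 - np.exp(logvar), axis=1))
    return rec + beta * kl, rec, kl

# Hypothetical toy data: 8 "shots" with 64 samples each, compressed to 3 numbers.
rng = np.random.default_rng(0)
x = rng.standard_normal((8, 64))
w_mu = 0.1 * rng.standard_normal((64, 3))
w_logvar = 0.1 * rng.standard_normal((64, 3))
w_dec = 0.1 * rng.standard_normal((3, 64))

mu, logvar = encode(x, w_mu, w_logvar)
z = sample_latent(mu, logvar, rng)
x_rec = decode(z, w_dec)
total, rec, kl = beta_vae_loss(x, x_rec, mu, logvar, beta=4.0)
print(z.shape, x_rec.shape)
```

In a real application both networks would be deep and non-linear, and their weights would be adjusted to minimize `total`. Raising `beta` above 1 trades some reconstruction accuracy for a more disentangled, and therefore more interpretable, compressed form.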

Extracting core principles without prior knowledge

Google DeepMind proposed using β-VAEs as early as 2017. Many experts assumed that applying them to real-world data would be difficult, because non-linear components are hard to disentangle.

“After several years of learning how the NNs learn, it finally worked,” says Hartmann. β-VAEs are able to extract the underlying core principle from data without prior knowledge.

Photon energy of FLASH determined

In the paper that has just been published, the team used the software to extract single-shot photoelectron spectra and calculate the photon energy of FLASH.

“We succeeded in extracting this information from noisy electron time-of-flight data, and much better than with conventional analysis methods,” says Hartmann. Even data with detector-specific artefacts can be cleaned up this way.

A powerful tool for different problems

“The method is really good when it comes to impaired data,” Hartmann emphasises.

The program can recreate even minute signals that were invisible in the raw data. In huge experimental data sets, such networks can assist in revealing unexpected physical effects or correlations.

“AI-based intelligent data compression is a very powerful tool, not only in photon science,” says Hartmann.

Now plug and play

In total, Hartmann and his team spent three years developing the software. “But now, it is more or less plug and play. We hope that soon many colleagues will come with their data and we can support them.”